Science is firstly a methodology. It is not firstly a body of proven knowledge, as rather pompous, self-important Covid-19 ex spurts and Little Greta would have us believe. Scientists should start from observation, then move through hypothesis, prediction and experiment to a cautious conclusion.

An honest scientist sets out to disprove the hypothesis, not prove it. But in these days of chasing grants and accolades, this golden rule is too frequently ignored, often with serious harm done.

I suspect that is the case with the highly politicised Covid-19 pronouncements of ‘The Science.’ In the process, unscrupulous nonentities are advanced up the science career ladder, where they feed off the work of brighter PhD students and, in many cases, then claim the credit.

In the case of Covid, the whole outpouring on how to deal with, and exaggerate, this problem brings such so-called experts to the fore, with guesswork passed off as science and with no account taken of the wider harm done to health and mass survival. R.J. Cook

Previous Science material is now on the Science Archive Page.

December 19th 2022

Why scientists can’t give up the hunt for alien life

There will always be “wolf-criers” whose claims wither under scrutiny. But aliens are certainly out there, if science dares to find them.

Key Takeaways

- All throughout human history, we’ve gazed up at the stars and wondered if we’re truly alone in the Universe, or if other life — possibly even intelligent life — is out there for us to find.

- Although there have been many who claim that aliens exist and that we’ve already contacted them, those claims have all withered under scrutiny, with their claimants having cried “wolf” with insufficient evidence.

- Nevertheless, the scientific case remains extremely compelling for suspecting that life, and possibly even intelligent life, is out there somewhere. Here’s why we must keep looking.

Ethan Siegel

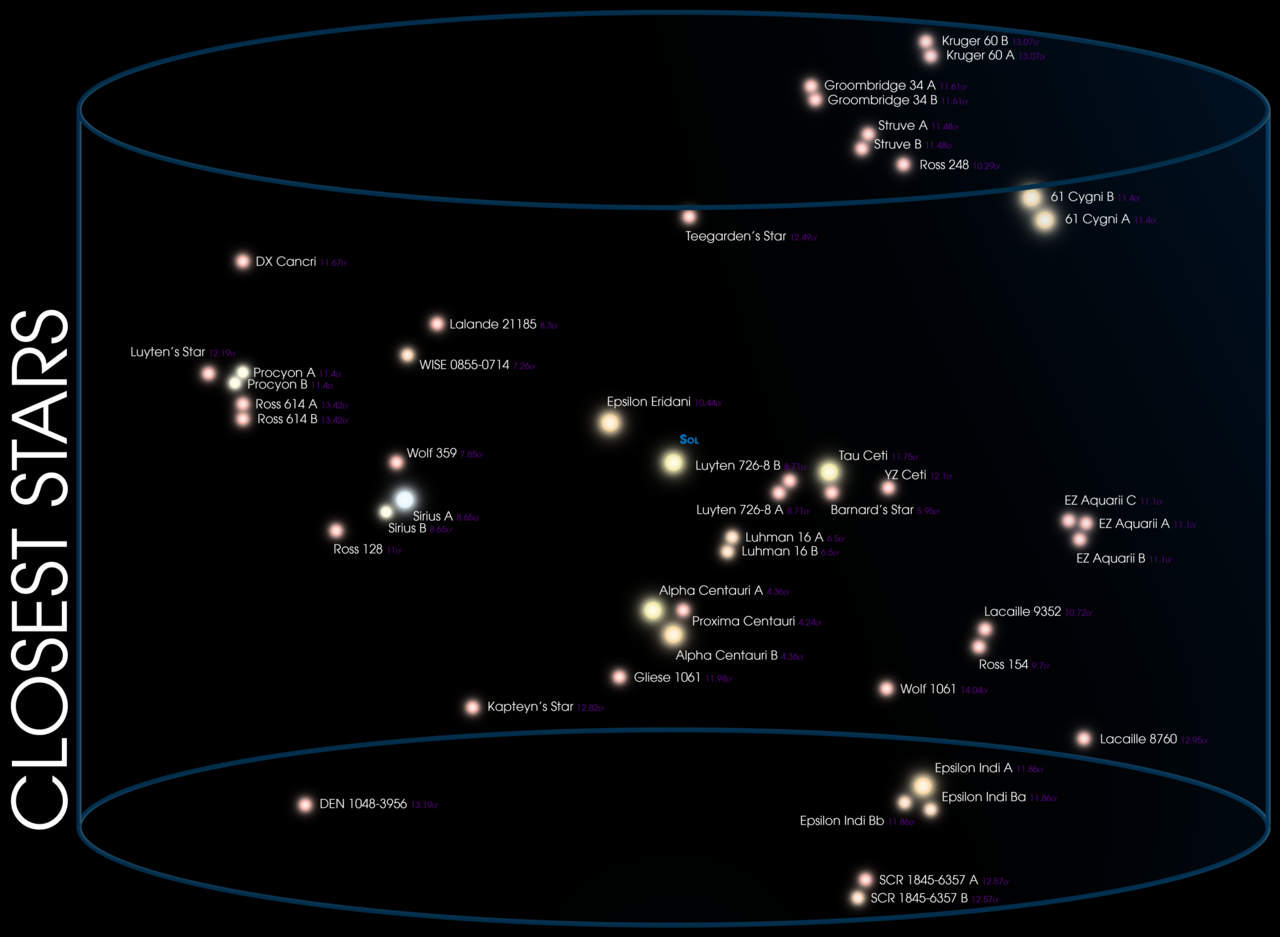

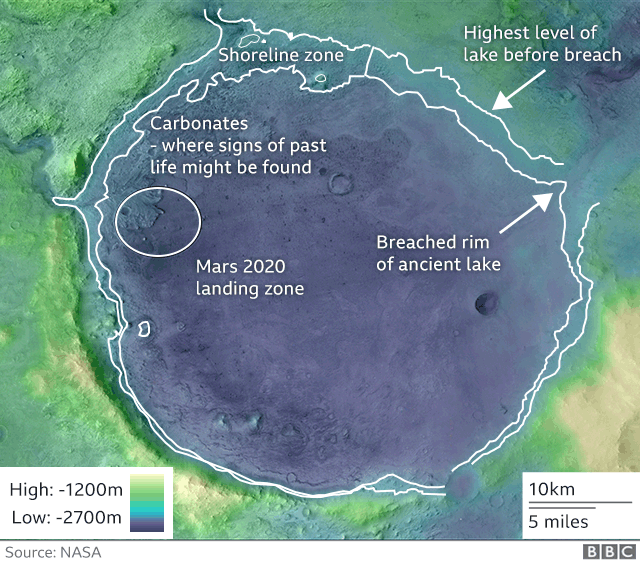

Despite all we’ve learned about ourselves and the physical reality that we all inhabit, the giant question of whether we’re alone in the Universe remains unanswered. We’ve explored the surfaces and atmospheres of many worlds in our own Solar System, but only Earth shows definitive signs of life: past or present. We’ve discovered more than 5,000 exoplanets over the past 30 years, identifying many Earth-sized, potentially inhabited worlds among them. Still, none of them have revealed themselves as actually inhabited, although the prospects for finding extraterrestrial life in the near future are tantalizing.

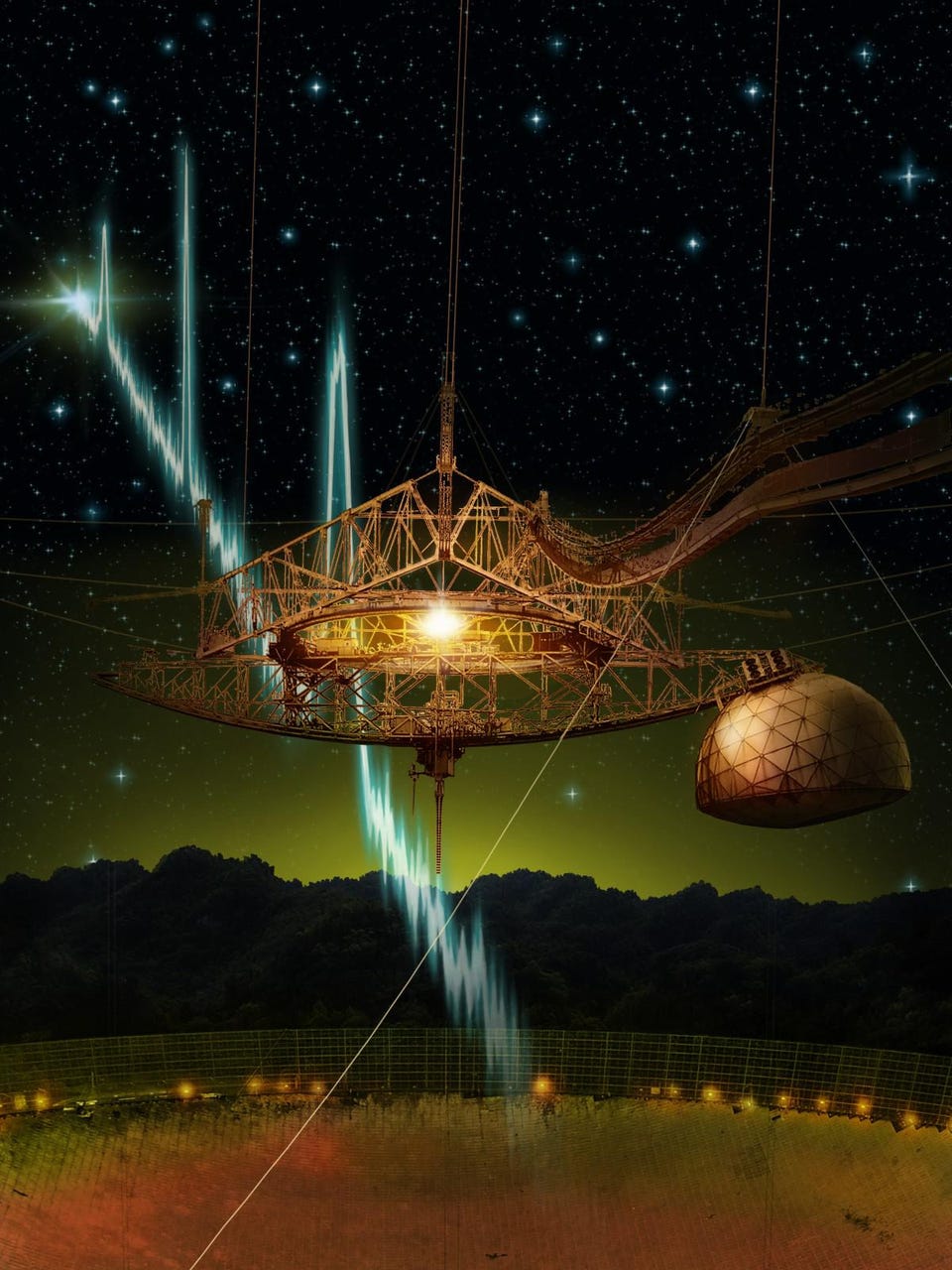

And finally, we’ve begun searching directly for any signals from space that might indicate the presence of an intelligent, technologically advanced civilization, through endeavors such as SETI (the Search for Extra-Terrestrial Intelligence) and Breakthrough Listen. All of these searches have returned only null results so far, despite memorably loud claims to the contrary. However, the fact that we haven’t met with success just yet should in no way discourage us from continuing to search for life on all three fronts, to the limits of our scientific capabilities. After all, when it comes to the biggest existential question of all, we have no right to expect that the lowest-hanging branches on the cosmic tree of life should bear fruit so easily.

Each of the three main ways we have of searching for life beyond the life that arose and continues to thrive on planet Earth has its own sets of advantages and disadvantages.

- We can access the surfaces and atmospheres of other worlds in our Solar System, enabling us to look for even tiny, microscopic signs of biological activity, including imprints left by ancient, now-extinct forms of life. But we may have to dig through tens of kilometers of ice to find it, or recognize life forms wholly unrelated to life-as-we-know-it on Earth.

- With thousands of exoplanets now known, the imminent technological advances that will enable transit spectroscopy and/or direct imaging of Earth-sized worlds could lead us to discover living planets with unmistakable biosignatures in their atmospheres. If life thriving on an Earth-sized world is common, positive detections are only a matter of time and resources.

- And searches for extraterrestrial intelligence offer the most profound rewards: a chance to make contact with another, perhaps even a technologically superior, intelligent species. The odds are unknown, but the payoff could be unfathomable.

For these (and other) reasons, the only sensible strategy is to continue to pursue all three methods to the limits of our capabilities, as without superior information, we have no way of knowing what sorts of probabilities any of these methods will have of yielding our first positive detection. After all, “Absence of evidence is not evidence of absence,” and that saying certainly applies to life in the Universe.

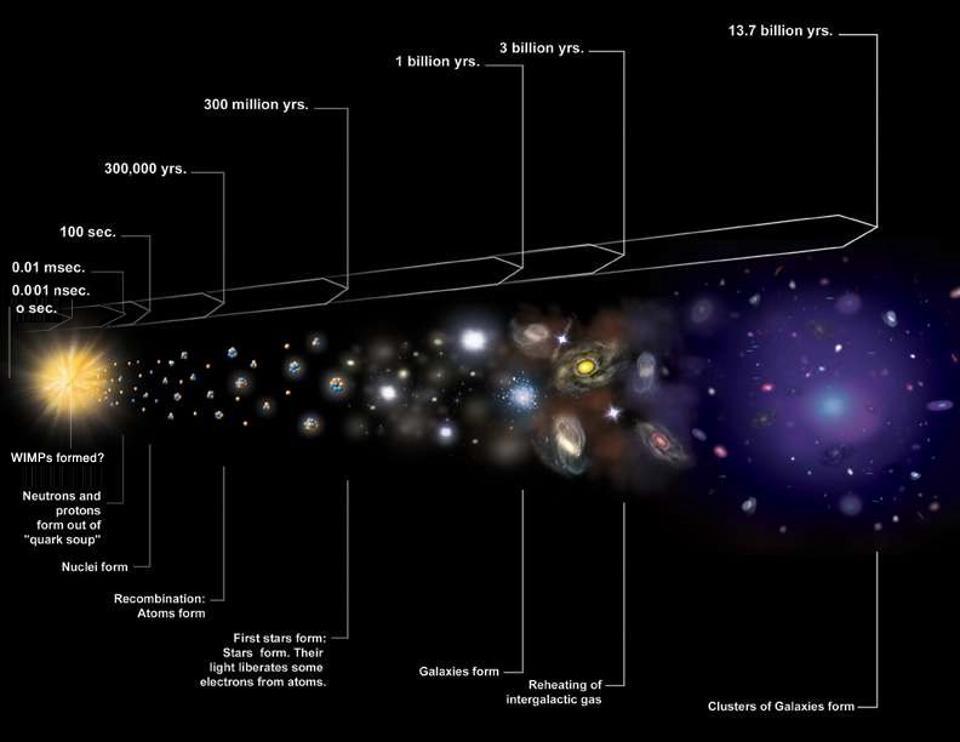

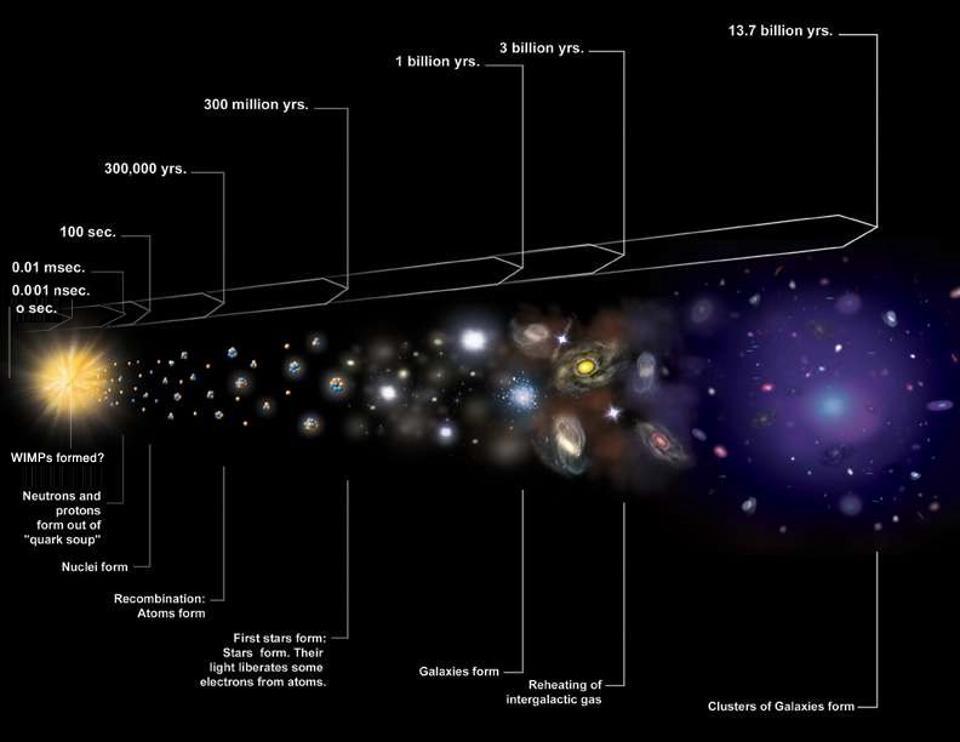

From a cosmic perspective, the laws that govern the Universe as well as the nature of the components that make it up indicate that the potential for life as a common occurrence might be absolutely inevitable. Initially, at the start of the hot Big Bang, our Universe was hot, dense, and filled with particles, antiparticles, and radiation moving at or indistinguishably close to the speed of light. In these beginning stages, neither the ingredients nor the conditions necessary for chemical-based life were in place; the Universe was born life-free. And yet, as time went on, the potential for biological activity would rise and rise.

As the Universe expanded and cooled, the following steps sequentially occurred:

- the particles and antiparticles annihilated away, leaving a tiny excess of matter behind,

- quarks and gluons formed bound states, giving rise to protons and neutrons,

- fusion reactions occurred, creating the light elements,

- atoms formed from these atomic nuclei and the surrounding bath of electrons,

- gravitational contraction and collapse took place, giving rise to stars,

- star clusters and other clumps of matter attracted one another, forming galaxies,

- and within those galaxies, successive generations of stars formed, creating heavy elements.

Once a galaxy becomes enriched enough with these heavy elements, the new generations of stars that follow can form with rocky worlds within those stellar systems, many of which will have the potential for life.

Within our observable Universe, since the dawn of the hot Big Bang, sextillions of stars have formed. Of those, the majority of them are found in large, massive, rich galaxies: galaxies comparable in size and mass to the Milky Way or greater. By the time billions of years have gone by, most of the new stars will have sufficiently large fractions of heavy elements to lead to the formation of rocky planets and molecules that are known as precursors to life. These precursor molecules have been found everywhere, from comets and asteroids to the interstellar medium to stellar outflows to planet-forming disks.

And, at this critical step, we find ourselves face-to-face with the end of our scientific certainty.

- Where, and under what conditions, does life come into existence?

- On those worlds where life arises, how frequently does it survive and thrive, persisting for billions of years?

- How often does that life saturate its habitable regions, transforming and feeding back on its biosphere?

- Where this occurs, how often does life diversify, becoming complex and differentiated?

- And where that occurs, how frequently does life become intelligent enough to become technologically advanced, capable of communicating across or even traversing the vast interstellar distances?

These questions aren’t merely there for us to philosophically ponder; they’re there for us to gather information about, and eventually, to draw scientifically valid conclusions about such probabilities.
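One way to picture how these questions chain together is as a product of step-by-step probabilities, in the spirit of a Drake-style estimate. The sketch below is only an illustration: the function name `expected_worlds`, the step labels, and every number in it are hypothetical placeholders rather than measured values, and it assumes the steps can be treated as independent fractions.

```python
from math import prod


def expected_worlds(n_candidate_worlds, step_probabilities):
    """Scale a count of candidate worlds by the probability of each successive step.

    step_probabilities maps a step label to a fraction between 0 and 1.
    """
    for name, p in step_probabilities.items():
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {p}")
    return n_candidate_worlds * prod(step_probabilities.values())


# Placeholder values for illustration only; none of these are known quantities.
steps = {
    "life_arises": 0.1,
    "life_persists": 0.1,
    "life_saturates_biosphere": 0.5,
    "life_becomes_complex": 0.1,
    "life_becomes_technological": 0.01,
}

print(expected_worlds(1e9, steps))
```

Because the result is a product, a single very small fraction anywhere in the chain drives the whole estimate toward zero, which is why pinning down each step observationally matters more than the raw number of candidate worlds.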

Of course, there are plenty of valid explanations for why we haven’t succeeded in our searches for life just yet. The most sobering — and the most pessimistic — is that it’s possible that one or more of the steps required to give rise to the type of life we’d be sensitive to are particularly difficult, and only rarely can the Universe achieve them. In other words, it’s possible that any one of life, sustained life, complex and differentiated life, or intelligent and technologically advanced life is rare, and that none of the worlds we’ve surveyed possesses it. That’s a possibility we have to keep in mind so long as we’re committed to remaining intellectually honest.

But there’s no reason, at least so far, to suspect that’s the primary reason we haven’t discovered life beyond Earth just yet. The old saying, “If at first you don’t succeed, try, try again,” applies wherever the odds of success are unknown, but we have every indication that success is possible under the right circumstances. Here on Earth, the evidence strongly indicates that our home planet is an example of such circumstances, and hence it’s likely that there are places all throughout space and time where life sustains itself, evolves to become complex and differentiated, and achieves a level of technological advancement sufficient for interstellar communication.

The big unknowns are in the probabilities of the various types of alien life that are actually out there, not in the question of whether such achievements are possible within our Universe.

That doesn’t mean that we should take seriously every claim that’s been made — even by scientists — that alien life has been found. The “Wow!” signal, for example, was a high-powered radio signal received over the span of 72 seconds back in 1977; although its nature is unknown, it has never been replicated, either back at the original source or anywhere else. Without confirmation or repeatability, we can draw no affirmative, definitive conclusions.

Fast radio bursts, like many signatures observed astronomically, appear in many locations both in and out of our galaxy, but show no indication that they were intelligently created; they are likely simply a natural astronomical phenomenon whose origins have yet to be determined.

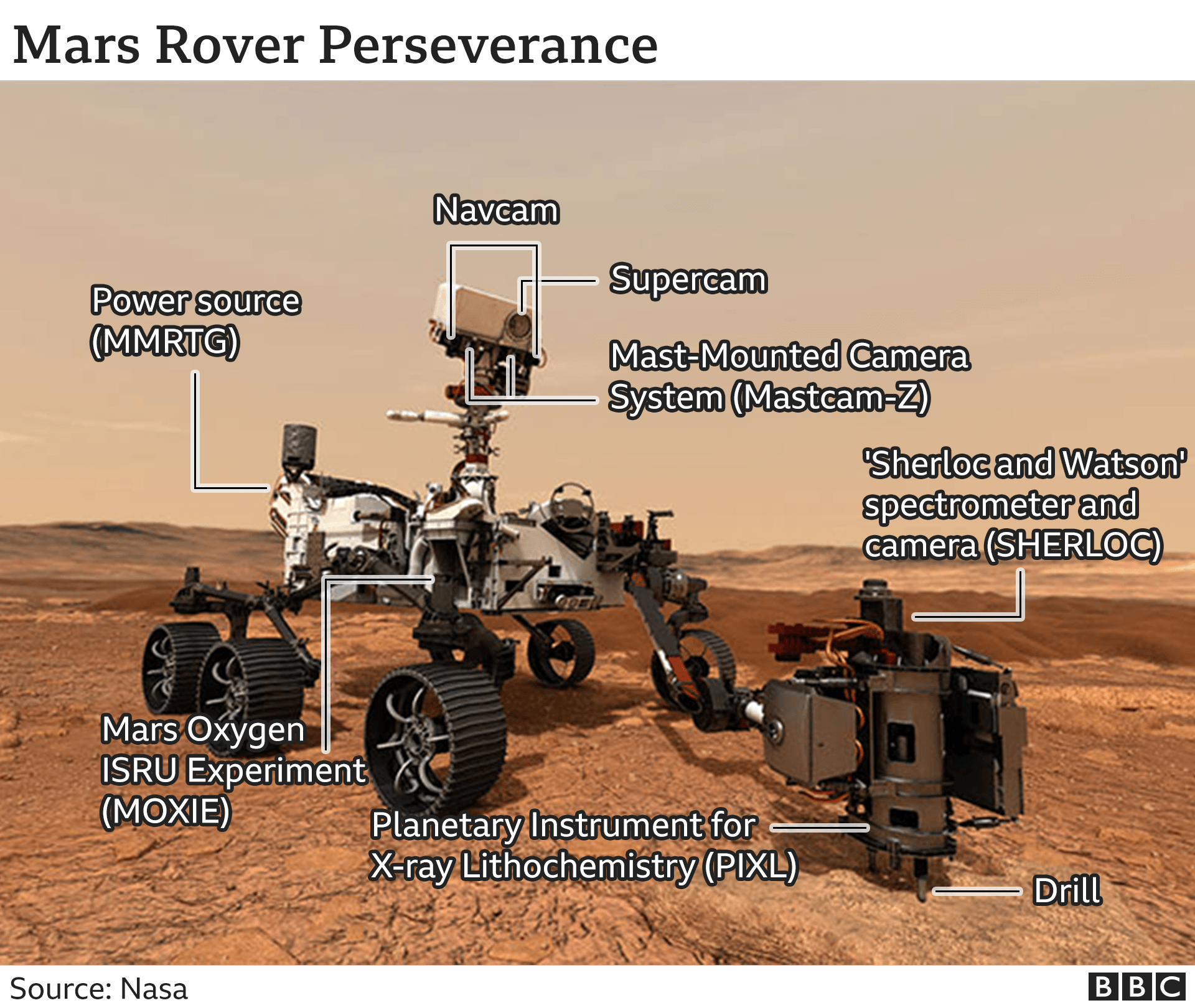

NASA’s Mars Viking lander conducted numerous tests for life on the Martian surface, with one experiment (the Labeled Release experiment) giving a positive signature. However, the possibility of contamination, the lack of reproducibility, and the lack of a verified follow-up experiment have cast tremendous doubt on the “biological positive” interpretation of the experiment.

And despite the possibility of encountering interstellar space probes, direct alien contact, or even the ubiquity of alien abduction stories, no robust verification of any of these claims has ever come forth. We have to keep our minds open while at the same time remaining skeptical of any grand claims. The conclusions we draw can only be as strong as the supporting evidence for them.

It’s primarily for these reasons — we have every indication that the Universe has all the necessary ingredients for life, but no indication that we’ve found it just yet — that it’s so vital to keep looking in a scientifically scrupulous way. When we do announce that we’ve found extraterrestrial life, we don’t want it to be another instance of crying “wolf” with insufficient evidence that we’ve found a wolf; we want the claim to be supported by overwhelming, unassailable evidence.

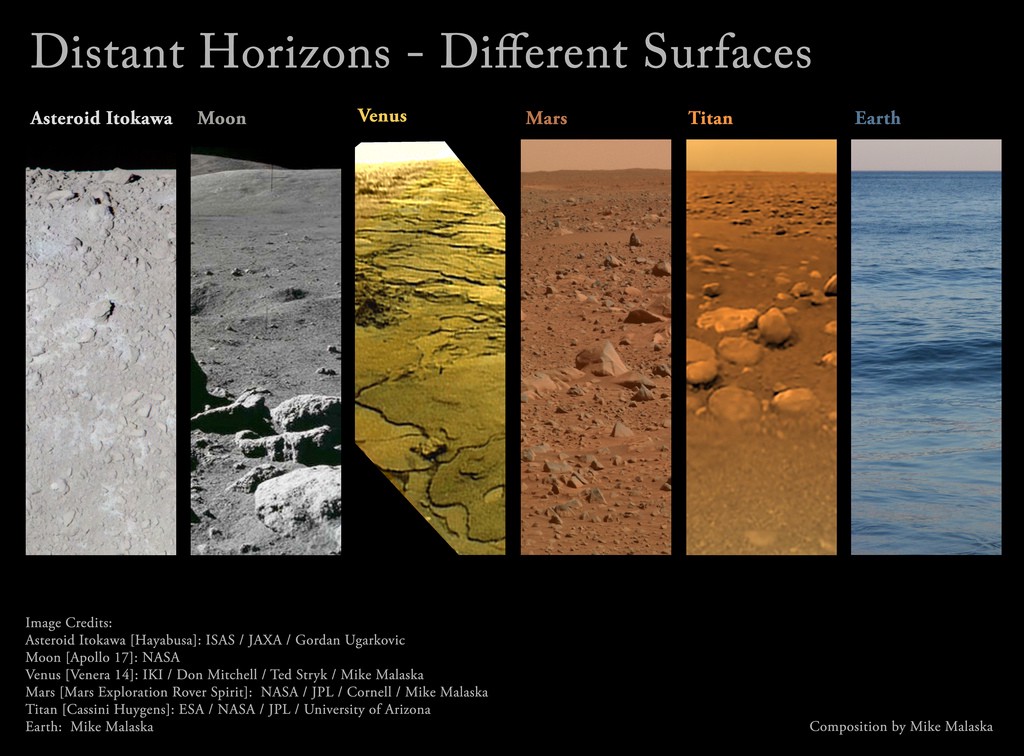

- That means building fleets of orbiters, landers, sample-return missions, and laboratory-equipped rovers to explore a wide variety of worlds in our Solar System: Venus’s atmosphere, Mars’s surface, Titan’s lakes, and the oceans of Europa, Enceladus, Triton, and Pluto, among others.

- That means building superior coronagraphs on world-class space-based and ground-based telescopes, considering the construction of a starshade, and investing in transit spectroscopy. By imaging the atmospheres and surfaces of exoplanets, including breaking down their molecular and atomic constituents and abundances over time, we should be able to identify any world with a life-saturated biosphere.

- And that means continuing to search, with greater precisions and sensitivities across the electromagnetic spectrum, for any signals that might come from an intelligent species seeking to communicate or announce their presence.

If you don’t find fruit on the lowest-hanging branches, that doesn’t necessarily mean you give up on the tree; it means you find a way to climb higher, where the fruit may be present but out of your present reach.

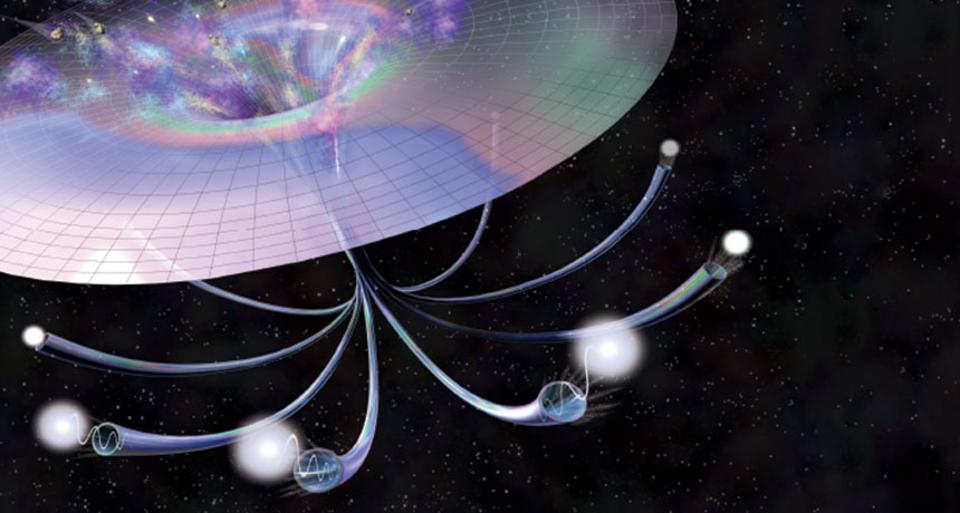

This might also include expanding our searches for extraterrestrial intelligence. While most searches focus on far-reaching radio transmissions, it’s possible that alien civilizations who seek to communicate across the stars and galaxies will rely on a different technology. Perhaps we should be monitoring the tails of water maser lines or the 21 cm spin-flip transition of hydrogen. Perhaps we should be looking for patterns in pulsar signals, including correlating signals between pulsars. Perhaps we should even be looking for extraterrestrial intelligences in gravitational wave signals that we have yet to discover. Wherever a signal can be encoded by a sufficiently advanced species, humanity should be looking and listening.

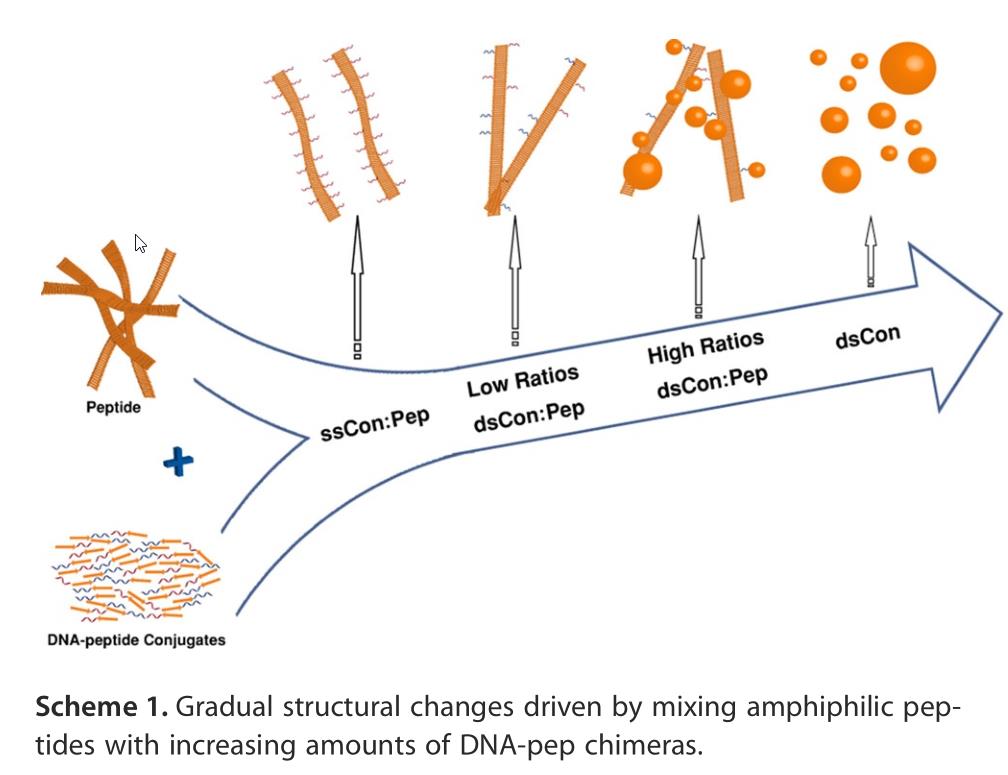

There are also avenues to explore that won’t reveal alien life, but could help us understand how it arose and arises throughout the Universe. We can recreate the atmospheric conditions found on other worlds or even as they were on Earth long ago in the lab, with an eye toward recreating the origin of life-from-non-life. We can continue exploring the possibility of having nucleic acids (RNAs, DNAs, even PNAs: peptide-based nucleic acids) coevolve with peptides in an early prebiotic environment: perhaps the most compelling candidate for how life first arose on Earth.

The rewards of finding out that we’re not alone in the Universe would be immeasurable. Perhaps we could learn how to survive the great environmental threats that face us: hazardous asteroids, a changing climate, or violent space weather events. Perhaps there are even more important lessons to be learned about how to overcome our own insufficiencies as human beings: the great challenge of moving beyond our primal nature. Perhaps other civilizations have success stories to offer us, recounting how, in the early days of their technological infancy, they overcame such issues as:

- overconsumption, where they devoured their planet’s resources beyond the point of sustainability,

- short-term thinking, where they addressed the immediate, urgent problems at the expense of long-term ones that threatened their existence,

- or the emergence of disease, famine, pestilence, and ecological collapse, resulting from the global changes wrought by a post-industrial society.

Our impulses toward greed, plunder, and self-gratification may not be unique, and there may be more experienced, wiser species out there that have figured out solutions that elude us today. Perhaps, if we’re lucky, they may have lessons waiting-in-the-wings for us that could guide us toward a more successful future.

Many of us can imagine two different futures unfolding for the enterprise of human civilization. There’s the one we should strive to avoid: where we resort to infighting, squabbling over the limited resources of our world, descending into ideologically-driven wars and ensuring our own eventual destruction. If we never find life beyond Earth — never find anyone else to communicate with, exchange information and culture with, and to give us hope that there’s a future for humanity out there among the stars — perhaps extinction will indeed be our most likely outcome.

But there’s another possible outcome for humanity: a future where we come together collectively to face the gargantuan challenges facing humans, the environment, planet Earth, and our long-term future. Perhaps the discovery of life beyond Earth — and potentially, of one or more intelligent, spacefaring, extraterrestrial civilizations — might give us not only the guidance and knowledge we need to survive our growing pains, but something far grander than any terrestrial achievements to hope for. Until that day arrives, we must make do with the knowledge that, at present, we have only one another to extend our kindnesses and compassion to.

September 9th 2022

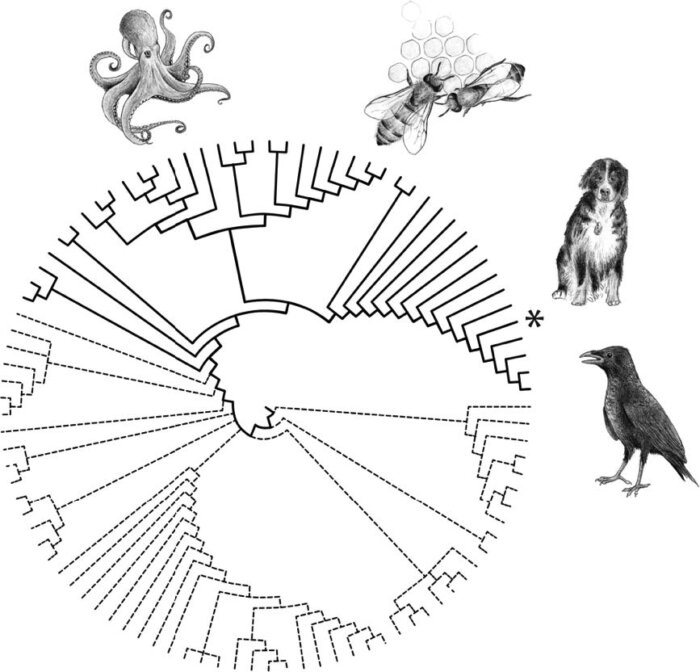

How Darwin’s ‘Descent of Man’ Holds Up Over 150 Years After Publication

Questions still swirl around the author’s theories about sexual selection and the evolution of minds and morals.

- Dan Falk

Photo by dan_wrench/Getty Images

Charles Darwin’s On the Origin of Species rattled Victorian readers in 1859, even though it said almost nothing about how the idea of evolution applied to human beings. A dozen years later, in 1871, he tackled that subject head-on. In The Descent of Man, and Selection in Relation to Sex, published over 150 years ago, Darwin argued forcefully that all creatures were subject to the same natural laws, and that humans had evolved over countless eons, just as other animals had. “Man,” he wrote, “still bears in his bodily frame the indelible stamp of his lowly origin.”

In Descent, Darwin details a theory that he calls “sexual selection”—the idea that, in many species, males battle other males for access to females, while in other species females choose the biggest or most attractive males to bond with. The male-combat theory would explain, for example, the development of a bull’s horns, or a moose’s antlers, while the quintessential example of “female choice” is seen in peahens, which, Darwin argued, prefer to mate with peacocks having the biggest, most colorful tails. For Darwin, sexual selection was just as important as natural selection, which he had outlined in Origin—the idea that organisms with favorable traits are more likely to reproduce, thus passing on those traits to their offspring. Both mechanisms helped to explain how species evolved over time.

“I think for Darwin, sexual selection was what connected humans with non-human animals,” says Ian Hesketh, a historian of science at the University of Queensland in Australia. “It provided the continuity in Darwin’s system, from animals to humans.”

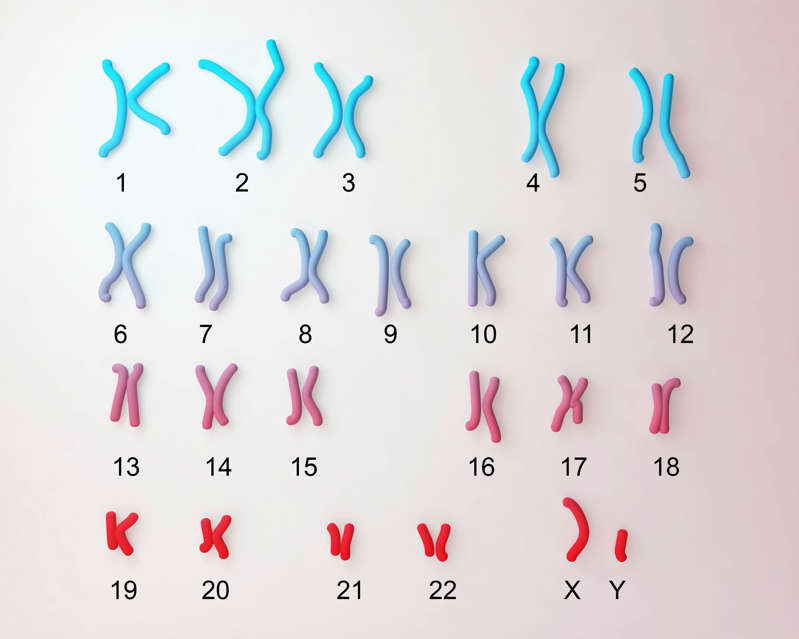

In Descent, Darwin illustrates this continuity by noting the similarities of the human body to those of our primate cousins and to other mammals, focusing on anatomical structure—such as the similarity of their skeletons—and also on embryology—the embryos of related animals can be almost indistinguishable.

Descent, like Origin, became a huge bestseller. As writer Cyril Aydon put it in A Brief Guide to Charles Darwin: His Life and Times: “With Darwin’s name on the cover, and monkeys and sex on the inside pages, it was a publisher’s dream.” Descent is still seen as a landmark in the history of the life sciences—though, inevitably, some passages strike modern readers as offensive, especially where Darwin speculates on issues of race and on gender roles. He also tackled difficult problems that continue to spark debate today, such as the evolution of minds and of moral beliefs.

Many aspects of sexual selection seemed implausible to Darwin’s contemporaries. For example, the theory attempted to explain the development of so-called secondary sexual characteristics, such as the peacock’s tail or other traits that made a male animal more appealing to a female. If these traits are selected by the female, they can develop to extremes over the course of time—at which point they may hamper, rather than aid in, survival. For example, an overly-colorful tail could attract predators. Darwin’s argument also seemed to suggest that animals possessed a sophisticated ability to rate the attractiveness of each potential mate with a kind of check-list of criteria.

“The most contentious aspect [of the book] has to do with how it relates to the development of coloration and what he called ‘charms’—anything that had to do with wooing the female,” says Hesketh. “No one seemed to be on board with that, because it suggested that animals had an aesthetic sense, and that they were making mate-choices based on really minuscule observations.”

The two aspects of sexual selection were not equally well received: The male-combat idea, which casts males as aggressive and females as passive, seemed plausible enough to Darwin’s contemporaries, as it meshed with the prevailing prejudices of the time. But the other part of the theory, in which females appear to have the power of choice by selecting from among an array of prospective males, struck many as a radical notion. For humans, however, Darwin switched it up; in our own species, he argued, it was the male that did the choosing.

“The argument here is that males have ‘seized the power of selection’ from females, because they’re more powerful, in body and mind, than women,” says Evelleen Richards, a historian at the University of Sydney and the author of Darwin and the Making of Sexual Selection. In Descent, Darwin writes of “man attaining a higher eminence in whatever he takes up than woman can attain—whether requiring deep thought, reason or imagination, or merely the use of the senses and hands.” He added, “Thus man has ultimately become superior to woman.”

Passages like that reveal Darwin’s “androcentric bias,” as Richards puts it, noting that his views on sex and sex differences were very much derived from a male perspective and were a product of Victorian society. To complicate matters, Darwin’s views about sex were intimately tied up with his theories about race. A much-debated question in Darwin’s time was the puzzling diversity of humanity. Did the various races emerge independently of one another? That view, known as “polygenism,” was popular among members of the Anthropological Society of London, which Richards describes as an “out-and-out racist” organization. The Society supported the Confederacy in the U.S. Civil War, and its leader, a speech therapist named James Hunt, declared that we “know that the Races of Europe have now much in their mental and moral nature which the races of Africa have not got.” Others, including Darwin, argued that all races shared a common origin, a view known as “monogenism.” But monogenists still had to explain what caused the diversity seen today. This is where sexual selection comes in. Darwin argued that differing judgements of attractiveness held the key; he believed that men of one tribe or group were naturally most attracted to members of their own tribe. He wrote that “the differences between the tribes, at first very slight, would gradually and inevitably be increased to a greater and greater degree.” Few of Darwin’s readers found this plausible, says Richards, because they imagined European ideals of beauty to be universal; they simply couldn’t imagine, for example, “that black skin could be attractive to anyone,” she says.

All of this, Richards says, highlights the complexity of Darwin’s views on race. In contrast to many of his contemporaries, he believed in “the brotherhood of man,” as Richards puts it, and found slavery repugnant—and yet he believed, as most Victorians did, in a racial hierarchy with Europeans at the top. Even so, some of his ideas—like the notion of Africans being attracted to Africans—struck his contemporaries as too radical.

Perhaps the most difficult puzzle for Darwin was the remarkable cognitive power of humans, and, especially, the human capacity for moral reasoning. Some of Darwin’s contemporaries, notably Alfred Russel Wallace, saw the human mind as evidence that a divine intelligence was guiding evolution. Wallace, who co-discovered natural selection, came to embrace spiritualism in his later years. Historians see Descent largely as a rebuttal to Wallace, that is, as an attempt to set forth a purely naturalistic explanation for the mind and for moral behavior. While the details were far from clear, Darwin saw minds and morals as rooted, ultimately, in biology. For example, he argues that a primitive kind of moral sense can be seen in certain animals—those “endowed with the social instincts” and which “take pleasure in one another’s company, warn one another of danger, defend and aid one another in many ways.” As such instinctive behavior is “highly beneficial to the species, they have in all probability been acquired through natural selection.”

Unlike Origin, which was immediately hailed as a groundbreaking scientific work, Descent has had a checkered history. The idea of sexual selection, in particular, languished in the decades following its publication. That’s partly because of lingering doubts over animals’ aesthetic sense and the idea of female choice, and partly because Darwin was never able to convince his old allies—people like Wallace and also Thomas Henry Huxley—that sexual selection was an important facet of evolution. Others, meanwhile, were uncomfortable with naturalistic accounts of minds and morals. “By the turn of the century, sexual selection, for all intents and purposes, is basically dead,” says Henry-James Meiring, a PhD student working with Hesketh at Queensland.

In the 20th century, however, it began to make a comeback. Biologists absorbed many of the ideas in Descent into the so-called modern synthesis that combined Darwin’s theory of evolution with the new science of genetics; later, aspects of sexual selection received support from evolutionary theories of social behavior. By the 1970s, sexual selection “starts making a comeback in modern science, and in some form has continued ever since,” says Meiring. Evelleen Richards adds that sexual selection has only recently “come back on the agenda as a scientific fact in its own right.”

On the bigger picture—the unity of all living things—Darwin was on the right track. That unity, he reasoned, applies not just to bodies but also to minds. True, scientists continue to debate the question of exactly how the brain (a biological organ) gives rise to a mind (with its intangible mental processes), but it is clear that brains are what make minds possible, and these evolved just as our bodies did. In this sense we’re no different from our primate cousins; Darwin argued that the cognitive powers of human beings differ from those of apes in degree, not in kind. Darwin’s thinking on these problems today “enjoys broad support in disciplines such as neuroscience and evolutionary psychology,” says Meiring.

Other aspects of Darwin’s thinking in Descent continue to spark debate. Some scholars have criticized attempts to explain social behavior in terms of biology as overly reductionist, and many facets of evolutionary psychology, in particular, have faced skepticism in recent years. For example, some anthropologists argue that we don’t know enough about the environment in which early humans lived, or the advantages that particular behavioral traits conferred, to be certain that behaviors observed today are the result of early conditions. And puzzles persist over the origins of language, music and religion.

“Darwin, like any other scientific figure of the past, got some things right and got a lot of things wrong,” says Meiring. “And his own biases around gender and race had an impact on the way that he theorized and thought about science.” In Descent, he says, Darwin grappled with “things that we are still arguing about, and that we have still not resolved. I think that’s possibly its greatest legacy.”

Dan Falk is a science journalist based in Toronto. His books include The Science of Shakespeare and In Search of Time.

Can We Time Travel? A Theoretical Physicist Provides Some Answers

Theories exploring the possibility of time travel rely on the existence of types of matter and energy that we do not understand yet.

- Peter Watson

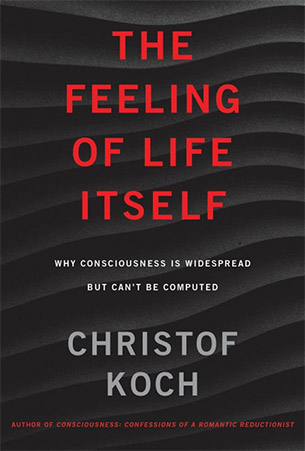

Our curiosity about time travel is thousands of years old. Photo by Shutterstock

Time travel makes regular appearances in popular culture, with innumerable time travel storylines in movies, television and literature. But it is a surprisingly old idea: one can argue that the Greek tragedy Oedipus Rex, written by Sophocles over 2,500 years ago, is the first time travel story.

But is time travel in fact possible? Given the popularity of the concept, this is a legitimate question. As a theoretical physicist, I find that there are several possible answers to this question, not all of which are contradictory.

The simplest answer is that time travel cannot be possible because if it was, we would already be doing it. One can argue that it is forbidden by the laws of physics, like the second law of thermodynamics or relativity. There are also technical challenges: it might be possible but would involve vast amounts of energy.

There is also the matter of time-travel paradoxes; we can — hypothetically — resolve these if free will is an illusion, if many worlds exist or if the past can only be witnessed but not experienced. Perhaps time travel is impossible simply because time must flow in a linear manner and we have no control over it, or perhaps time is an illusion and time travel is irrelevant.

Some time travel theories suggest that one can observe the past like watching a movie, but cannot interfere with the actions of people in it. Photo by Rodrigo Gonzales/Unsplash

Laws of Physics

Since Albert Einstein’s theory of relativity — which describes the nature of time, space and gravity — is our most profound theory of time, we would like to think that time travel is forbidden by relativity. Unfortunately, one of his colleagues from the Institute for Advanced Study, Kurt Gödel, invented a universe in which time travel was not just possible, but the past and future were inextricably tangled.

We can actually design time machines, but most of these (in principle) successful proposals require negative energy, or negative mass, which does not seem to exist in our universe. If you drop a tennis ball of negative mass, it will fall upwards. This argument is rather unsatisfactory, since it explains why we cannot time travel in practice only by involving another idea — that of negative energy or mass — that we do not really understand.

Mathematical physicist Frank Tipler conceptualized a time machine that does not involve negative mass, but requires more energy than exists in the universe.

Time travel also violates the second law of thermodynamics, which states that entropy or randomness must always increase. Time can only move in one direction — in other words, you cannot unscramble an egg. More specifically, by travelling into the past we are going from now (a high entropy state) into the past, which must have lower entropy.

This argument originated with the English cosmologist Arthur Eddington, and is at best incomplete. Perhaps it stops you travelling into the past, but it says nothing about time travel into the future. In practice, it is just as hard for me to travel to next Thursday as it is to travel to last Thursday.

Resolving Paradoxes

There is no doubt that if we could time travel freely, we would run into paradoxes. The best known is the “grandfather paradox”: one could hypothetically use a time machine to travel to the past and murder their grandfather before their father’s conception, thereby eliminating the possibility of their own birth. Logically, you cannot both exist and not exist.

Kurt Vonnegut’s anti-war novel Slaughterhouse-Five, published in 1969, describes how to evade the grandfather paradox. If free will simply does not exist, it is not possible to kill one’s grandfather in the past, since he was not killed in the past. The novel’s protagonist, Billy Pilgrim, can only travel to other points on his world line (the timeline he exists in), but not to any other point in space-time, so he could not even contemplate killing his grandfather.

The universe in Slaughterhouse-Five is consistent with everything we know. The second law of thermodynamics works perfectly well within it and there is no conflict with relativity. But it is inconsistent with some things we believe in, like free will — you can observe the past, like watching a movie, but you cannot interfere with the actions of people in it.

Could we allow for actual modifications of the past, so that we could go back and murder our grandfather, or Hitler? There are several multiverse theories that suppose that there are many timelines for different universes. This is also an old idea: in Charles Dickens’ A Christmas Carol, Ebenezer Scrooge experiences two alternative timelines, one of which leads to a shameful death and the other to happiness.

Time Is a River

Roman emperor Marcus Aurelius wrote that:

“Time is like a river made up of the events which happen, and a violent stream; for as soon as a thing has been seen, it is carried away, and another comes in its place, and this will be carried away too.”

We can imagine that time does flow past every point in the universe, like a river around a rock. But it is difficult to make the idea precise. A flow is a rate of change — the flow of a river is the amount of water that passes a specific length in a given time. Hence if time is a flow, it is at the rate of one second per second, which is not a very useful insight.

Theoretical physicist Stephen Hawking suggested that a “chronology protection conjecture” must exist, an as-yet-unknown physical principle that forbids time travel. Hawking’s concept originates from the idea that we cannot know what goes on inside a black hole, because we cannot get information out of it. But this argument is redundant: we cannot time travel because we cannot time travel!

Researchers are investigating a more fundamental theory, where time and space “emerge” from something else. This is referred to as quantum gravity, but unfortunately it does not exist yet.

So is time travel possible? Probably not, but we don’t know for sure!

Peter Watson is an emeritus professor of physics at Carleton University.

August 28th 2022

The Big Bang no longer means what it used to

As we gain new knowledge, our scientific picture of how the Universe works must evolve. This is a feature of the Big Bang, not a bug.

Key Takeaways

- The idea that the Universe had a beginning, or a “day without a yesterday” as it was originally known, goes all the way back to Georges Lemaître in 1927.

- Although it’s still a defensible position to state that the Universe likely had a beginning, that stage of our cosmic history has very little to do with the “hot Big Bang” that describes our early Universe.

- Although many laypersons (and even a minority of professionals) still cling to the idea that the Big Bang means “the very beginning of it all,” that definition is decades out of date. Here’s how to get caught up.


If there’s one hallmark inherent to science, it’s that our understanding of how the Universe works is always open to revision in the face of new evidence. Whenever our prevailing picture of reality — including the rules it plays by, the physical contents of a system, and how it evolved from its initial conditions to the present time — gets challenged by new experimental or observational data, we must open our minds to changing our conceptual picture of the cosmos. This has happened many times since the dawn of the 20th century, and the words we use to describe our Universe have shifted in meaning as our understanding has evolved.

Yet, there are always those who cling to the old definitions, much like linguistic prescriptivists, who refuse to acknowledge that these changes have occurred. But unlike the evolution of colloquial language, which is largely arbitrary, the evolution of scientific terms must reflect our current understanding of reality. Whenever we talk about the origin of our Universe, the term “the Big Bang” comes to mind, but our understanding of our cosmic origins has evolved tremendously since the idea that our Universe even had an origin, scientifically, was first put forth. Here’s how to resolve the confusion and bring you up to speed on what the Big Bang originally meant versus what it means today.

The first time the phrase “the Big Bang” was uttered was over 20 years after the idea was first described. In fact, the term itself comes from one of the theory’s greatest detractors: Fred Hoyle, who was a staunch advocate of the rival idea of a Steady-State cosmology. In 1949, he appeared on BBC radio and advocated for what he called the perfect cosmological principle: the notion that the Universe was homogeneous in both space and time, meaning that any observer not only anywhere but anywhen would perceive the Universe to be in the same cosmic state. He went on to deride the opposing notion as a “hypothesis that all matter of the universe was created in one Big Bang at a particular time in the remote past,” which he then called “irrational” and claimed to be “outside science.”

But the idea, in its original form, wasn’t simply that all of the Universe’s matter was created in one moment in the finite past. That notion, derided by Hoyle, had already evolved from its original meaning. Originally, the idea was that the Universe itself, not just the matter within it, had emerged from a state of non-being in the finite past. And that idea, as wild as it sounds, was an inevitable but difficult-to-accept consequence of the new theory of gravity put forth by Einstein back in 1915: General Relativity.

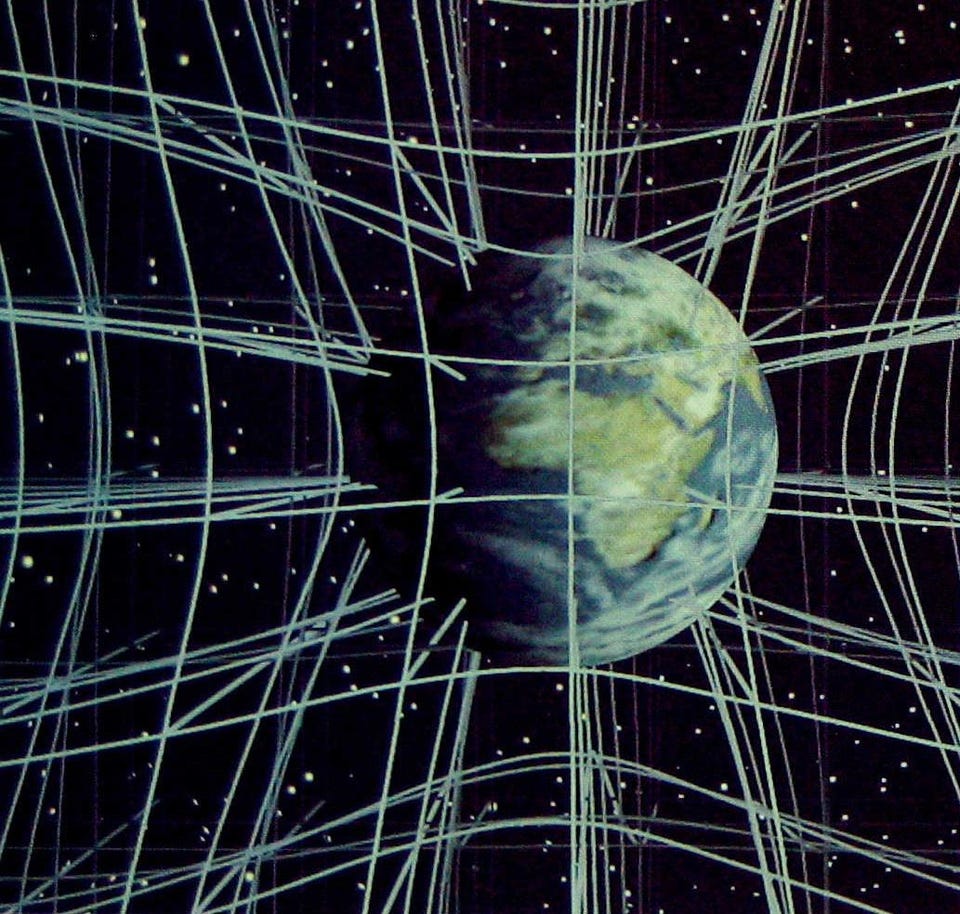

When Einstein first cooked up the general theory of relativity, our conception of gravity forever shifted from the prevailing notion of Newtonian gravity. Under Newton’s laws, the way that gravitation worked was that any and all masses in the Universe exerted a force on one another, instantaneously across space, in direct proportion to the product of their masses and inversely proportional to the square of the distance between them. But in the aftermath of his discovery of special relativity, Einstein and many others quickly recognized that there was no such thing as a universally applicable definition of what “distance” was or even what “instantaneously” meant with respect to two different locations.
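Written as a formula, the Newtonian rule described above takes the familiar inverse-square form. The symbols are the standard textbook ones rather than notation from this article: $F$ is the force between two masses $m_1$ and $m_2$, $r$ is the distance between them, and $G$ is Newton's gravitational constant.

$$
F = G\,\frac{m_1 m_2}{r^2}
$$

Both the distance $r$ and the instant at which the force is supposed to act depend on the observer once special relativity is taken into account, which is why this formula could not be the final word.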

With the introduction of Einsteinian relativity (the notion that observers in different frames of reference would all have their own unique, equally valid perspectives on what distances between objects were and how the passage of time worked), it followed almost immediately that the previously absolute concepts of “space” and “time” were woven together into a single fabric: spacetime. All objects in the Universe moved through this fabric, and the task for a novel theory of gravity would be to explain how not just masses, but all forms of energy, shaped this fabric that underpinned the Universe itself.

Although the laws that governed how gravitation worked in our Universe were put forth in 1915, the critical information about how our Universe was structured had not yet come in. While some astronomers favored the notion that many objects in the sky were actually “island Universes” that were located well outside the Milky Way galaxy, most astronomers at the time thought that the Milky Way galaxy represented the full extent of the Universe. Einstein sided with this latter view, and — thinking the Universe was static and eternal — added a special type of fudge factor into his equations: a cosmological constant.


Although it was mathematically permissible to make this addition, Einstein did so because, without one, the laws of General Relativity would ensure that a Universe that was evenly, uniformly distributed with matter (which ours seemed to be) would be unstable against gravitational collapse. In fact, it was very easy to demonstrate that any initially uniform distribution of motionless matter, regardless of shape or size, would inevitably collapse into a singular state under its own gravitational pull. By introducing this extra term of a cosmological constant, Einstein could tune it so that it would balance out the inward pull of gravity by proverbially pushing the Universe out with an equal and opposing action.

Edwin Hubble’s original plot of galaxy distances versus redshift (left), establishing the expanding Universe, versus a more modern counterpart from approximately 70 years later (right). In agreement with both observation and theory, the Universe is expanding, and the slope of the line relating distance to recession speed is a constant.

Two developments — one theoretical and one observational — would quickly change this early story that Einstein and others had told themselves.

- In 1922, Alexander Friedmann worked out, fully, the equations that governed a Universe that was isotropically (the same in all directions) and homogeneously (the same in all locations) filled with any type of matter, radiation, or other form of energy. He found that such a Universe would never remain static, not even in the presence of a cosmological constant, and that it must either expand or contract, dependent on the specifics of its initial conditions.

- In 1923, Edwin Hubble became the first to determine that the spiral nebulae in our skies were not contained within the Milky Way, but rather were located many times farther away than any of the objects that comprised our home galaxy. The spirals and ellipticals found throughout the Universe were, in fact, their own “island Universes,” now known as galaxies. Moreover, as Vesto Slipher had previously observed, the vast majority of them appeared to be moving away from us at remarkably rapid speeds.
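For readers who want the formulas behind these two developments, here is a sketch in standard textbook notation; none of these symbols appear in the original article. For a homogeneous, isotropic Universe with scale factor $a(t)$, mass density $\rho$, spatial curvature $k$, and cosmological constant $\Lambda$, Friedmann's result is usually written as

$$
\left(\frac{\dot a}{a}\right)^{2} = \frac{8\pi G}{3}\,\rho \;-\; \frac{k c^{2}}{a^{2}} \;+\; \frac{\Lambda c^{2}}{3}
$$

The left-hand side is the square of the expansion rate $H = \dot a / a$. The observational counterpart is the linear relation between a galaxy's recession speed $v$ and its distance $d$, where $H_0$ is the value of $H$ today:

$$
v = H_0\, d
$$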

In 1927, Georges Lemaître became the very first person to put these pieces of information together, recognizing that the Universe today is expanding, and that if things are getting farther apart and less dense today, then they must have been closer together and denser in the past. Extrapolating this back all the way to its logical conclusion, he deduced that the Universe must have expanded to its present state from a single point-of-origin, which he called either the “cosmic egg” or the “primeval atom.”

This was the original notion of what would grow into the modern theory of the Big Bang: the idea that the Universe had a beginning, or a “day without yesterday.” It was not, however, generally accepted for some time. Lemaître originally sent his ideas to Einstein, who infamously dismissed Lemaître’s work by responding, “Your calculations are correct, but your physics is abominable.”

Despite the resistance to his ideas, however, Lemaître would be vindicated by further observations of the Universe. Many more galaxies would have their distances and redshifts measured, leading to the overwhelming conclusion that the Universe was and still is expanding, equally and uniformly in all directions on large cosmic scales. In the 1930s, Einstein conceded, referring to his introduction of the cosmological constant in an attempt to keep the Universe static as his “greatest blunder.”

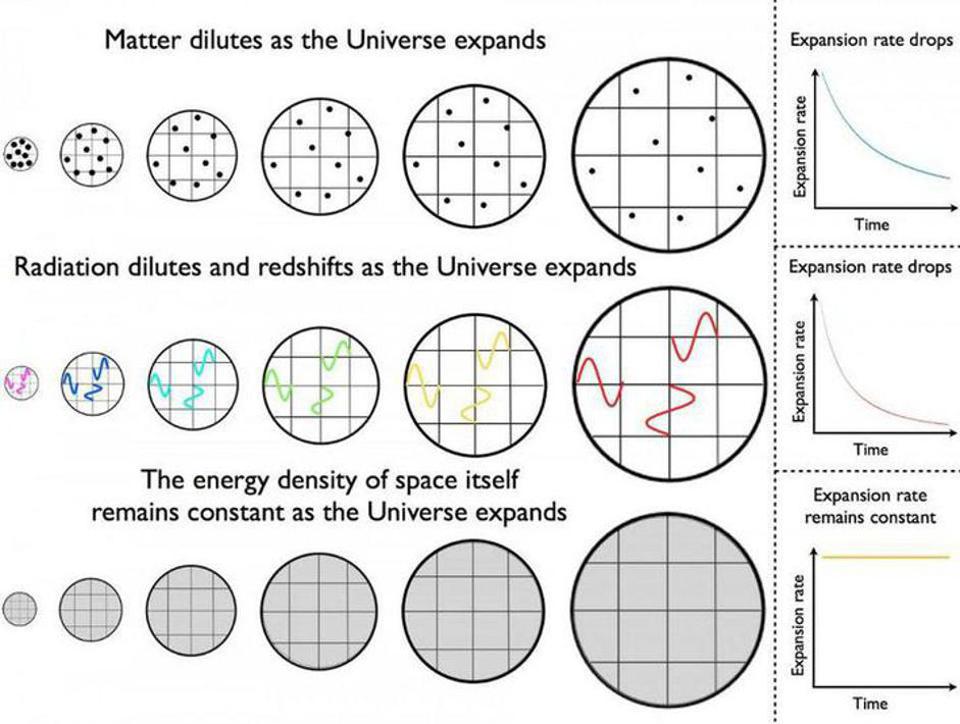

However, the next great development in formulating what we know as the Big Bang wouldn’t come until the 1940s, when George Gamow (perhaps not so coincidentally, an advisee of Alexander Friedmann) came along. In a remarkable leap forward, he recognized that the Universe was not only full of matter, but also radiation, and that radiation evolved somewhat differently from matter in an expanding Universe. This would be of little consequence today, but in the early stages of the Universe, it mattered tremendously.

While matter (both normal and dark) and radiation become less dense as the Universe expands owing to its increasing volume, dark energy, like the field energy during inflation, is a form of energy inherent to space itself. As new space gets created in the expanding Universe, the dark energy density remains constant. Note that individual quanta of radiation are not destroyed, but simply dilute and redshift, stretching to longer wavelengths and progressively lower energies as space expands.

Matter, Gamow realized, was made up of particles, and as the Universe expanded and the volume that these particles occupied increased, the number density of matter particles would drop in direct proportion to how the volume grew.

But radiation, while also made up of a fixed number of particles in the form of photons, had an additional property: the energy inherent to each photon is determined by the photon’s wavelength. As the Universe expands, the wavelength of each photon gets lengthened by the expansion, meaning that the amount of energy present in the form of radiation decreases faster than the amount of energy present in the form of matter in the expanding Universe.
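The two dilution rules can be made concrete with a short sketch. It assumes the standard textbook scalings: matter density falls as the inverse cube of the scale factor `a`, and radiation density as the inverse fourth power, with the extra factor coming from each photon's wavelength stretching. The function names and the normalization (both densities set to 1 at `a = 1`) are illustrative choices, not measured values.

```python
def matter_density(a, rho_m0=1.0):
    """Matter energy density: a fixed number of particles in a growing volume, so rho ~ a**-3."""
    return rho_m0 / a**3


def radiation_density(a, rho_r0=1.0):
    """Radiation energy density: volume dilution (a**-3) times photon redshift (a**-1)."""
    return rho_r0 / a**4


def radiation_to_matter_ratio(a, rho_m0=1.0, rho_r0=1.0):
    """Ratio of radiation to matter energy density at scale factor a."""
    return radiation_density(a, rho_r0) / matter_density(a, rho_m0)


if __name__ == "__main__":
    # Hypothetical normalization: equal densities at a = 1.
    for a in (0.01, 0.1, 1.0, 10.0):
        print(f"a = {a:>5}: radiation/matter = {radiation_to_matter_ratio(a):.3g}")
```

The ratio scales as `1 / a`, so radiation matters less and less as the Universe grows and more and more as one looks back to smaller scale factors.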

But in the past, when the Universe was smaller, the opposite would have been true. If we were to extrapolate backward in time, the Universe would have been in a hotter, denser, more radiation-dominated state. Gamow leveraged this fact to make three great, generic predictions about the young Universe.

- At some point, the Universe’s radiation was hot enough that every neutral atom would have been ionized by a quantum of radiation, and this leftover bath of radiation should still persist today at only a few degrees above absolute zero.

- At some even earlier point, it would have been too hot to even form stable atomic nuclei, and so an early stage of nuclear fusion should have occurred, where an initial mix of protons-and-neutrons should have fused together to create an initial set of atomic nuclei: an abundance of elements that predates the formation of atoms.

- And finally, this means that there would be some point in the Universe’s history, after atoms had formed, where gravitation pulled this matter together into clumps, leading to the formation of stars and galaxies for the first time.

These three major points, along with the already-observed expansion of the Universe, form what we know today as the four cornerstones of the Big Bang. Although one was still free to extrapolate the Universe back to an arbitrarily small, dense state — even to a singularity, if you’re daring enough to do so — that was no longer the part of the Big Bang theory that had any predictive power to it. Instead, it was the emergence of the Universe from a hot, dense state that led to our concrete predictions about the Universe.

Over the 1960s and 1970s, as well as ever since, a combination of observational and theoretical advances unequivocally demonstrated the success of the Big Bang in describing our Universe and predicting its properties.

- The discovery of the cosmic microwave background and the subsequent measurement of its temperature and the blackbody nature of its spectrum eliminated alternative theories like the Steady State model.

- The measured abundances of the light elements throughout the Universe verified the predictions of Big Bang nucleosynthesis, while also demonstrating the need for fusion in stars to provide the heavy elements in our cosmos.

- And the farther away we look in space, the less grown-up and evolved galaxies and stellar populations appear to be, while the largest-scale structures like galaxy groups and clusters are less rich and abundant the farther back we look.

The Big Bang, as verified by our observations, accurately and precisely describes the emergence of our Universe, as we see it, from a hot, dense, almost-perfectly uniform early stage.

But what about the “beginning of time?” What about the original idea of a singularity, and an arbitrarily hot, dense state from which space and time themselves could have first emerged?

That’s a different conversation, today, than it was back in the 1970s and earlier. Back then, we knew that we could extrapolate the hot Big Bang back in time: back to the first fraction-of-a-second of the observable Universe’s history. Between what we could learn from particle colliders and what we could observe in the deepest depths of space, we had lots of evidence that this picture accurately described our Universe.

But at the absolute earliest times, this picture breaks down. There was a new idea — proposed and developed in the 1980s — known as cosmological inflation, that made a slew of predictions that contrasted with those that arose from the idea of a singularity at the start of the hot Big Bang. In particular, inflation predicted:

- A curvature for the Universe that was indistinguishable from flat, to the level of between 99.99% and 99.9999%; by comparison, a singularly hot Universe made no prediction at all.

- Equal temperatures and properties for the Universe even in causally disconnected regions; a Universe with a singular beginning made no such prediction.

- A Universe devoid of exotic high-energy relics like magnetic monopoles; an arbitrarily hot Universe would possess them.

- A Universe seeded with small-magnitude fluctuations that were almost, but not perfectly, scale invariant; a non-inflationary Universe produces large-magnitude fluctuations that conflict with observations.

- A Universe where 100% of the fluctuations are adiabatic and 0% are isocurvature; a non-inflationary Universe has no preference.

- A Universe with fluctuations on scales larger than the cosmic horizon; a Universe originating solely from a hot Big Bang cannot have them.

- And a Universe that reached a finite maximum temperature that’s well below the Planck scale; as opposed to one whose maximum temperature reached all the way up to that energy scale.

The first three were post-dictions of inflation; the latter four were predictions that had not yet been observed when they were made. On all of these counts, the inflationary picture has succeeded in ways that the hot Big Bang, without inflation, has not.

During inflation, the Universe must have been devoid of matter-and-radiation and instead contained some sort of energy (whether inherent to space or as part of a field) that didn’t dilute as the Universe expanded. This means that inflationary expansion, unlike expansion driven by matter-and-radiation, didn’t follow a power law that leads back to a singularity but rather was exponential in character. One of the fascinating aspects of this is that something that increases exponentially never reaches a singular beginning, even if you extrapolate it back to arbitrarily early times, even to a time where t → -∞.
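The contrast between the two kinds of expansion can be written out in standard textbook form; the symbols $a(t)$ for the scale factor and $H$ for a constant expansion rate are assumptions of this sketch, not notation from the article. A Universe dominated by radiation or by matter expands as a power law,

$$
a(t) \propto t^{1/2} \quad \text{(radiation)}, \qquad a(t) \propto t^{2/3} \quad \text{(matter)},
$$

and in both cases $a \to 0$ at the finite time $t = 0$. With an energy density that does not dilute, the expansion is instead exponential,

$$
a(t) = a_0\, e^{H (t - t_0)},
$$

which is positive for every finite $t$ and approaches zero only in the limit $t \to -\infty$.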

Now, there are many reasons to believe that the inflationary state wasn’t one that was eternal to the past, that there might have been a pre-inflationary state that gave rise to inflation, and that, whatever that pre-inflationary state was, perhaps it did have a beginning. There are theorems that have been proven and loopholes discovered to those theorems, some of which have been closed and some of which remain open, and this remains an active and exciting area of research.

But one thing is for certain.

Whether there was a singular, ultimate beginning to all of existence or not, it no longer has anything to do with the hot Big Bang that describes our Universe from the moment that:

- inflation ended,

- the hot Big Bang occurred,

- the Universe became filled with matter and radiation and more,

- and it began expanding, cooling, and gravitating,

eventually leading to the present day. There is still a minority of astronomers, astrophysicists and cosmologists who use “the Big Bang” to refer to this theorized beginning and emergence of time-and-space, but that is no longer a foregone conclusion, and it doesn’t have anything to do with the hot Big Bang that gave rise to our Universe. The original definition of the Big Bang has now changed, just as our understanding of the Universe has changed. If you’re still behind, that’s ok; the best time to catch up is always right now.

Additional recommended reading:

- Ask Ethan: Do we know why the Big Bang really happened? (evidence for cosmic inflation)

- Surprise: the Big Bang isn’t the beginning of the universe anymore (why a “singularity” is no longer necessarily a given)

August 22nd 2022

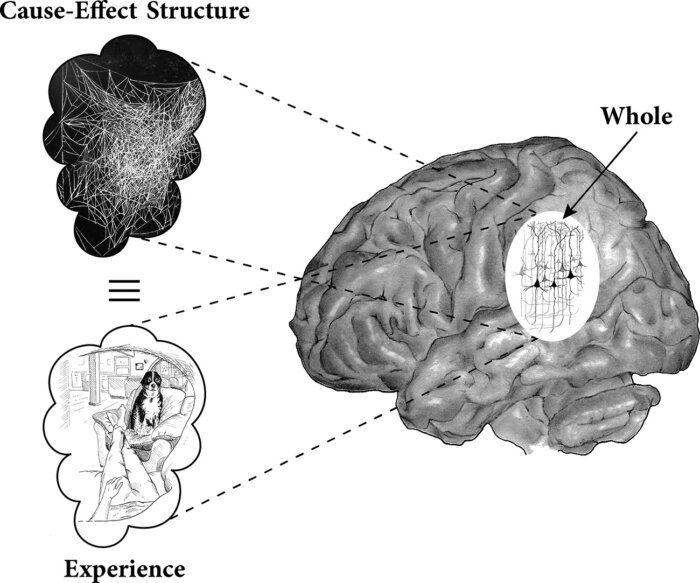

Quantum Physics Could Finally Explain Consciousness, Scientists Say

We asked a theoretical physicist, an experimental physicist, and a professor of philosophy to weigh in.

By Robert Lea Aug 16, 2022

During the 20th century, researchers pushed the frontiers of science further than ever before with great strides made in two very distinct fields. While physicists discovered the strange counter-intuitive rules that govern the subatomic world, our understanding of how the mind works burgeoned.

Yet, in the newly-created fields of quantum physics and cognitive science, difficult and troubling mysteries still linger, and occasionally entwine. Why do quantum states suddenly resolve when they’re measured, making it at least superficially appear that observation by a conscious mind has the capacity to change the physical world? What does that tell us about consciousness?

Popular Mechanics spoke to three researchers from different fields for their views on a potential quantum consciousness connection. Stop us if you’ve heard this one before: a theoretical physicist, an experimental physicist, and a professor of philosophy walk into a bar …

Quantum Physics and Consciousness Are Weird

Early quantum physicists noticed through the double-slit experiment that the act of attempting to measure photons as they pass through wavelength-sized slits to a detection screen on the other side changed their behavior.

This measurement attempt caused wave-like behavior to be destroyed, forcing light to behave more like a particle. While this experiment answered the question “is light a wave or a particle?” (it’s neither, with properties of both, depending on the circumstance), it left behind a more troubling question in its wake. What if the act of observation with the human mind is actually causing the world to manifest changes, albeit on an incomprehensibly small scale?

Renowned and reputable scientists such as Eugene Wigner, John Bell, and later Roger Penrose, began to consider the idea that consciousness could be a quantum phenomenon. Eventually, so did researchers in cognitive science (the scientific study of the mind and its processes), but for different reasons.

Ulf Danielsson, an author and a professor of theoretical physics at Uppsala University in Sweden, believes one of the reasons for the association between quantum physics and consciousness—at least from the perspective of cognitive science—is the fact that processes on a quantum level are completely random. This is different from the deterministic way in which classical physics proceeds, and means even the best calculations that physicists can come up with in regard to quantum experiments are mere probabilities.

This rationalization isn’t convincing to him, however. “I don’t think that there’s any reason to suppose from the cognitive science direction that quantum mechanics has anything to do with explaining consciousness,” Barrett continues.

From the quantum perspective, however, Barrett sees a clear reason why physicists first proposed the connection to consciousness.

“If it wasn’t for the quantum measurement problem, nobody, including the physicists involved in this early discussion, would be thinking that consciousness and quantum mechanics had anything to do with each other,” he says.

Superposition and Schrödinger’s Cat

At the heart of quantum “weirdness” and the measurement problem, there is a concept called “superposition.”

Because the possible states of a quantum system are described using wave mathematics — or more precisely, wave functions — a quantum system can exist in many overlapping states, or a superposition. The weird thing is, these states can be contradictory.
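In the standard textbook notation (not used in the original article), a two-state superposition is written as a weighted sum of the two alternatives, with complex weights $\alpha$ and $\beta$:

$$
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad |\alpha|^{2} + |\beta|^{2} = 1
$$

A measurement returns the first alternative with probability $|\alpha|^{2}$ and the second with probability $|\beta|^{2}$; before the measurement, the state is described by both terms at once.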

To see how counter-intuitive this can be, we can refer to one of history’s most famous thought experiments, the Schrödinger’s Cat paradox.

Devised by Erwin Schrödinger, the experiment sees an unfortunate cat placed in a box with what the physicist described as a “diabolical device” for an hour. The device releases a deadly poison if an atom in the box decays during that period. Because the decay of atoms is completely random, there is no way for the experimenter to predict if the cat is dead or alive until the hour is up and the box is opened.

August 16th 2022

Hormones in hair may reveal how chronically stressed you are — study

Long-term stress isn’t good for you, and your hair knows it.

Stress can do a number on your body — and that includes the hairs on your head. Stress releases hormones that affect hair pigmentation, turning your luscious locks gray or white. It can also make your hair fall out, triggering hair follicles to enter a “dormant” phase that results in hair loss. And now, according to a paper published Wednesday in the journal PLOS Global Public Health, researchers have discovered that stress levels are also reflected in how much of the hormone cortisol is stored in your hair.

Sloppy Use of Machine Learning Is Causing a ‘Reproducibility Crisis’ in Science

AI hype has researchers in fields from medicine to sociology rushing to use techniques that they don’t always understand—causing a wave of spurious results.


History shows civil wars to be among the messiest, most horrifying of human affairs. So Princeton professor Arvind Narayanan and his PhD student Sayash Kapoor got suspicious last year when they discovered a strand of political science research claiming to predict when a civil war will break out with more than 90 percent accuracy, thanks to artificial intelligence.

A series of papers described astonishing results from using machine learning, the technique beloved by tech giants that underpins modern AI. Applying it to data such as a country’s gross domestic product and unemployment rate was said to beat more conventional statistical methods at predicting the outbreak of civil war by almost 20 percentage points.

Yet when the Princeton researchers looked more closely, many of the results turned out to be a mirage. Machine learning involves feeding an algorithm data from the past that tunes it to operate on future, unseen data. But in several papers, researchers failed to properly separate the pools of data used to train and test their code’s performance, a mistake termed “data leakage” that results in a system being tested with data it has seen before, like a student taking a test after being provided the answers.
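The kind of mistake described above can be illustrated with a toy example. This is a minimal sketch on synthetic data, not the pipeline or the data the Princeton researchers examined: the labels are coin flips, so there is nothing real to learn, and the one-nearest-neighbor classifier and all function names are illustrative choices. Scoring the model on the rows it was trained on still produces a flattering number.

```python
import random


def make_dataset(n, n_features, rng):
    """Random features with coin-flip labels: there is no real signal to learn."""
    return [([rng.random() for _ in range(n_features)], rng.randint(0, 1))
            for _ in range(n)]


def predict_1nn(train, x):
    """Return the label of the training row closest to x (squared Euclidean distance)."""
    def dist(row):
        return sum((a - b) ** 2 for a, b in zip(row[0], x))
    return min(train, key=dist)[1]


def accuracy(train, test):
    """Fraction of test rows whose label the 1-NN model predicts correctly."""
    hits = sum(predict_1nn(train, x) == y for x, y in test)
    return hits / len(test)


rng = random.Random(0)  # fixed seed so the run is repeatable
data = make_dataset(400, 5, rng)
train, held_out = data[:200], data[200:]

leaky_accuracy = accuracy(train, train)      # scored on rows it was trained on
honest_accuracy = accuracy(train, held_out)  # scored on rows it has never seen

if __name__ == "__main__":
    print(f"leaky evaluation:  {leaky_accuracy:.2f}")
    print(f"honest evaluation: {honest_accuracy:.2f}")
```

The leaky evaluation reports perfect accuracy because every test row finds itself in the training set, while the held-out evaluation should land near chance, which is all that can be expected when the labels are random.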

“They were claiming near-perfect accuracy, but we found that in each of these cases, there was an error in the machine-learning pipeline,” says Kapoor. When he and Narayanan fixed those errors, in every instance they found that modern AI offered virtually no advantage.

That experience prompted the Princeton pair to investigate whether misapplication of machine learning was distorting results in other fields—and to conclude that incorrect use of the technique is a widespread problem in modern science.

AI has been heralded as potentially transformative for science because of its capacity to unearth patterns that may be hard to discern using more conventional data analysis. Researchers have used AI to make breakthroughs in predicting protein structures, controlling fusion reactors, and probing the cosmos.

Yet Kapoor and Narayanan warn that AI’s impact on scientific research has been less than stellar in many instances. When the pair surveyed areas of science where machine learning was applied, they found that other researchers had identified errors in 329 studies that relied on machine learning, across a range of fields.

Kapoor says that many researchers are rushing to use machine learning without a comprehensive understanding of its techniques and their limitations. Dabbling with the technology has become much easier, in part because the tech industry has rushed to offer AI tools and tutorials designed to lure newcomers, often with the goal of promoting cloud platforms and services. “The idea that you can take a four-hour online course and then use machine learning in your scientific research has become so overblown,” Kapoor says. “People have not stopped to think about where things can potentially go wrong.”

Excitement around AI’s potential has prompted some scientists to bet heavily on its use in research. Tonio Buonassisi, a professor at MIT who researches novel solar cells, uses AI extensively to explore novel materials. He says that while it is easy to make mistakes, machine learning is a powerful tool that should not be abandoned. Errors can often be ironed out, he says, if scientists from different fields develop and share best practices. “You don’t need to be a card-carrying machine-learning expert to do these things right,” he says.

Kapoor and Narayanan organized a workshop late last month to draw attention to what they call a “reproducibility crisis” in science that makes use of machine learning. They were hoping for 30 or so attendees but received registrations from over 1,500 people, a surprise that they say suggests issues with machine learning in science are widespread.

During the event, invited speakers recounted numerous examples of situations where AI had been misused, from fields including medicine and social science. Michael Roberts, a senior research associate at Cambridge University, discussed problems with dozens of papers claiming to use machine learning to fight Covid-19, including cases where data was skewed because it came from a variety of different imaging machines. Jessica Hullman, an associate professor at Northwestern University, compared problems with studies using machine learning to the phenomenon of major results in psychology proving impossible to replicate. In both cases, Hullman says, researchers are prone to using too little data, and misreading the statistical significance of results.

This popular anti-aging goo can help regrow muscle — study

How muscle stem cells activate themselves to repair damaged tissue has boggled scientists. Now we know hyaluronic acid might be the key.

Alec Smith, a regenerative medicine researcher at the University of Washington’s Institute for Stem Cell and Regenerative Medicine, who was not involved in the study, says that this finding may help individuals who lose and can’t grow back muscle due to extensive trauma, such as gunshot wounds or car accidents.

“The big goal in this area of muscle regeneration is to work out how we can enhance the process to allow people to overcome more significant wounds,” he explains to Inverse.

Here’s the background — Scientists already knew that muscle stem cells help out with the healing process and are critical to muscle regeneration. But they tend to arrive long after inflammation sets in, Jeff Dilworth, the study’s lead researcher and an epigeneticist at The Ottawa Hospital Research Institute in Canada, tells Inverse.

“What happens after muscle injury is that there’s inflammation, immune cells come into the injured muscle to remove damaged tissues,” he says. “Your muscle doesn’t want to waste making new muscle until you’ve got the inflammation resolved so stem cells don’t actually start working until almost two days after injury.”

During inflammation, immune cells like macrophages (which eat injured cells) spit out a deluge of chemicals called cytokines, which tell other cells what to do. To muscle stem cells, also called satellite cells, cytokines tell them not to wake up until most of the inflammatory storm is over.

The discovery — How exactly muscle stem cells can counter their chemical snooze button and activate themselves has boggled scientists for some time. Dilworth and his team stumbled across hyaluronic acid’s role when investigating an enzyme — a protein that acts as a biological catalyst to speed up chemical reactions in cells — that seemed to work only during inflammation.

Looking at mice and muscle cells grown in Petri dishes, the researchers found that in response to the influx of cytokines, the enzyme JMJD3 rouses muscle stem cells awake. More specifically, the enzyme cozies up to a gene involved in hyaluronic acid production called Has2.

“The stem cells, as it’s receiving those signals [from cytokines], is going to start making hyaluronic acid and coats itself,” explains Dilworth. “[This] sort of creates a force field or a barrier around [the muscle stem cells] that’s going to protect it from these negative signals coming from the cytokines.”

Once that happens, muscle stem cells covered in hyaluronic body armor amass at the site of injury in droves. They start replicating, expanding the workforce available to restore and make new muscle.

Why it matters — While this is a mouse study and needs studies involving humans to validate the results, “this is a really exciting finding,” says Anthony Atala, director of Wake Forest University’s Institute for Regenerative Medicine. He was not involved in the study.

“It’s basically advancing our knowledge of how muscle regenerates. It’s also advancing our knowledge of how to make sure we can overcome the challenges for regeneration.”

These challenges lie in tackling diseases that affect the muscles, like muscular dystrophy, which currently has no cure and where gene mutations interfere with healthy muscle growth, leading to a gradual, debilitating, and life-threatening muscle loss. Experiencing acute trauma, like a car accident, results in a similar issue.

Currently, there are efforts to hack or jumpstart the regeneration process using medication, small molecule therapies, and gene therapies — but these are all very much experimental.

“This [study] is a really valuable piece of the puzzle,” says Smith. “But we’re going to still need to understand more about how exactly the regeneration is activated to be able to move towards designing therapies that can enhance muscle regeneration.”

What’s next — Dilworth and his team are continuing their investigations to fully suss out the dynamic between hyaluronic acid, muscle stem cells, and inflammation and whether other chemical or cellular players are involved.

“We’re going to use [this research] as a tool for basic science to try and understand the complete pathway of communication between the immune system and the stem cell, which is poorly defined at the moment,” he says.

The researchers want to channel their findings into clinical applications, like finding ways to turn up hyaluronic acid production in the muscle stem cells of older people.

Until then, Dilworth does not recommend you slap some hyaluronic acid on that achy, bruised muscle and hope for a miracle. That definitely won’t work — better to save the tonic for your face instead (for now).

Walk into any cosmetics store and turn into the skincare aisle. Among all the lotions and potions, you will find it hard to avoid the reigning heavyweight of anti-aging ingredients: hyaluronic acid. This sugar molecule typically found in the body reportedly delivers some serious benefits, from ironing out wrinkles and plumping skin to safeguarding eye and joint health.

Now hyaluronic acid can add revitalizing muscles to its glowy resume, according to a paper published Thursday in Science. Researchers discovered that after muscle damage, hyaluronic acid swoops in and nudges muscle stem cells to get cracking on making repairs. The finding could lead to interventions that boost our bodies’ mending mechanisms, particularly in people who experience severe trauma or injury or those with a muscle-wasting condition like muscular dystrophy.

https://www.inverse.com/mind-body/hyaluronic-acid?utm_source=pocket-newtab-global-en-GB

May 18th 2022

Science confirms these parts of the Bible are true

Stars Insider

Like any other religious text in history, the Bible is open to interpretation, and science has not confirmed it to be factually accurate in every account. That, however, does not apply to every passage in the best-selling book of all time. In fact, some of its verses have been proved by science to be true.

Read more: Science confirms these parts of the Bible are true (msn.com)

April 29th 2022

Liver Care

If you’re struggling to get rid of those extra pounds or feel tired and low on energy, you could have an overworked liver. Here are 7 liver-damaging mistakes to watch out for:

1. Not drinking enough water — The golden rule is to drink at least 8 full glasses of water every day. According to Dr. Michele Neil-Sherwood from the Functional Medical Institute, “Dehydration can have a direct effect on our liver’s ability to properly detoxify our body.” When your liver can’t clear those toxins, the risk of illness increases.

2. Eating heavy meals or high glycemic foods before bed — Eating heavy meals before bed is a guaranteed way to make your liver work overtime. High glycemic foods are the worst culprits here. This includes foods like breads, white rice, sweets, even “healthy” fruits like watermelon and pineapple. Experts recommend avoiding these liver-taxing foods before bed. If you’re craving a snack, go for fresh carrots which help cleanse your liver.

3. Eating too many trans fats — Trans fats are harmful fats commonly found in prepackaged foods, where they help extend shelf life. They often show up as “partially hydrogenated oils” in the ingredient list. Consuming too many trans fats increases weight gain, packing more fat onto your liver and belly.

4. Eating too much sugar — Refined sugar and high-fructose corn syrup wreak havoc on your liver. Some studies suggest they can damage your liver just as much as alcohol, even if you are not overweight. Fructose is converted to fat in the body, which increases your risk of developing a fatty liver.

5. Not getting enough exercise — Getting a proper amount of exercise is important, even if you’re not overweight. Not only does exercise help you work up a good sweat, but it improves liver detoxification too. Even several brisk walks every week can pay huge benefits.

6. Consuming too much vitamin A — Out of this entire list, this one might surprise you the most! Vitamin A delivers many great benefits at normal doses, such as protecting your eyes and supporting a healthy immune system. But too much vitamin A is toxic to your liver. How much is too much? Generally, doses over 40,000 IU daily. Most people won’t have to worry about going overboard with vitamin A. However, if you take multiple vitamins and supplements that contain vitamin A, pay close attention to the total amount you’re consuming.

7. Taking the wrong herbal supplements — Certain herbal extracts, such as kava kava, can be harmful to your liver. That’s why taking the right nutrients is crucial for rejuvenating your liver and restoring a healthy metabolism.

Now there’s a new at-home breakthrough that detoxifies and rejuvenates your liver from the inside. Even better, it can ignite a healthy metabolism and could skyrocket energy in just 30 seconds every morning.

April 23rd 2022

Is Time Travel Possible?

The Short Answer: Although humans can’t hop into a time machine and go back in time, we do know that clocks on airplanes and satellites travel at a different speed than those on Earth.

We all travel in time! We travel one year in time between birthdays, for example. And we are all traveling in time at approximately the same speed: 1 second per second.

We typically experience time at one second per second. Credit: NASA/JPL-Caltech

NASA’s space telescopes also give us a way to look back in time. Telescopes help us see stars and galaxies that are very far away. It takes a long time for the light from faraway galaxies to reach us. So, when we look into the sky with a telescope, we are seeing what those stars and galaxies looked like a very long time ago.

However, when we think of the phrase “time travel,” we are usually thinking of traveling faster than 1 second per second. That kind of time travel sounds like something you’d only see in movies or science fiction books. Could it be real? Science says yes!

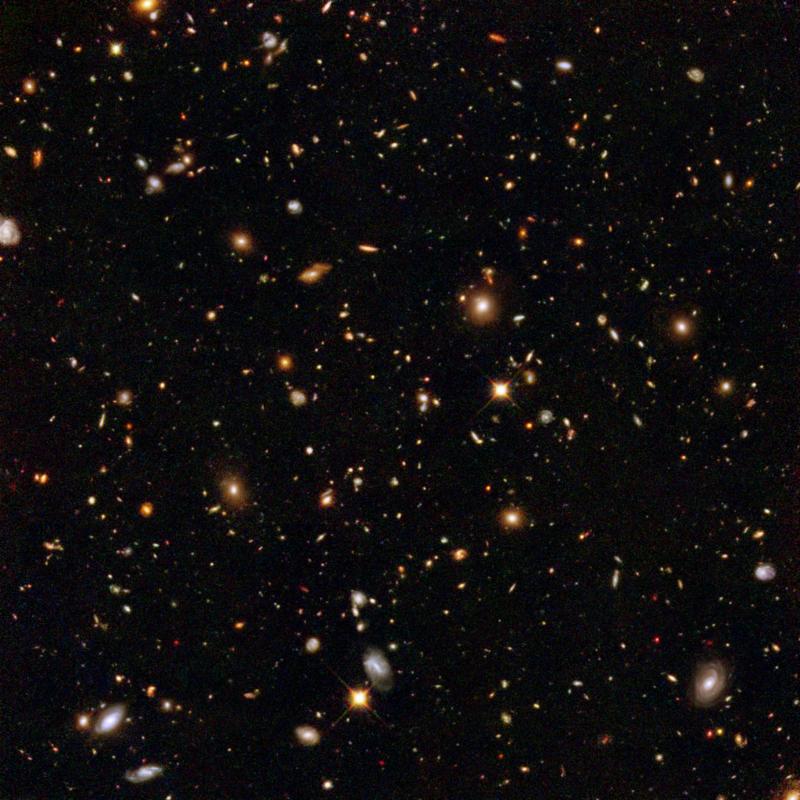

This image from the Hubble Space Telescope shows galaxies that are very far away as they existed a very long time ago. Credit: NASA, ESA and R. Thompson (Univ. Arizona)

How do we know that time travel is possible?

More than 100 years ago, a famous scientist named Albert Einstein came up with an idea about how time works. He called it relativity. This theory says that time and space are linked together. Einstein also said our universe has a speed limit: nothing can travel faster than the speed of light (186,000 miles per second).

Einstein’s theory of relativity says that space and time are linked together. Credit: NASA/JPL-Caltech

What does this mean for time travel? Well, according to this theory, the faster you travel, the slower you experience time. Scientists have done some experiments to show that this is true.

For example, there was an experiment that used two clocks set to the exact same time. One clock stayed on Earth, while the other flew in an airplane (going in the same direction Earth rotates).

After the airplane flew around the world, scientists compared the two clocks. The clock on the fast-moving airplane was slightly behind the clock on the ground. So, the clock on the airplane was traveling slightly slower in time than 1 second per second.
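
The size of the effect in the airplane example can be estimated with a short Python sketch. It applies only the special-relativity factor 1 - sqrt(1 - v²/c²) and ignores gravity and Earth's rotation, both of which mattered in the real experiment. The function name, the 250 m/s cruising speed, and the 48-hour flight time are illustrative assumptions, not figures from the article.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact, by definition)


def velocity_time_lag(speed_m_s: float, duration_s: float) -> float:
    """Seconds a moving clock falls behind a resting one over duration_s.

    Uses the special-relativity factor 1 - sqrt(1 - v^2/c^2), written with
    log1p/expm1 so the tiny result is not lost to floating-point rounding.
    """
    beta_squared = (speed_m_s / C) ** 2
    fractional_lag = -math.expm1(0.5 * math.log1p(-beta_squared))
    return fractional_lag * duration_s


if __name__ == "__main__":
    # Hypothetical flight: 250 m/s for 48 hours (assumed values, not from the article).
    lag = velocity_time_lag(250.0, 48 * 3600)
    print(f"Airborne clock lags by about {lag * 1e9:.0f} nanoseconds")
```

Under these assumptions the lag comes out at tens of nanoseconds, which is why the experiment needed very precise atomic clocks.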

Can we use time travel in everyday life?

We can’t use a time machine to travel hundreds of years into the past or future. That kind of time travel only happens in books and movies. But the math of time travel does affect the things we use every day.

For example, we use GPS satellites to help us figure out how to get to new places. (Check out our video about how GPS satellites work.) NASA scientists also use a high-accuracy version of GPS to keep track of where satellites are in space. But did you know that GPS relies on time-travel calculations to help you get around town?

GPS satellites orbit around Earth very quickly at about 8,700 miles (14,000 kilometers) per hour. This slows down GPS satellite clocks by a small fraction of a second (similar to the airplane example above).

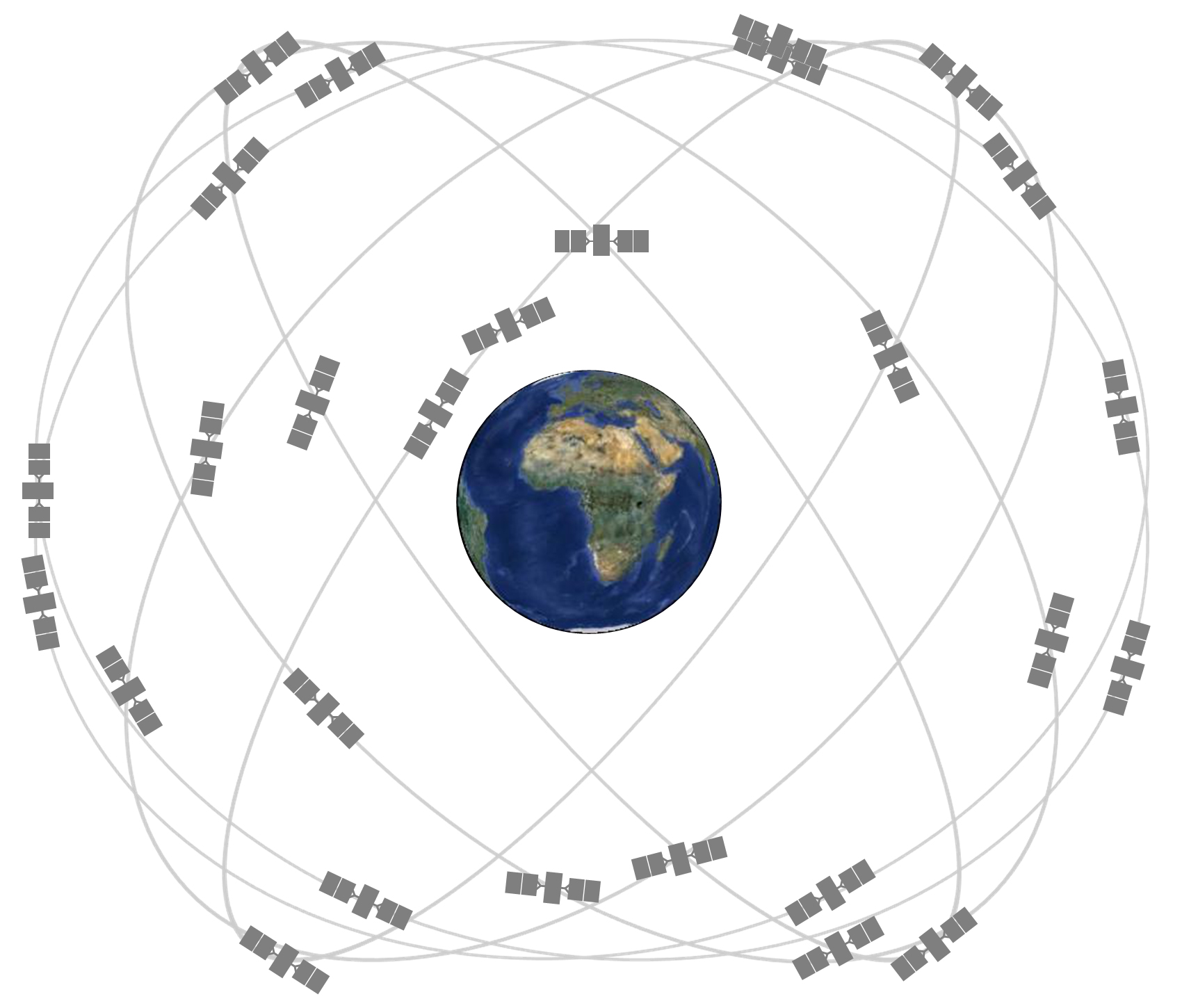

GPS satellites orbit around Earth at about 8,700 miles (14,000 kilometers) per hour. Credit: GPS.gov

However, the satellites are also orbiting Earth about 12,550 miles (20,200 km) above the surface. This actually speeds up GPS satellite clocks by a slightly larger fraction of a second.

Here’s how: Einstein’s theory also says that gravity curves space and time, causing the passage of time to slow down. High up where the satellites orbit, Earth’s gravity is much weaker. This causes the clocks on GPS satellites to run faster than clocks on the ground.

The combined result is that the clocks on GPS satellites experience time at a rate slightly faster than 1 second per second. Luckily, scientists can use math to correct these differences in time.
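
As a rough check on the two competing effects, the Python sketch below plugs the speed and altitude quoted above into the standard first-order formulas: v²/(2c²) for the slowdown from motion, and GM/c² × (1/R − 1/r) for the speedup from weaker gravity. The constants for Earth's radius and gravitational parameter, and the function name, are assumptions added for illustration. The sketch also treats the ground clock as stationary, which is a simplification.

```python
C = 299_792_458.0          # speed of light, m/s
GM_EARTH = 3.986004418e14  # Earth's gravitational parameter, m^3/s^2 (assumed constant)
EARTH_RADIUS_M = 6.371e6   # mean Earth radius, m (assumed ground-clock position)
SECONDS_PER_DAY = 86_400.0


def gps_clock_drift_per_day(speed_km_h: float, altitude_km: float):
    """Return (velocity_lag, gravity_gain, net_gain) in seconds per day."""
    speed_m_s = speed_km_h * 1000.0 / 3600.0
    orbit_radius_m = EARTH_RADIUS_M + altitude_km * 1000.0

    # Special relativity: a moving clock ticks slower by about v^2 / (2 c^2).
    velocity_lag = speed_m_s**2 / (2.0 * C**2) * SECONDS_PER_DAY

    # General relativity: a clock higher in Earth's gravity ticks faster
    # by about GM/c^2 * (1/R_ground - 1/r_orbit).
    gravity_gain = (
        (GM_EARTH / C**2)
        * (1.0 / EARTH_RADIUS_M - 1.0 / orbit_radius_m)
        * SECONDS_PER_DAY
    )

    return velocity_lag, gravity_gain, gravity_gain - velocity_lag


if __name__ == "__main__":
    # Speed and altitude as quoted in the article.
    lag, gain, net = gps_clock_drift_per_day(14_000, 20_200)
    print(f"slower from speed:   {lag * 1e6:.1f} microseconds/day")
    print(f"faster from gravity: {gain * 1e6:.1f} microseconds/day")
    print(f"net gain:            {net * 1e6:.1f} microseconds/day")
```

With these inputs the gravity term is several times larger than the velocity term, so the net result is a satellite clock that runs ahead by a few tens of microseconds per day.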

If scientists didn’t correct the GPS clocks, there would be big problems. GPS satellites wouldn’t be able to correctly calculate their position or yours. The errors would add up to a few miles each day, which is a big deal. GPS maps might think your home is nowhere near where it actually is!
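
The "few miles each day" figure follows from multiplying a timing error by the speed of light, since GPS measures distance by timing radio signals. The Python sketch below shows that arithmetic. The 38 microseconds per day used as input is a commonly quoted net drift for uncorrected GPS clocks and is an assumption here, not a number from the article. The function name is also hypothetical.

```python
C = 299_792_458.0          # speed of light, m/s
METERS_PER_MILE = 1609.344


def range_error_miles(clock_error_s: float) -> float:
    """Distance error, in miles, caused by a clock error of clock_error_s seconds."""
    return C * clock_error_s / METERS_PER_MILE


if __name__ == "__main__":
    # Assumed uncorrected drift of 38 microseconds after one day.
    one_day_error = range_error_miles(38e-6)
    print(f"Range error after one day: about {one_day_error:.1f} miles")
```

Because light covers roughly 300 meters every microsecond, even a drift this small grows into miles of error within a day.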