March 24th 2024

A physicist wants to change your perspective about our place in the universe

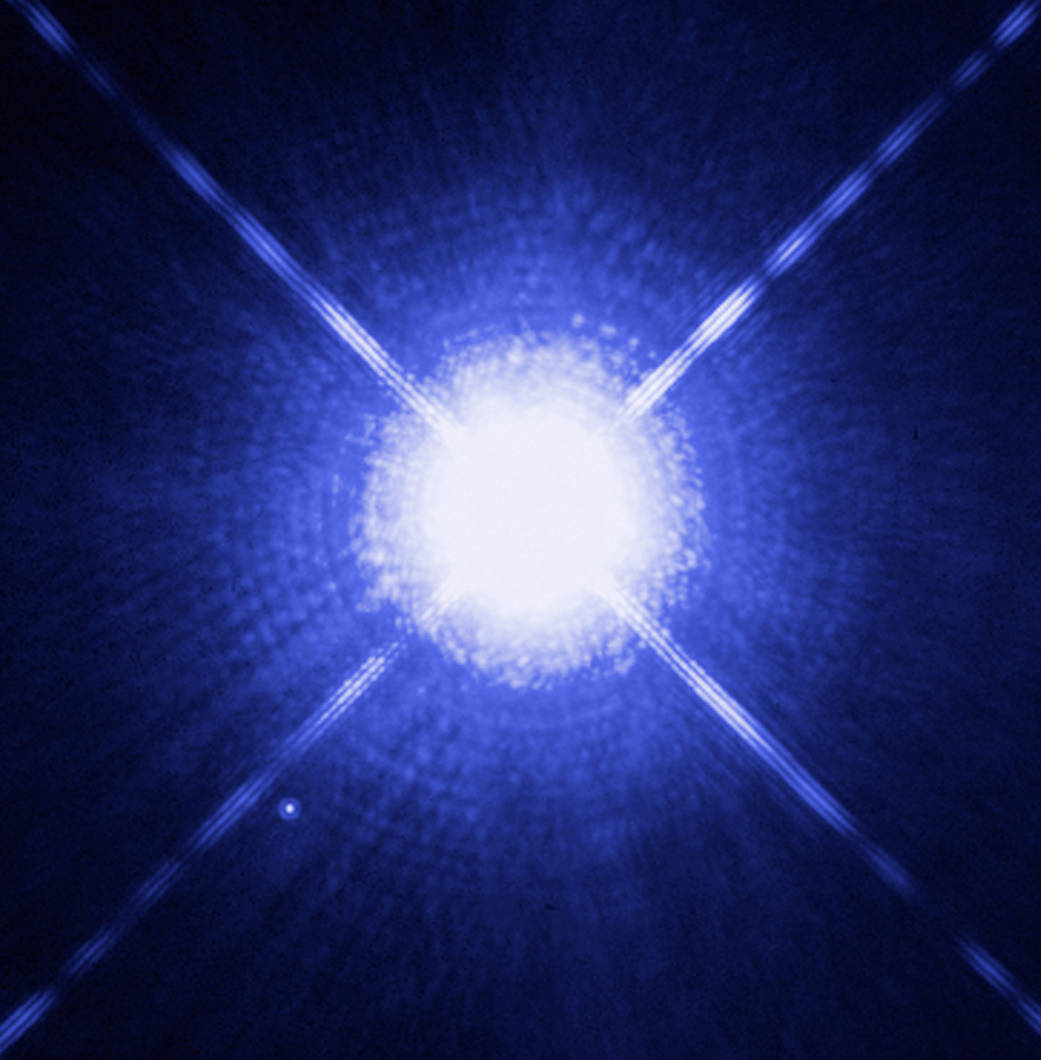

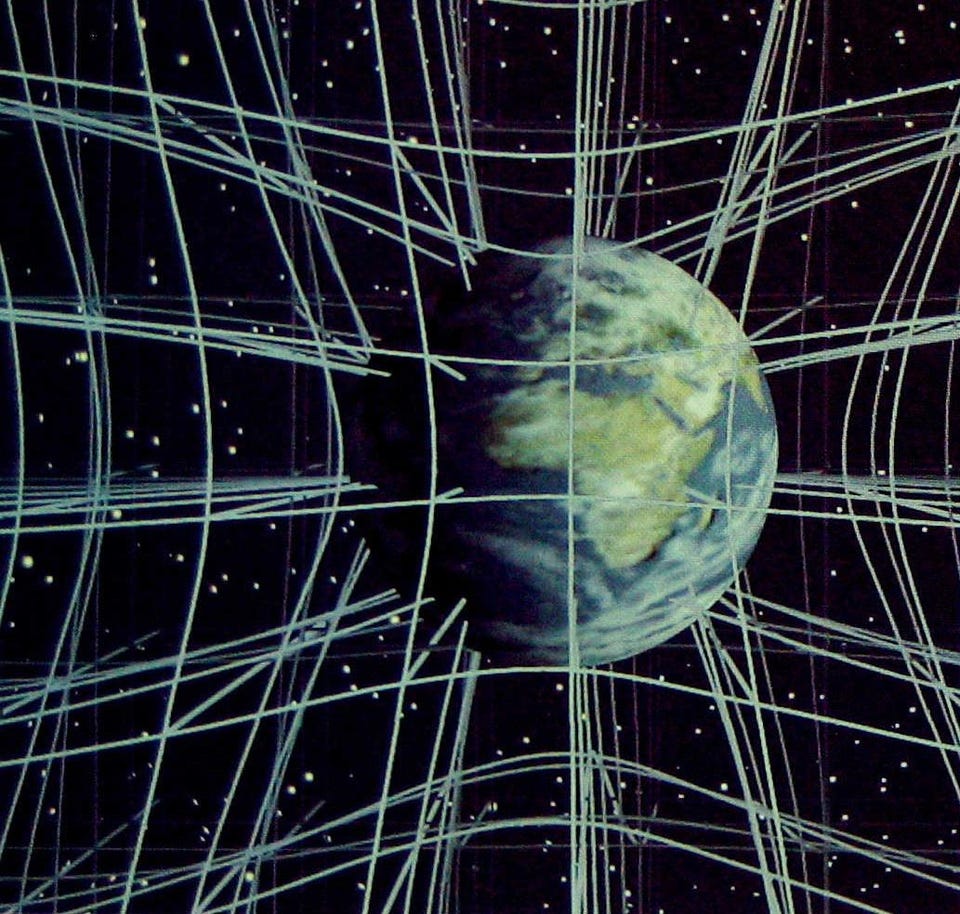

In Waves in an Impossible Sea, Matt Strassler explains how human life is intimately connected to the larger cosmos

January 8th 2024

Why some people don’t trust science – and how to change their minds

Published: December 29, 2023 11.42am GMT

Author

- Laurence D. Hurst Professor of Evolutionary Genetics at The Milner Centre for Evolution, University of Bath

Disclosure statement

Laurence D. Hurst receives funding from The Evolution Education Trust. He is affiliated with The Genetics Society. Dr Cristina Fonseca also contributed to this article as well as to some of the research mentioned that was funded by The Genetics Society.

Partners

University of Bath provides funding as a member of The Conversation UK.

The Conversation UK receives funding from these organisations

We believe in the free flow of information

We believe in the free flow of information

Republish our articles for free, online or in print, under Creative Commons licence.

During the pandemic, a third of people in the UK reported that their trust in science had increased, we recently discovered. But 7% said that it had decreased. Why is there such variety of responses?

For many years, it was thought that the main reason some people reject science was a simple deficit of knowledge and a mooted fear of the unknown. Consistent with this, many surveys reported that attitudes to science are more positive among those people who know more of the textbook science.

But if that were indeed the core problem, the remedy would be simple: inform people about the facts. This strategy, which dominated science communication through much of the later part of the 20th century, has, however, failed at multiple levels.

In controlled experiments, giving people scientific information was found not to change attitudes. And in the UK, scientific messaging over genetically modified technologies has even backfired.

We believe in experts. We believe knowledge must inform decisions

The failure of the information led strategy may be down to people discounting or avoiding information if it contradicts their beliefs – also known as confirmation bias. However, a second problem is that some trust neither the message nor the messenger. This means that a distrust in science isn’t necessarily just down to a deficit of knowledge, but a deficit of trust.

With this in mind, many research teams including ours decided to find out why some people do and some people don’t trust science. One strong predictor for people distrusting science during the pandemic stood out: being distrusting of science in the first place.

Understanding distrust

Recent evidence has revealed that people who reject or distrust science are not especially well informed about it, but more importantly, they typically believe that they do understand the science.

This result has, over the past five years, been found over and over in studies investigating attitudes to a plethora of scientific issues, including vaccines and GM foods. It also holds, we discovered, even when no specific technology is asked about. However, they may not apply to certain politicised sciences, such as climate change.

Recent work also found that overconfident people who dislike science tend to have a misguided belief that theirs is the common viewpoint and hence that many others agree with them.

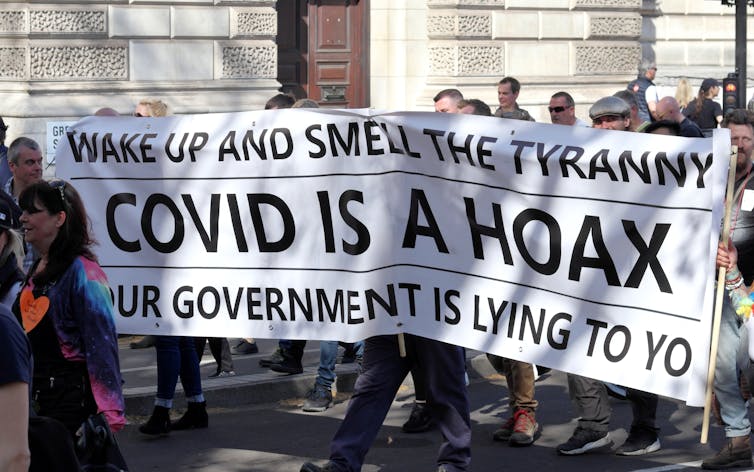

Other evidence suggests that some of those who reject science also gain psychological satisfaction by framing their alternative explanations in a manner that can’t be disproven. Such is often the nature of conspiracy theories – be it microchips in vaccines or COVID being caused by 5G radiation.

But the whole point of science is to examine and test theories that can be proven wrong – theories scientists call falsifiable. Conspiracy theorists, on the other hand, often reject information that doesn’t align with their preferred explanation by, as a last resort, questioning instead the motives of the messenger.

When a person who trusts the scientific method debates with someone who doesn’t, they are essentially playing by different rules of engagement. This means it is hard to convince sceptics that they might be wrong.

Finding solutions

So what we can one do with this new understanding of attitudes to science?

The messenger is every bit as important as the message. Our work confirms many prior surveys showing that politicians, for example, aren’t trusted to communicate science, whereas university professors are. This should be kept in mind.

The fact that some people hold negative attitudes reinforced by a misguided belief that many others agree with them suggests a further potential strategy: tell people what the consensus position is. The advertising industry got there first. Statements such as “eight out ten cat owners say their pet prefers this brand of cat food” are popular.

A recent meta-analysis of 43 studies investigating this strategy (these were “randomised control trials” – the gold standard in scientific testing) found support for this approach to alter belief in scientific facts. In specifying the consensus position, it implicitly clarifies what is misinformation or unsupported ideas, meaning it would also address the problem that half of people don’t know what is true owing to circulation of conflicting evidence.

A complementary approach is to prepare people for the possibility of misinformation. Misinformation spreads fast and, unfortunately, each attempt to debunk it acts to bring the misinformation more into view. Scientists call this the “continued influence effect”. Genies never get put back into bottles. Better is to anticipate objections, or inoculate people against the strategies used to promote misinformation. This is called “prebunking”, as opposed to debunking.

Different strategies may be needed in different contexts, though. Whether the science in question is established with a consensus among experts, such as climate change, or cutting edge new research into the unknown, such as for a completely new virus, matters. For the latter, explaining what we know, what we don’t know and what we are doing – and emphasising that results are provisional – is a good way to go.

By emphasising uncertainty in fast changing fields we can prebunk the objection that a sender of a message cannot be trusted as they said one thing one day and something else later.

But no strategy is likely to be 100% effective. We found that even with widely debated PCR tests for COVID, 30% of the public said they hadn’t heard of PCR.

A common quandary for much science communication may in fact be that it appeals to those already engaged with science. Which may be why you read this.

That said, the new science of communication suggests it is certainly worth trying to reach out to those who are disengaged.

December 29th 2023

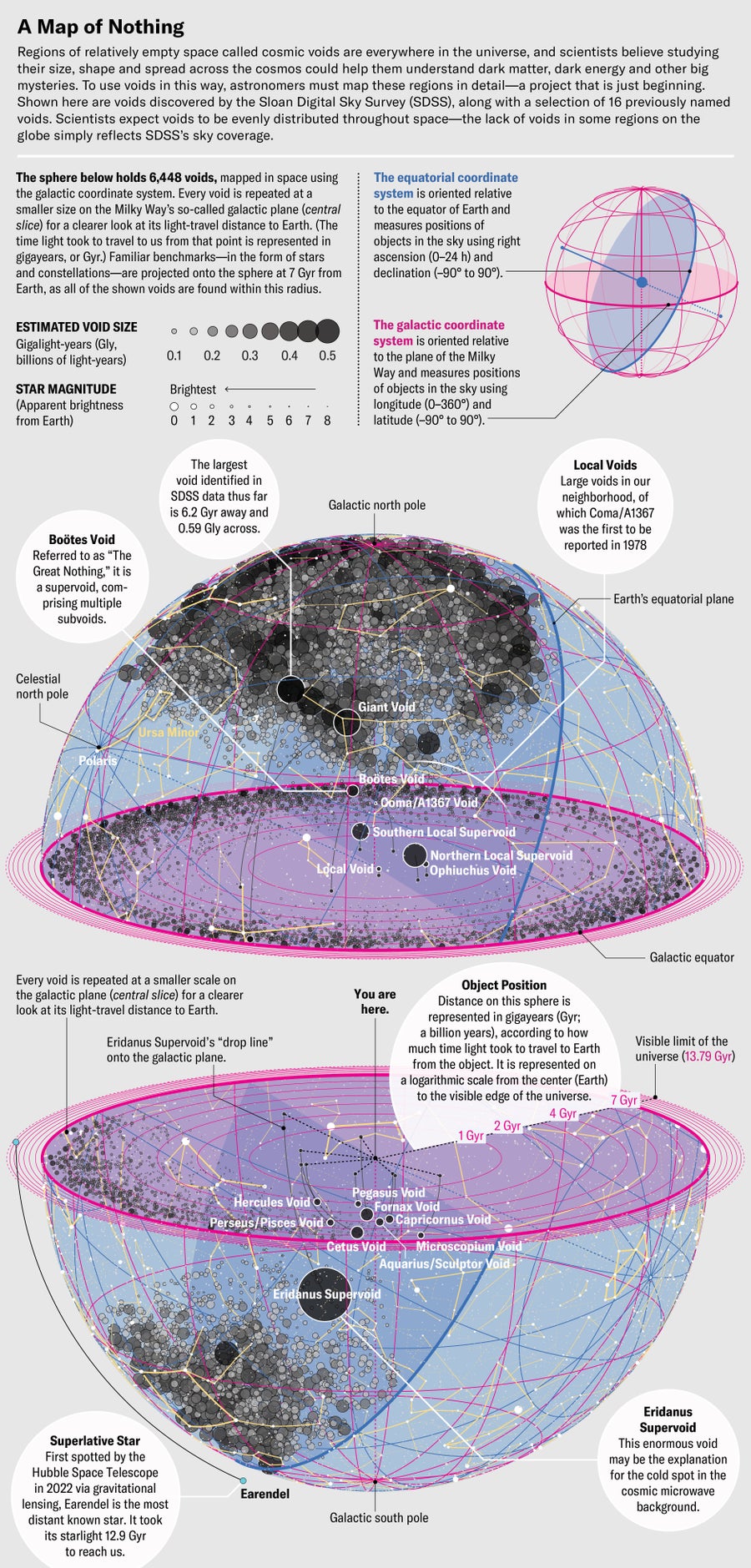

How Analyzing Cosmic Nothing Might Explain Everything

Huge empty areas of the universe called voids could help solve the greatest mysteries in the cosmos

Computational astrophysicist Alice Pisani put on a virtual-reality headset and stared out into the void—or rather a void, one of many large, empty spaces that pepper the cosmos. “It was absolutely amazing,” Pisani recalls. At first, hovering in the air in front of her was a jumble of shining dots, each representing a galaxy. When Pisani walked into the jumble, she found herself inside a large swath of nothing with a shell of galaxies surrounding it. The image wasn’t just a guess at what a cosmic void might look like; it was Pisani’s own data made manifest. “I was completely surprised,” she says. “It was just so cool.”

The visualization, made in 2022, was a special project by Bonny Yue Wang, then a computer science undergraduate at the Cooper Union for the Advancement of Science and Art in New York City. Pisani teaches a course there in cosmology—the structure and evolution of the universe. Wang had been aiming to use Pisani’s data on voids, which can stretch from tens to hundreds of millions of light-years across, to create an augmented-reality view of these surprising features of the cosmos.

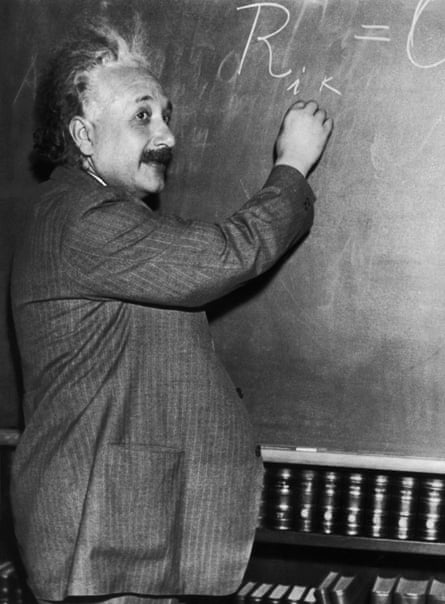

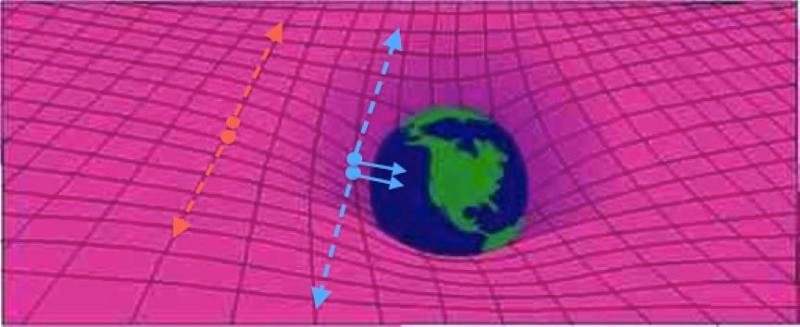

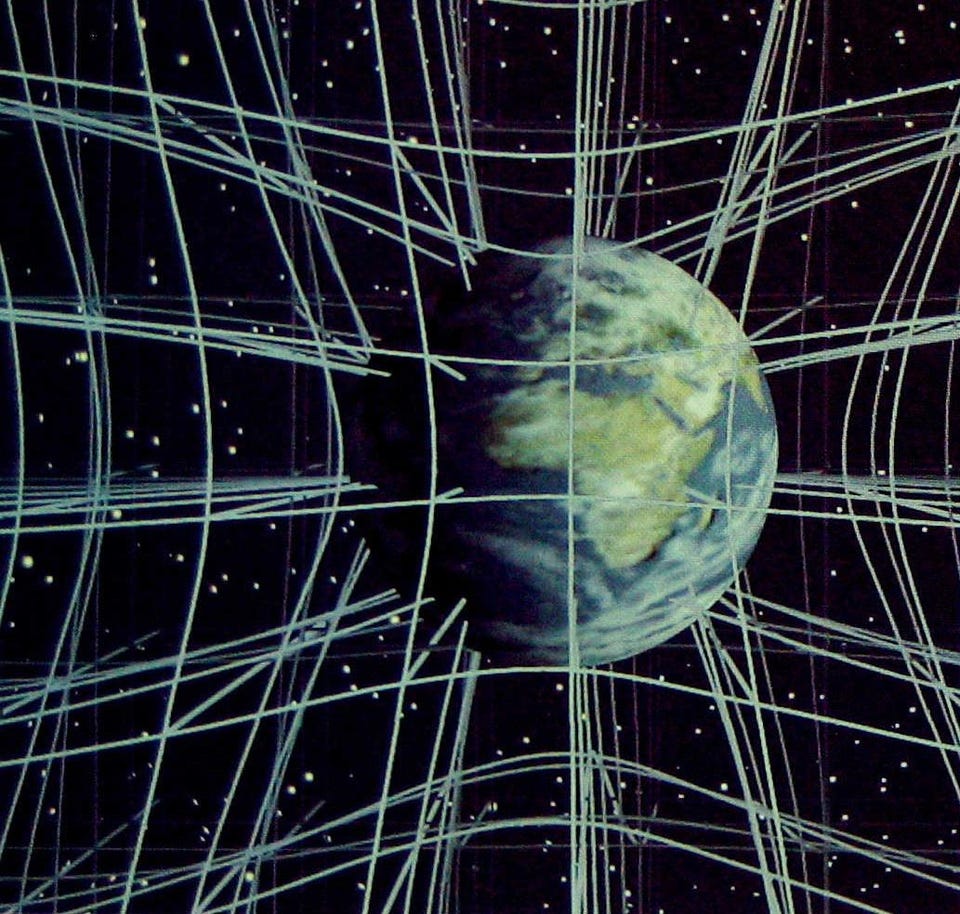

The project would have been impossible a decade ago, when Pisani was starting out in the field. Scientists have known since the 1980s that these fields of nothing exist, but inadequate observational data and insufficient computing power kept them from being the focus of serious research. Lately, though, the field has made tremendous progress, and Pisani has been helping to bring it into the scientific mainstream. Within just a few years, she and an increasing number of scientists are convinced, the study of the universe’s empty spaces could offer important clues to help solve the mysteries of dark matter, dark energy and the nature of the enigmatic subatomic particles called neutrinos. Voids have even shown that Einstein’s general theory of relativity probably operates the same way at very large scales as it does locally—something that has never been confirmed. “Now is the right moment to use voids” for cosmology, says David Spergel, former chair of astrophysics at Princeton University and current president of the Simons Foundation. Benjamin Wandelt of the Lagrange Institute in Paris echoes the sentiment: “Voids have really taken off. They’re becoming kind of a hot topic.”

The discovery of cosmic voids in the late 1970s to mid-1980s came as something of a shock to astronomers, who were startled to learn that the universe didn’t look the way they’d always thought. They knew that stars were gathered into galaxies and that galaxies often clumped together into clusters of dozens or even hundreds. But if you zoomed out far enough, they figured, this clumpiness would even out: at the largest scales the cosmos would look homogeneous. It wasn’t just an assumption. The so-called cosmic microwave background (CMB)—electromagnetic radiation emitted about 380,000 years after the big bang—is extremely homogeneous, reflecting smoothness in the distribution of matter when it was created. And even though that was nearly 14 billion years ago, the modern universe should presumably reflect that structure.

But we can’t tell whether that’s the case just by looking up. The night sky appears two-dimensional even through a telescope. To confirm the presumption of homogeneity, astronomers needed to know not only how galaxies are distributed across the sky but how they’re distributed in the third dimension of space—depth. So they needed to measure the distance from Earth to many galaxies near and far to figure out what’s in the foreground, what’s in the background and what’s in the middle. In 1978 Laird A. Thompson of the University of Illinois Urbana-Champaign and Stephen A. Gregory of the University of New Mexico did just that and discovered the first hints of cosmic voids, shaking the presumption that the universe was smooth. In 1981 Harvard University’s Robert Kirshner and four of his colleagues discovered a huge void, about 400 million light-years across, in the direction of the constellation Boötes. It was so big and so empty that “if the Milky Way had been in the center of the Boötes void, we wouldn’t have known there were other galaxies [in the universe] until the 1960s,” as Gregory Scott Aldering, now at Lawrence Berkeley National Laboratory, once put it.

In 1986 Margaret J. Geller, John Huchra and Valérie de Lapparent, all then at Harvard, confirmed that the voids Thompson, Kirshner and their colleagues had found were no flukes. The team had painstakingly surveyed the distance to many hundreds of galaxies spread out over a wide swath of sky and found that voids appeared to be everywhere. “It was so exciting,” says de Lapparent, now director of research at the Institut d’Astrophysique de Paris (IAP). She had been a graduate student at the time and was spending a year working with Geller, who was trying to understand the large-scale structure of the universe. A cross section of the local cosmos that astronomers had put together earlier showed hints of a filamentary structure consisting of regions either overdense or underdense with galaxies. “Margaret had this impression that this was just an observing bias,” de Lapparent says, “but we had to check. We wanted to look farther out.” They used a relatively small telescope on Mount Hopkins in Arizona. “I learned to observe on that telescope,” de Lapparent recalls. “I was on my own after a night of training, which was so exciting.” When she was done, she, Geller and Huchra made a map of the galaxies’ locations. “It was amazing,” she says. “We had these big, circular voids and these sharp walls full of galaxies.”

Related Stories

- Vitamin D Hope and Hype, Cosmic Voids and Preventing DepressionLaura Helmuth

- The Most Shocking Discovery in Astrophysics Is 25 Years OldRichard Panek

- A Possible Crisis in the Cosmos Could Lead to a New Understanding of the UniverseMichael D. Lemonick

- Why Is the Sky Dark Even Though the Universe Is Full of Stars?Brian Jackson & The Conversation US

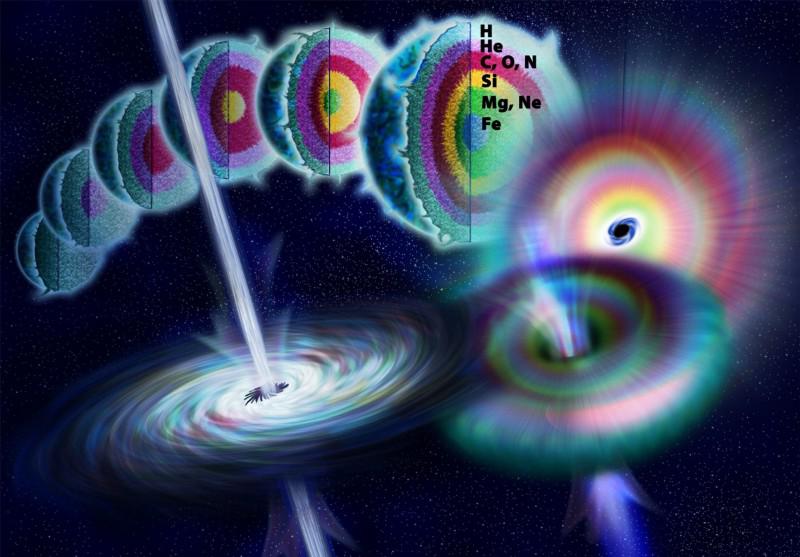

“All of these features,” the researchers wrote in their paper, entitled “A Slice of the Universe,” “pose serious challenges for current models for the formation of large-scale structure.” As later, deeper surveys would confirm, galaxies and clusters of galaxies are themselves concentrated into a gigantic web of concentrated regions of matter connected by streaming filaments, with gargantuan voids in between. In other words, the cosmos today vaguely resembles Swiss cheese, whereas the CMB looks more like cream cheese.

The question, then, was: What forces made the universe evolve from cream cheese into Swiss cheese? One factor was almost certainly dark matter, the invisible mass whose existence had in the 1980s only recently been accepted by most astrophysicists, despite years of tantalizing evidence from observers such as Vera Rubin and Fritz Zwicky. It was more massive than ordinary, visible matter by a factor of six or so. That would have made the gravitational pull of slightly overdense regions in the early universe stronger than anyone had guessed. Stars and galaxies would have formed preferentially in these areas of high density, leaving low-density regions largely empty.

Most observers and theorists continued to explore what would come to be known as the “cosmic web,” but very few concentrated on voids. It wasn’t for lack of interest; the problem was that there wasn’t much to look at. Voids were important not because of what they contained but because their very existence, their shapes and sizes and distances from one another, had to be the result of the same forces that gave structure to the universe. To use voids to understand how those forces worked, astrophysicists needed to include many examples in statistical analyses of voids’ average size and shape and separation, yet too few had been found to draw useful conclusions from them. It was analogous to the situation with exoplanets in the 1990s: the first few discovered were proof that planets did indeed orbit stars beyond the sun, but it wasn’t until the Kepler space telescope began raking them in by the thousands after its 2009 launch that planetary scientists could say anything meaningful about how many and what kinds of planets populated the Milky Way.

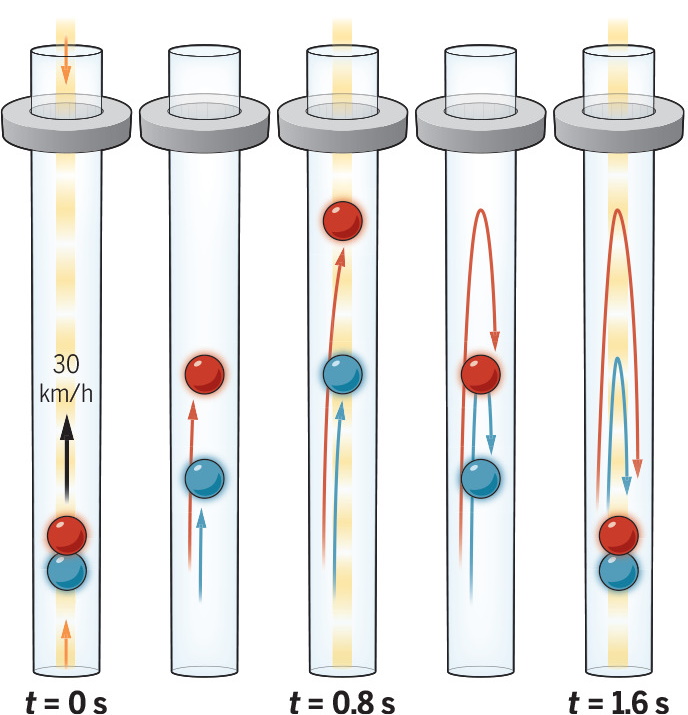

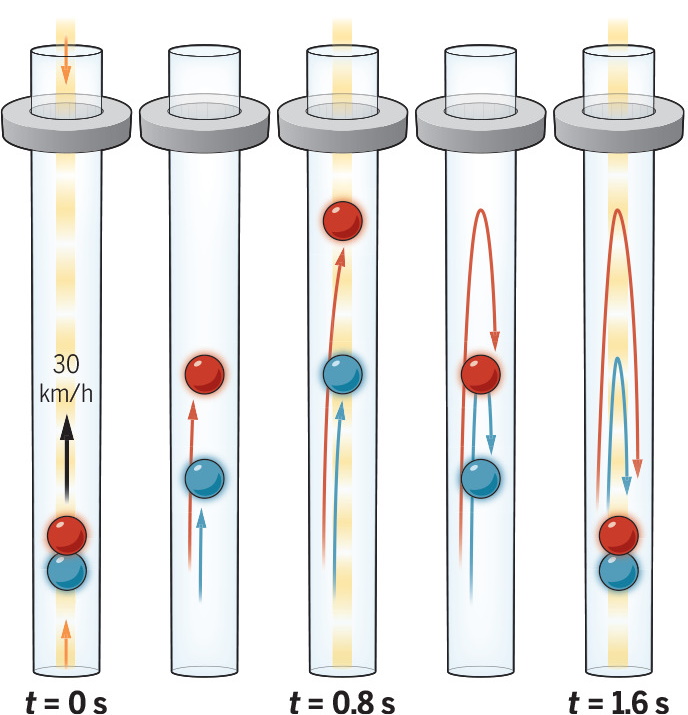

Another issue with studying voids was raised in 1995 by Barbara Ryden of the Ohio State University and Adrian L. Melott of the University of Kansas. Galaxy surveys, they pointed out, are conducted in “redshift space,” not actual space. To understand what they meant, consider that as the universe expands, light waves are stretched from their original wavelengths and colors into longer, redder wavelengths. The farther away something is from an observer, the more its light is stretched. The James Webb Space Telescope was designed to be sensitive to infrared light in part so it can see the very earliest galaxies, whose light has been stretched all the way out of the visible spectrum—it’s redder than red. And the CMB, the most distant light we can detect, has been stretched so much that we now perceive it in the form of microwaves. “Measuring the physical distances to galaxies is difficult,” Ryden and Melott wrote in a paper in the Astrophysical Journal. “It’s much easier to measure redshifts.” But, they noted, redshifts can distort the actual distances to galaxies that enclose a void and thus give a misleading idea of their size and shape. The problem, explains Nico Hamaus of the Ludwig Maximilian University of Munich, is that as a void expands, “the near side is coming toward us, and the far side is streaming away.” That differential subtracts from the redshift on the near side and adds to it on the far side, making the void look artificially elongated.

Despite the difficulties, astrophysicists began to feel more equipped to tackle voids by the late 2000s. Projects such as the Sloan Digital Sky Survey had probed much more deeply into the cosmos than the map made by Geller, Huchra and de Lapparent and confirmed that voids were everywhere you looked. Independent observations by two teams of astrophysicists, meanwhile, had revealed the existence of dark energy, a kind of negative gravity that was forcing the universe to expand faster and faster rather than slowing down from the mutual gravitational attraction of trillions of galaxies. Voids seemed to offer astronomers a promising way of studying what might be driving dark energy.

These developments caught Wandelt’s eye. His specialty has always been trying to understand how the large-scale structure of the modern universe came to be. One of the aspects of voids that he found attractive, he says, was that “these underdense regions are much quieter in some ways, more amenable to modeling” than the clusters and filaments that separate them. Galaxies and gases are crashing into each other in nonlinear and complicated interactions, Wandelt says. There’s “a chaos” that erases the information about their formation. Further complicating things, the gravitational attraction between galaxies is strong enough on smaller scales that it counteracts the general expansion of the universe—and even counteracts the extra oomph of dark energy. Andromeda, for example, the nearest large galaxy to our own, is actually drawing closer to the Milky Way; in four billion years or so, they’ll merge. Voids, in contrast, “are dominated by dark energy,” Wandelt says. “The biggest ones are actually expanding faster than the rest of the universe.” That makes them ideal laboratories for getting a handle on this still puzzling force.

Sign Up for Our Daily Newsletter

Email AddressBy giving us your email, you are agreeing to receive the Today In Science newsletter and to our Terms of Use and Privacy Policy.

And it’s not just an understanding of dark energy that could emerge from this line of study; voids could also cast light (so to speak) on the nature of dark matter. Although voids have much less dark matter in them than the clusters and filaments of the cosmic web do, there’s still some. And unlike the chaotic web, with its swirling hot gases and colliding galaxies, the voids are calm enough that the particles astrophysicists think make up dark matter might be detectable. They wouldn’t show up directly, because they neither absorb nor emit light. But the particles should occasionally collide, resulting in tiny bursts of gamma rays. They would also probably decay eventually, releasing gamma rays in that process as well. A sufficiently sensitive gamma-ray telescope in space would theoretically be able to detect their collective signal. Nicolao Fornengo of the University of Turin in Italy, co-author of a preprint study laying out this rationale, says that “if dark matter produces [gamma rays], the signal should be in there.”

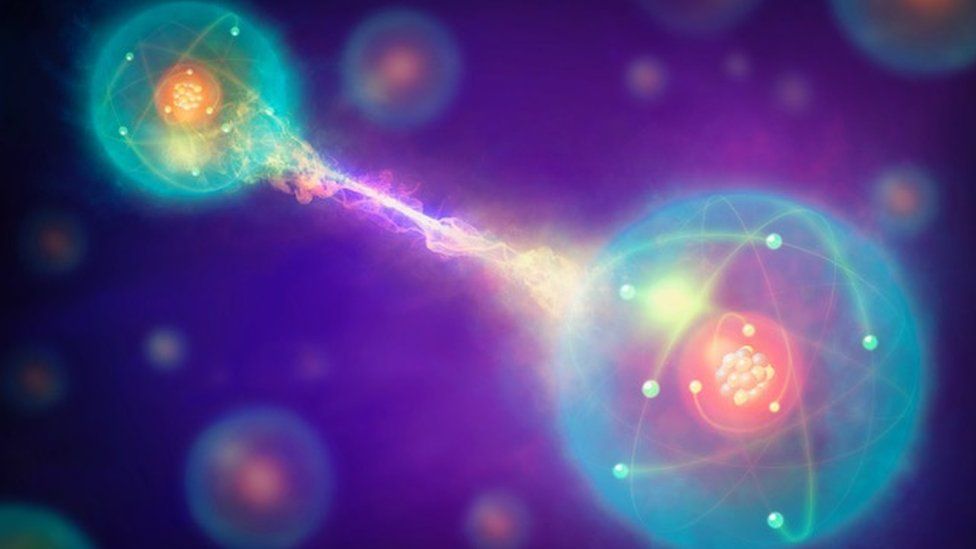

Voids could even help to nail down the nature of neutrinos—elementary particles, once thought to be massless, that pervade the universe while barely interacting with ordinary matter. (If you sent a beam of neutrinos through a slab of lead one light-year, or nearly six trillion miles, thick, about half of them would sail through it effortlessly.) Physicists have confirmed that the three known types of neutrinos do have masses, but they aren’t sure why or exactly what those masses are.

Voids could help them find the answer, says Elena Massara, a postdoctoral researcher at the Waterloo Center for Astrophysics at the University of Waterloo in Canada. They’re places that have a lack of both luminous matter and dark matter, she explains, “but they’re full of neutrinos, which are almost uniformly distributed” through the universe, including in voids.That’s because neutrinos zip through the cosmos at nearly the speed of light, which means they don’t clump together under their mutual gravity—or under the gravity of the dark matter concentrations that act as the scaffolding for the cosmic web. Although voids always contain a lot of neutrinos, the particles are only passing through—those that fly out are constantly replenished by more neutrinos streaming in. And their combined gravity can make the voids grow more slowly over time than they would otherwise. The rate of growth—determined through comparison of the average size of voids in the early universe to those in the modern universe—can reveal how much mass neutrinos actually have.

Void science has changed a lot since Pisani started studying it as a graduate student working with Wandelt. He offered two or three suggestions for a dissertation topic, she recalls, and one of them was cosmic voids. “I felt that they were the riskiest choice,” she says, “because there were very few data at the time. But they were also incredibly challenging,” which she found exciting. The data Pisani and others needed to analyze the voids, however—that is, to test their real-world properties against computer models incorporating dark matter, dark energy, neutrinos and the formation of large-scale structure in the universe—were simply not available. “When I started my Ph.D. thesis,” Pisani says, “we knew of fewer than 300 voids, something like that. Today we have on the order of 6,000 or more.”

That’s huge, but it’s still not enough for the comprehensive statistical analysis necessary for voids to be used for serious cosmology—with one exception. In 2020 Hamaus, Pisani, Wandelt and several of their colleagues published an analysis showing that general relativity behaves at least approximately the same way on very large scales as it seems to do in the local universe. Voids can be used to test this question because astrophysicists think they result from the way dark matter clusters in the universe: the dark matter pulls in ordinary matter, creating the cosmic web and leaving empty spaces behind. But what if general relativity, our best theory of gravity, breaks down somehow over very large distances? Few scientists expect that to be the case, but it has been suggested as a means to explain away the existence of dark matter.

By looking at the thickness of the walls of matter surrounding voids, however, Hamaus and his colleagues determined that Einstein’s theory is safe to rely on. To understand why, imagine a void as “a circle whose radius increases with the expansion of the universe,” Wandelt says. As the circle grows, it pushes against the boundaries of galaxies and clusters at its perimeter. Over time these structures aggregate, thickening the “wall” that defines the void’s edge. Dark energy and neutrinos affect the thickness as well, but because they are smoothly distributed both inside and outside the voids, they have a much smaller effect overall.

Scientists plan to use voids to learn even more about the universe soon because they expect to rapidly multiply the number of known voids in their catalog. “In the next five or 10 years,” Pisani says, “we’re going to have hundreds of thousands. It’s one of those fields where numbers really make a difference.” So, Spergel says, do advances in machine learning, which will make it far easier to analyze void properties.

These exploding numbers won’t be coming from projects explicitly designed to search for voids. They will arrive, as they did with the Sloan Digital Sky Survey, as a by-product of more general surveys. The European Space Agency’s Euclid mission, for example, which launched in July 2023, will create a 3-D map of the cosmic web with unprecedented breadth and depth. NASA’s Nancy Grace Roman Space Telescope will begin its own survey in 2026, looking in infrared light. And in 2024 the ground-based Vera C. Rubin Observatory will launch a 10-year study of cosmic structure, among other things. Combined, these projects should increase the inventory of known voids by two orders of magnitude.

“I remember one of the first talks I gave on void cosmology, at a conference in Italy,” Pisani says. “At the end the audience had no questions.” She wasn’t sure at the time whether the reason was skepticism or simply that the topic was so new to her listeners that they couldn’t think of anything to ask. In retrospect, she thinks it was a little of both. “Initially, I think the problem was just convincing people that this was reasonable science to look into,” she says.

That’s much less of an issue now. For example, Pisani points out, the Euclid voids group has about 100 scientists in it. “I have to say that Alice was one of the fearless pioneers of this field,” Wandelt notes about his former Ph.D. student. When they started writing the first papers on void science, he recalls, some of the leading figures in astrophysics “expressed severe doubt that you could do anything cosmologically interesting with voids.” The biggest confirmation that they were wrong, he says, is that some of those same people are now enthusiastic.

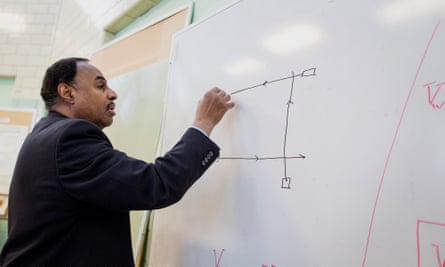

Pisani is perhaps the ideal representative for this fast-emerging field. She approaches the topic with absolute scientific rigor but also with infectious enthusiasm. Whenever she talks about voids, she lights up, speaking rapidly, jumping to her feet to draw diagrams on a whiteboard, and fielding questions (of which there are now many) with ease and confidence. She emphasizes that void science won’t answer all of astrophysicists’ big questions about the universe by itself. But it could do something even more valuable in a way: test ideas about dark matter, dark energy, neutrinos and the growth of cosmic structure independently of the other strategies scientists use. If the results match, great. If not, astrophysicists will have to reconcile their differences to find out what’s actually going on in the cosmos.

“I find the idea attractive and even somewhat poetic,” Wandelt says, “that looking into these areas where there’s nothing might yield information about some of the outstanding mysteries of the universe.”

Michael D. Lemonick is a freelance writer, as well as former chief opinion editor at Scientific American and a former senior science writer at Time. His most recent book is The Perpetual Now: A Story of Amnesia, Memory and Love (Doubleday, 2017). Lemonick also teaches science journalism at Princeton University.

This article was originally published with the title “Cosmic Nothing” in Scientific American Magazine Vol. 330 No. 1 (January 2024), p. 20

doi:10.1038/scientificamerican0124-20

Popular Stories

Public Health January 1, 2024How Much Vitamin D Do You Need to Stay Healthy?

Most people naturally have good vitamin D levels. Overhyped claims that the compound helps to fight diseases from cancer to depression aren’t borne out by recent research

Christie AschwandenWater January 1, 2024Why Are Alaska’s Rivers Turning Orange?

Streams in Alaska are turning orange with iron and sulfuric acid. Scientists are trying to figure out why

Alec LuhnGenetics January 1, 2024Sperm Cell Powerhouses Contain Almost No DNA

Scientists discover why fathers usually don’t pass on their mitochondria’s genome

Sneha KhedkarPharmaceuticals December 21, 2023How Two Pharmacists Figured Out That Decongestants Don’t Work

A loophole in FDA processes means older drugs like the ones in oral decongestants weren’t properly tested. Here’s how we learned the most popular one doesn’t work

Randy HattonEpidemiology December 19, 2023The Real Story Behind ‘White Lung Pneumonia’

Separate outbreaks of pneumonia in children have cropped up in the U.S., China and Europe. Public health experts say the uptick in cases is not caused by a novel pathogen

Today’s Silicon Valley billionaires grew up reading classic American science fiction. Now they’re trying to make it come true, embodying a dangerous political outlook

Charles Stross

Expand Your World with Science

Learn and share the most exciting discoveries, innovations and ideas shaping our world today.SubscribeSign up for our newslettersSee the latest storiesRead the latest issue

June 20th 2023

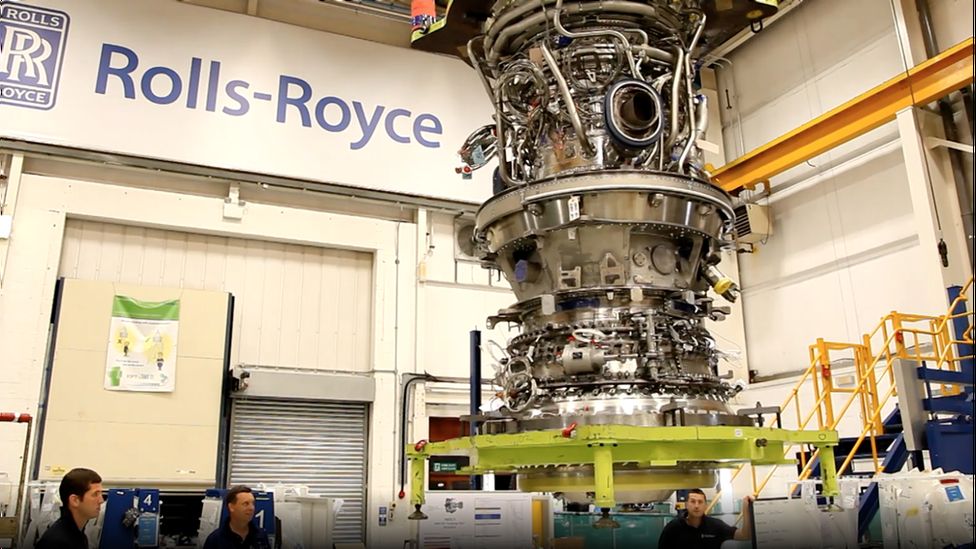

Airbus experiments with more control for the autopilot

By Shiona McCallum & Ashleigh Swan

Technology reporters

It’s difficult not to be a bit overwhelmed by the Airbus campus in Toulouse.

It is a huge site and the workplace for 28,000 staff, plus hundreds of visitors eager to see the planes being built.

The enormous Beluga cargo plane is parked at a loading dock, ready to transport vehicles and satellites around the world.

Close to where we conduct our interviews is the hangar where the supersonic passenger jet Concorde was developed.

This site is also home to much Airbus research and development, including the recently finished Project Dragonfly – an experiment to extend the ability of the autopilot.

Over the past 50 years automation in aviation has transformed the role of the pilot. These days pilots have a lot more assistance from tech in the cockpit.

Project Dragonfly, conducted on an Airbus A350-1000, extended the plane’s autonomy even further.

The project focused on three areas: improved automatic landing, taxi assistance and automated emergency diversion.

Perhaps the last of those is the most dramatic.

Malcolm Ridley, Chief Test Pilot of Airbus’s commercial aircraft, reassured us that the risk of being involved in an air accident is “vanishingly small”.

However, aircraft and crew need to be ready for any scenario, so Project Dragonfly tested an automatic emergency descent system.

The idea is this technology will take over if the pilots need to focus on heavy decision-making or if they were to become incapacitated.

Under its own control, the plane can descend and land, while recognising other aircraft, weather and terrain.

The system also allows the plane to speak to air traffic control over the radio with a synthetic voice created through the use of artificial intelligence.

It is a lot for the plane’s systems to take on.

One of the challenges was teaching the system to understand all of the information and create a solution, says Miguel Mendes Dias, Automated Emergency Operations Designer.

“The aircraft needs to, on its own, recover all the information. So it needs to listen for the airport messages from air traffic control.

“Then it needs to choose the most suitable airport for diversion,” he said.

Project Dragonfly performed two successful emergency descents.

During the test flights, French air traffic controllers fully understood the situation and the aircraft landed safely.

“It was really an amazing feat,” says Mr Mendes.

Thankfully, almost all landings are much less dramatic, and Project Dragonfly looked at the more usual kind as well.

Most big airports have technology which guides the aircraft on to the runway, called Precision Approach.

But not every airport in the world has that tech, so Airbus has been looking at a different way to land.

Project Dragonfly explored using different sensors to help an aircraft make an automated landing.

It included using a combination of normal cameras, infrared and radar technology.

The team also gathered data from around the world, so all sorts of weather conditions could be modelled.

As well as giving the plane more information, the extra sensors give the pilot extra clarity, when monitoring the landing.

For example, infrared cameras are useful in cloudy conditions, as the closer you get to objects the more of its heat an infrared sensor can pick up.

The tech “will make the pilot comfortable in the fact that he’s really aligned and on the good path to go to the runway,” says Nuria Torres Mataboch, a computer vision engineer on the Dragonfly project.

Project Dragonfly also looked at taxiing. Although this might seem like a basic task, it can be the most challenging part of the job, especially at the world’s busiest airports.

In this case, the pilot was in control of the aircraft.

The technology provided the crew with audio alerts. So when the aircraft came across obstacles it issued an alert. It also advised pilots on speed and showed them the way to the runaway.

“We wanted something that would assist and reduce the pilots’ workloads during the taxi phase,” said Mr Ridley.

What do pilots make of such developments? Some do not want the technology pushed too far.

“I don’t know if any pilot is particularly comfortable with the computer being the sole arbiter of whether or not a flight successfully lands,” said Tony Lucas, president of the Australian and International Pilots Association.

- Can Amsterdam make the circular economy work?

- The ‘exploding’ demand for giant heat pumps

- The chip maker that became an AI superpower

- Why car parks are the hottest space in solar power

- Protein’s power is being uncovered and unleashed

In addition, he is not convinced that self-flying planes will be able to deal with complex scenarios that come up.

“Automation can’t replace the decision making of two well-trained and rested pilots on the flight deck,” he said from his base at Sydney airport.

Mr Lucas used the example of the Boeing 737 Max, where an automated system led to two fatal crashes in 2018 and 2019.

Airbus is quick to point out that further automation will only be introduced when safe and that the objective is not to remove pilots from the cockpit.

But could passenger planes be pilot-free one day?

“Fully automated aircraft would only ever occur if that was clearly and certainly the safe way to go to protect our passengers and crew,” says Mr Ridley.

June 18th 2023

Clean Energy

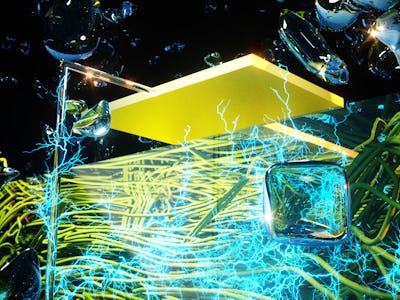

Scientists Made An Artificial “Cloud” That Pulls Electricity From Air

The secret? Tiny holes.

May 24, 2023

Derek Lovley/Ella Maru Studio

Taking a hint from the magician’s playbook, scientists have devised a way to pull electricity from thin air. A new study out today suggests a method in which any material can offer a steady supply of electricity from the humidity in the air.

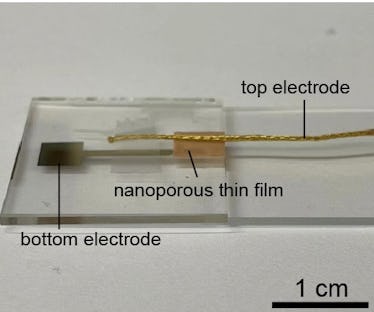

All that’s required? A pair of electrodes and a special material engineered to have teeny tiny holes that are less than 100 nanometers in diameter. That’s less than a thousandth of the width of a human hair.

Here’s how it works: The itty-bitty holes allow water molecules to pass through and generate electricity from the buildup of charge carried by the water molecules, according to a new paper published in the journal Advanced Materials.

The process essentially mimics how clouds make the electricity that they release in lightning bolts.

Because humidity lingers in the air perpetually, this electricity harvester could run at any time of day regardless of weather conditions — unlike somewhat unreliable renewable energy technologies such as wind and solar.

“The technology may lead to truly ‘ubiquitous powering’ to electronics,” senior study author Jun Yao, an electrical engineer at the University of Massachusetts Amherst, tells Inverse.

Man-made “clouds”

The recent discovery relies on the fact that the air is chock-full of electricity: Clouds contain a build-up of electric charge. However, it’s tough to capture and use electricity from these bolts.

Instead of trying to wrangle power from nature, Yao and his colleagues realized they could recreate it. The researchers previously created a device that uses a bacteria-derived protein to spark electricity from moisture in the air. But they realized afterward that many materials can get the job done, as long as they’re made with tiny enough holes. According to the new study, this type of energy-harvesting device — which the study authors have dubbed “Air-gen”, referring to the ability to pluck electricity from the air — can be made of “a broad range of inorganic, organic, and biological materials.”

“The initial discovery was really a serendipitous one,” says Yao, “so the current work really followed our initial intuition and lead to the discovery of the Air-gen effect working with literally all kinds of materials.”

Water molecules can travel around 100 nanometers in the air before bumping into each other. When water moves through a thin material that’s filled with these precisely sized holes, the charge tends to build up in the upper part of the material where they enter. Since fewer molecules reach the lower layer, this creates a charge imbalance that’s similar to the phenomenon in a cloud — essentially creating a battery that runs on humidity, which apparently isn’t just useful for making hair frizzy. Electrodes on both sides of the material then carry the electricity to whatever needs powering.

And since these materials are so thin, they can be stacked by the thousands and even generate multiple kilowatts of energy. In the future, Yao envisions everything from small-scale Air-gen devices that can power wearables to those that can offer enough juice for an entire household.

Before any of that can happen, though, Yao says his team needs to figure out how to collect electricity over a larger surface area and how best to stack the sheets vertically to increase the device’s power without taking up additional space. Still, he’s excited about the technology’s future potential. “My dream is that one day we can get clean electricity literally anywhere, anytime by using Air-gen technology,” he says.

more like this

Elon Musk’s Neuralink Brain Implant Approved for Human Trials in the US — Is It Safe?ScienceSummer 2023 Will Likely Break Heat Records — Here’s What To ExpectScienceThis One Metric Is More Important Than Temperature To Know If It’s Safe OutsideLEARN SOMETHING NEW EVERY DAY

Subscribe for free to Inverse’s award-winning daily newsletter!

By subscribing to this BDG newsletter, you agree to our Terms of Service and Privacy Policy

Related Tags

Space Science

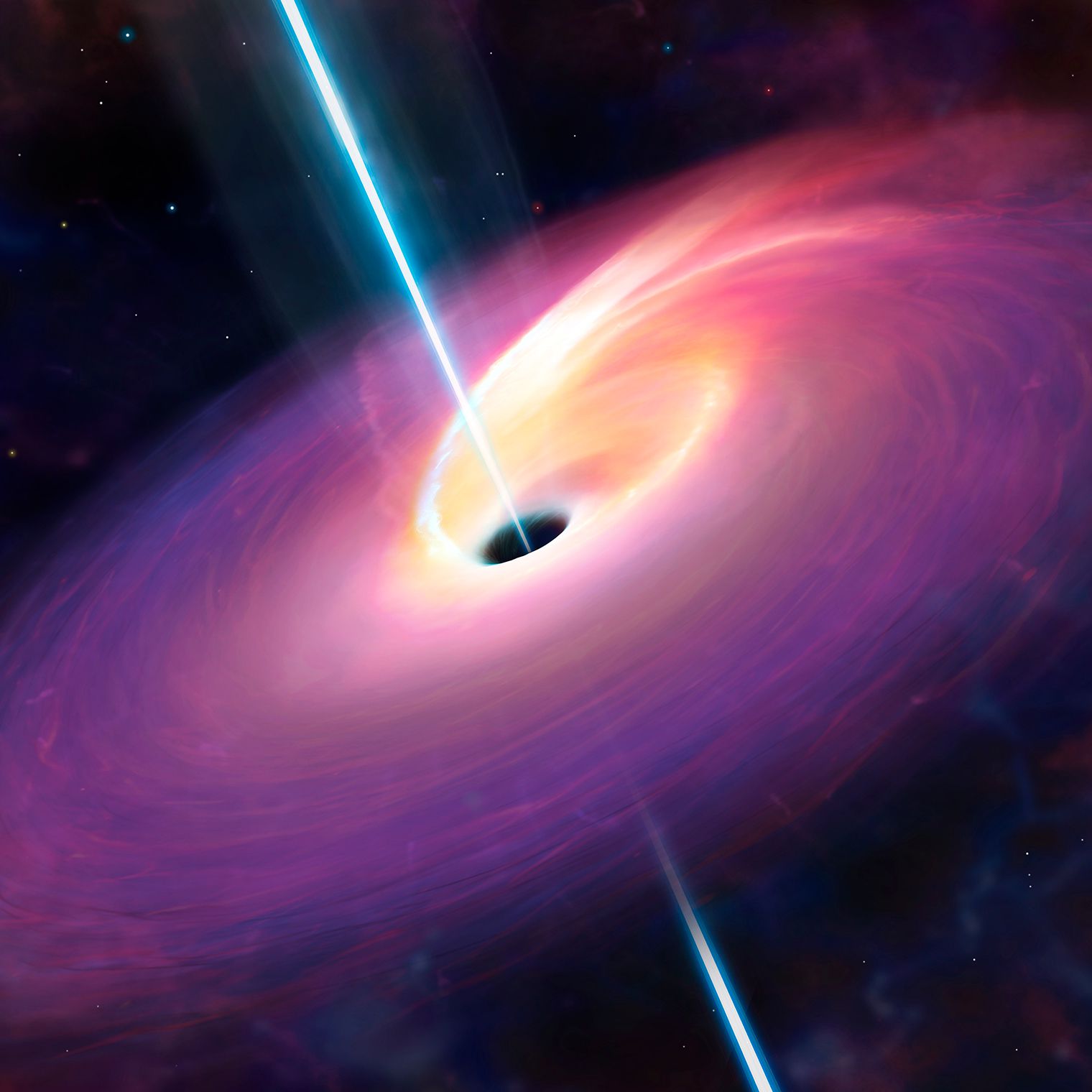

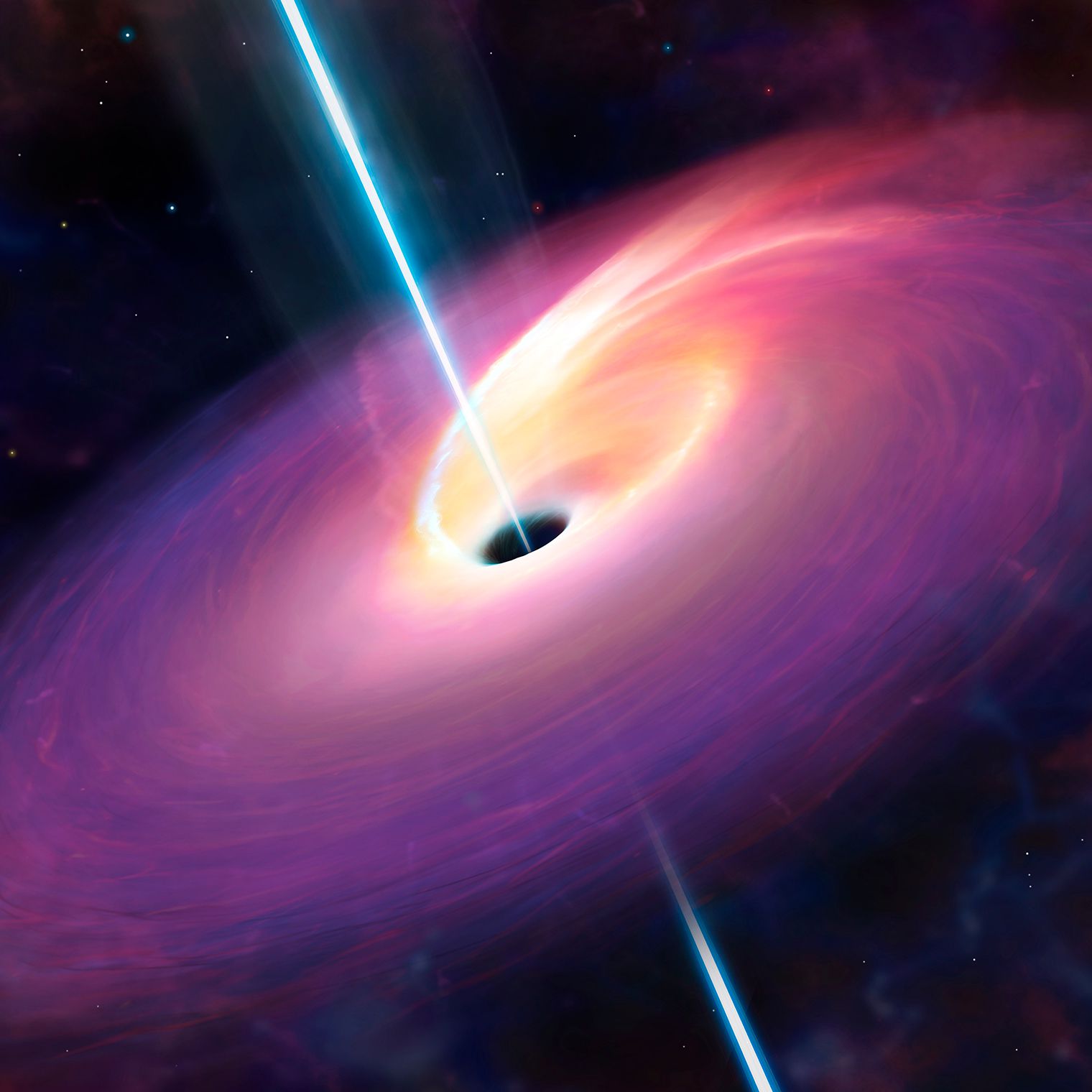

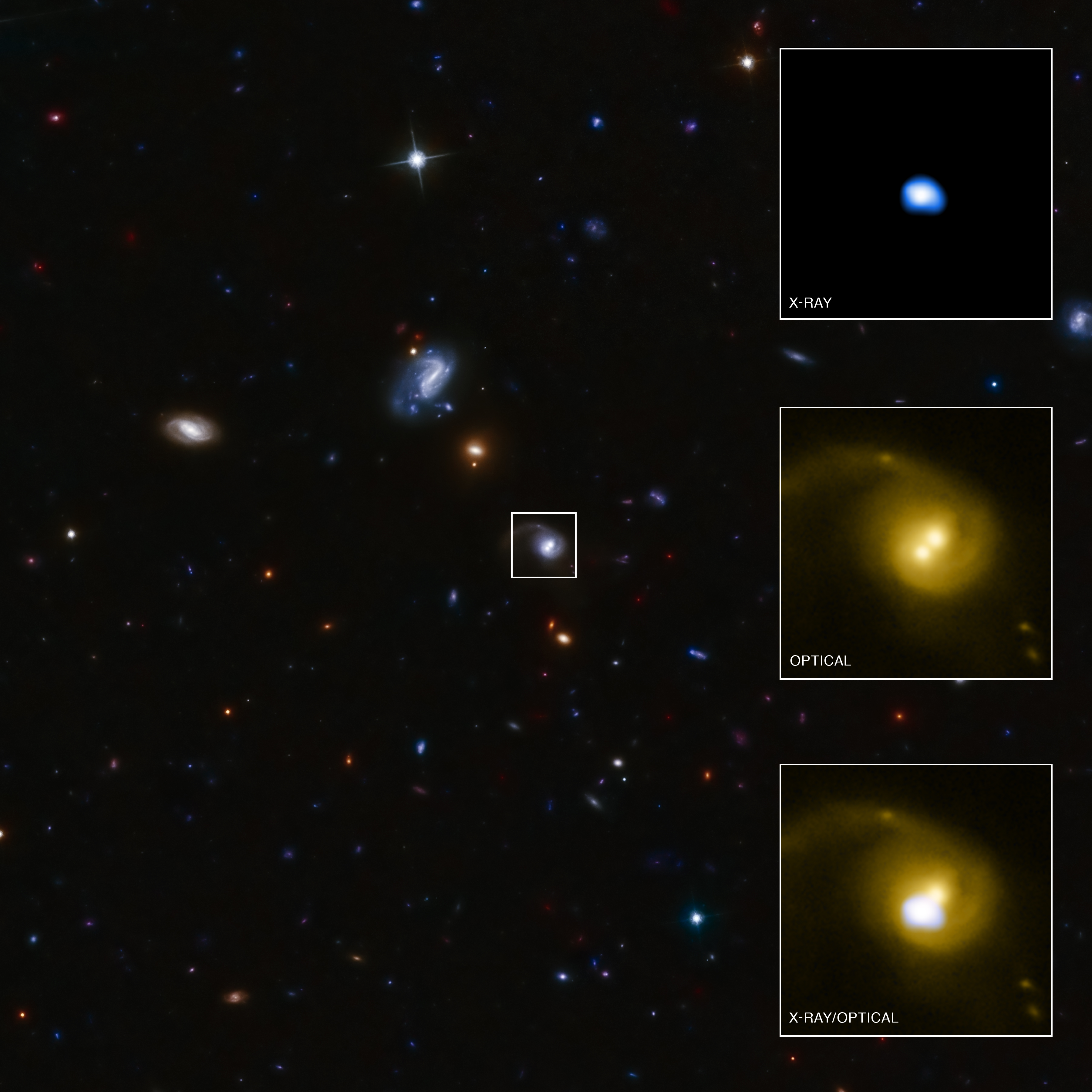

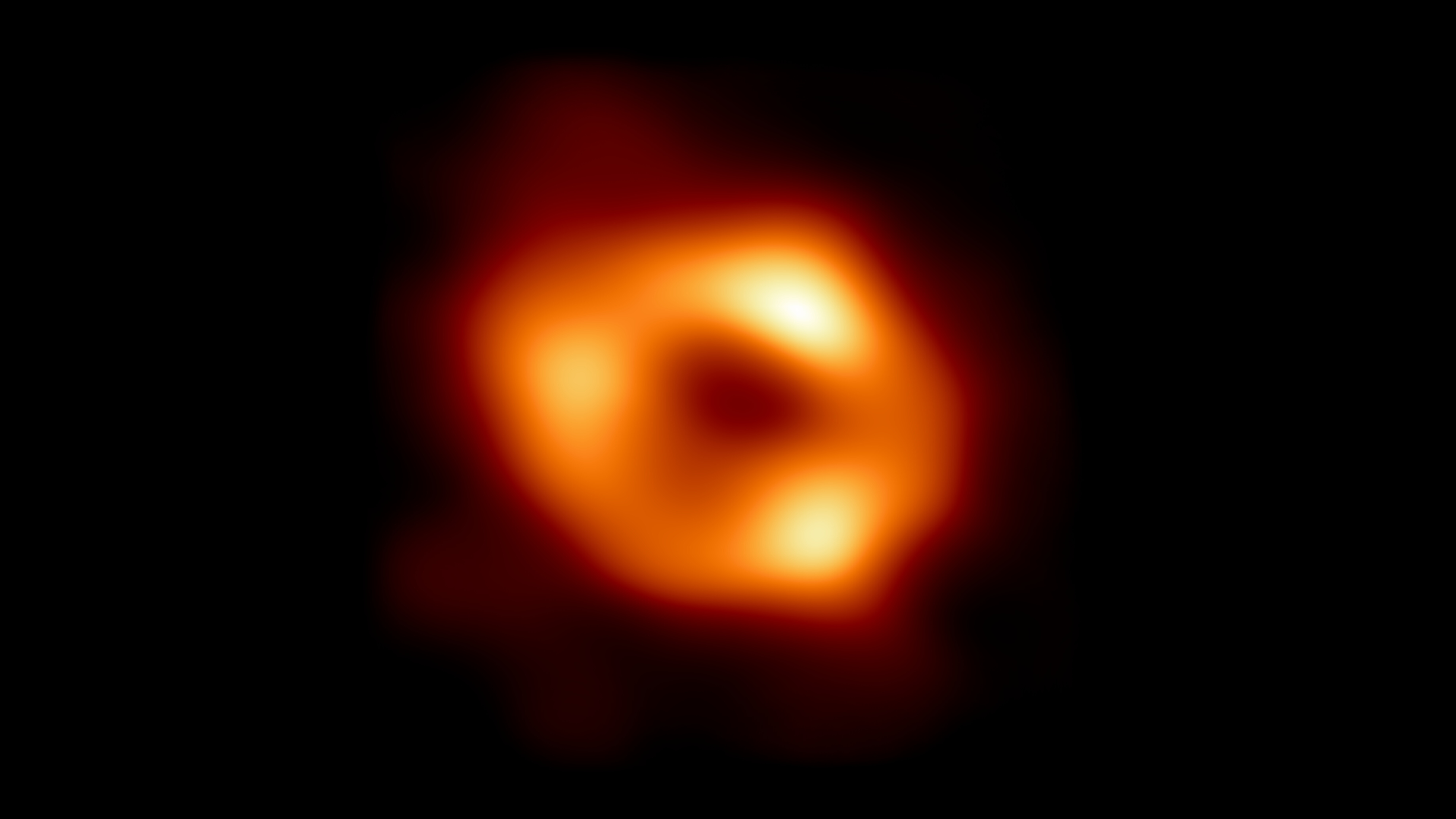

Shocking Threads of Gas Could Transform Our Understanding of a Supermassive Black Hole

“I was actually stunned when I saw these.”

June 2, 2023

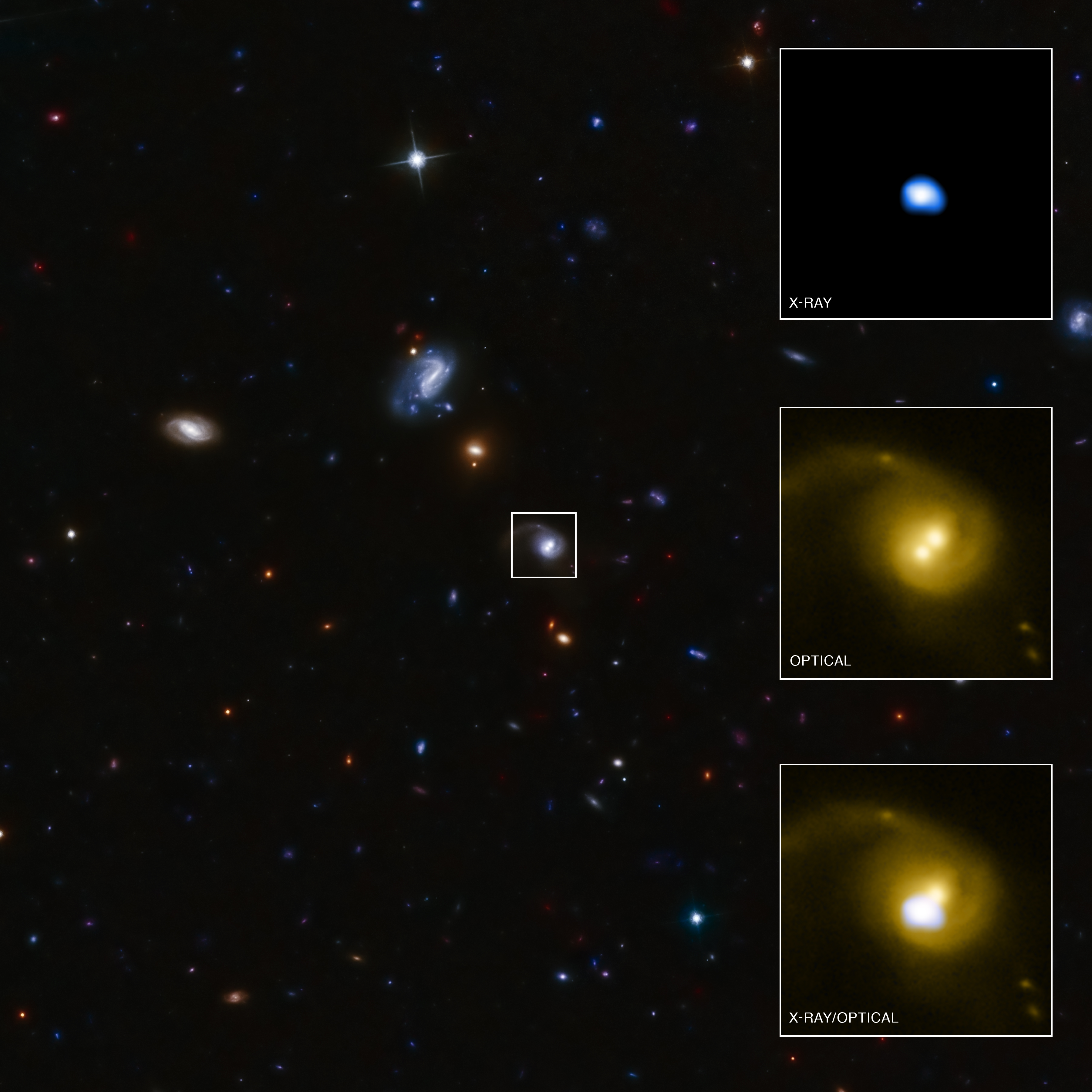

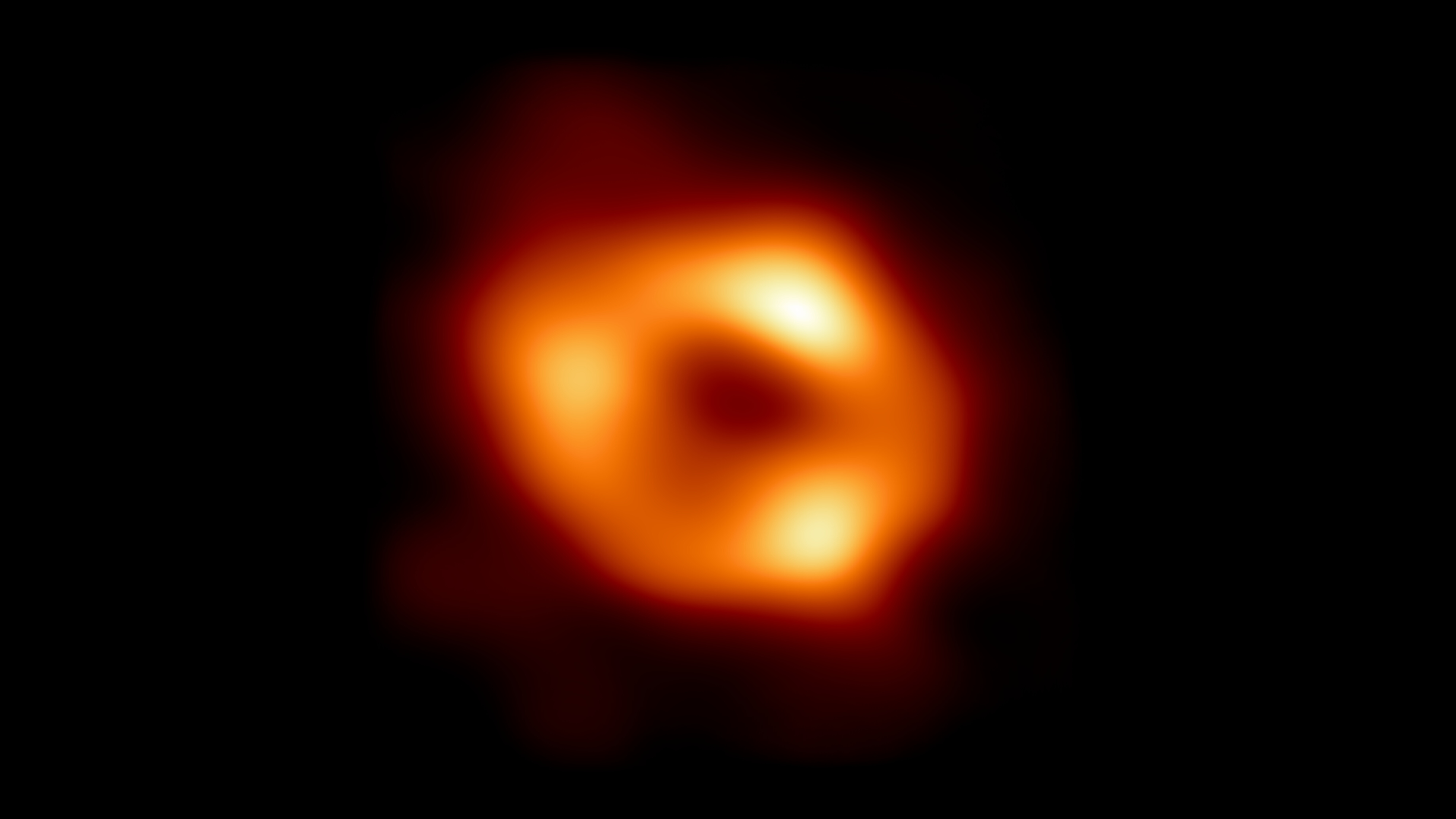

Credit: Farhad Yusef-Zadeh

Astrophysicists have stumbled upon a strange sight in the center of our galaxy: hundreds of horizontal threads of hot gas hanging out near Sagittarius A*, the Milky Way’s central supermassive black hole that’s located roughly 26,000 light-years away from Earth.

These one-dimensional filaments lie parallel to the galactic plane that surrounds the black hole, according to a new study published in The Astrophysical Journal Letters.

In the 1980s, Northwestern University astrophysicist Farhad Yusef-Zadeh discovered similar filaments arranged vertically, meaning they were aligned with the galaxy’s magnetic field. These measured up to 150 light-years tall and were likely made of high-energy electrons. Last year, his team revealed they had found nearly 1,000 of these vertical filaments, which showed up in pairs and clusters.

But when parsing through data from the MeerKAT radio telescope in South Africa, Yusef-Zadeh and his colleagues encountered a shocking revelation.

“It was a surprise to suddenly find a new population of structures that seem to be pointing in the direction of the black hole,” Yusef-Zadeh said in a press release. “I was actually stunned when I saw these. We had to do a lot of work to establish that we weren’t fooling ourselves.”

The newly discovered threads measure just 5 to 10 light-years long, sit on just one side of the black hole, and seem to point to Sagittarius A*. They may be about 6 million years old, the team estimates and could have formed when a jet erupted from Sagittarius A* and stretched surrounding gas into these odd threads.

This revelation, along with other newer discoveries, wouldn’t have been possible without recent advances in radio astronomy technology, according to Yusef-Zadeh. He and his colleagues relied on a technique that allowed them to remove the background and smooth out noise from MeerKAT images, offering a clear view of the filaments.

“The new MeerKAT observations have been a game changer,” he said. “The advancement of technology and dedicated observing time have given us new information. It’s really a technical achievement from radio astronomers.”

All in all, these new findings from Yusef-Zadeh’s team could teach us more about the mysterious black hole and its inner workings. “By studying them, we could learn more about the black hole’s spin and accretion disk orientation,” he said. “It is satisfying when one finds order in a middle of a chaotic field of the nucleus of our galaxy.”

June 13th 2023

Data Compression Drives the Internet. Here’s How It Works.

One student’s desire to get out of a final exam led to the ubiquitous algorithm that shrinks data without sacrificing information.

Read Later

May 31, 2023

Abstractions blogcomputer sciencecomputerscryptographyexplainersinformation theoryAll topics

Introduction

With more than 9 billion gigabytes of information traveling the internet every day, researchers are constantly looking for new ways to compress data into smaller packages. Cutting-edge techniques focus on lossy approaches, which achieve compression by intentionally “losing” information from a transmission. Google, for instance, recently unveiled a lossy strategy where the sending computer drops details from an image and the receiving computer uses artificial intelligence to guess the missing parts. Even Netflix uses a lossy approach, downgrading video quality whenever the company detects that a user is watching on a low-resolution device.

Very little research, by contrast, is currently being pursued on lossless strategies, where transmissions are made smaller, but no substance is sacrificed. The reason? Lossless approaches are already remarkably efficient. They power everything from the PNG image standard to the ubiquitous software utility PKZip. And it’s all because of a graduate student who was simply looking for a way out of a tough final exam.

Seventy years ago, a Massachusetts Institute of Technology professor named Robert Fano offered the students in his information theory class a choice: Take a traditional final exam, or improve a leading algorithm for data compression. Fano may or may not have informed his students that he was an author of that existing algorithm, or that he’d been hunting for an improvement for years. What we do know is that Fano offered his students the following challenge.

Consider a message made up of letters, numbers and punctuation. A straightforward way to encode such a message would be to assign each character a unique binary number. For instance, a computer might represent the letter A as 01000001 and an exclamation point as 00100001. This results in codes that are easy to parse — every eight digits, or bits, correspond to one unique character — but horribly inefficient, because the same number of binary digits is used for both common and uncommon entries. A better approach would be something like Morse code, where the frequent letter E is represented by just a single dot, whereas the less common Q requires the longer and more laborious dash-dash-dot-dash.

Abstractions navigates promising ideas in science and mathematics. Journey with us and join the conversation.

See all Abstractions blog

Yet Morse code is inefficient, too. Sure, some codes are short and others are long. But because code lengths vary, messages in Morse code cannot be understood unless they include brief periods of silence between each character transmission. Indeed, without those costly pauses, recipients would have no way to distinguish the Morse message dash dot-dash-dot dot-dot dash dot (“trite”) from dash dot-dash-dot dot-dot-dash dot (“true”).

Fano had solved this part of the problem. He realized that he could use codes of varying lengths without needing costly spaces, as long as he never used the same pattern of digits as both a complete code and the start of another code. For instance, if the letter S was so common in a particular message that Fano assigned it the extremely short code 01, then no other letter in that message would be encoded with anything that started 01; codes like 010, 011 or 0101 would all be forbidden. As a result, the coded message could be read left to right, without any ambiguity. For example, with the letter S assigned 01, the letter A assigned 000, the letter M assigned 001, and the letter L assigned 1, suddenly the message 0100100011 can be immediately translated into the word “small” even though L is represented by one digit, S by two digits, and the other letters by three each.

To actually determine the codes, Fano built binary trees, placing each necessary letter at the end of a visual branch. Each letter’s code was then defined by the path from top to bottom. If the path branched to the left, Fano added a 0; right branches got a 1. The tree structure made it easy for Fano to avoid those undesirable overlaps: Once Fano placed a letter in the tree, that branch would end, meaning no future code could begin the same way.

Share this article

Newsletter

Get Quanta Magazine delivered to your inbox

Introduction

To decide which letters would go where, Fano could have exhaustively tested every possible pattern for maximum efficiency, but that would have been impractical. So instead he developed an approximation: For every message, he would organize the relevant letters by frequency and then assign letters to branches so that the letters on the left in any given branch pair were used in the message roughly the same number of times as the letters on the right. In this way, frequently used characters would end up on shorter, less dense branches. A small number of high-frequency letters would always balance out some larger number of lower-frequency ones.

Introduction

The result was remarkably effective compression. But it was only an approximation; a better compression strategy had to exist. So Fano challenged his students to find it.

Fano had built his trees from the top down, maintaining as much symmetry as possible between paired branches. His student David Huffman flipped the process on its head, building the same types of trees but from the bottom up. Huffman’s insight was that, whatever else happens, in an efficient code the two least common characters should have the two longest codes. So Huffman identified the two least common characters, grouped them together as a branching pair, and then repeated the process, this time looking for the two least common entries from among the remaining characters and the pair he had just built.

Consider a message where the Fano approach falters. In “schoolroom,” O appears four times, and S/C/H/L/R/M each appear once. Fano’s balancing approach starts by assigning the O and one other letter to the left branch, with the five total uses of those letters balancing out the five appearances of the remaining letters. The resulting message requires 27 bits.

Huffman, by contrast, starts with two of the uncommon letters — say, R and M — and groups them together, treating the pair like a single letter.

Introduction

His updated frequency chart then offers him four choices: the O that appears four times, the new combined RM node that is functionally used twice, and the single letters S, C, H and L. Huffman again picks the two least common options, matching (say) H with L.

Introduction

The chart updates again: O still has a weight of 4, RM and HL now each have a weight of 2, and the letters S and C stand alone. Huffman continues from there, in each step grouping the two least frequent options and then updating both the tree and the frequency chart.

Introduction

Ultimately, “schoolroom” becomes 11101111110000110110000101, shaving one bit off the Fano top-down approach.

Introduction

One bit may not sound like much, but even small savings grow enormously when scaled by billions of gigabytes.

Related:

- How Shannon Entropy Imposes Fundamental Limits on Communication

- Researchers Defeat Randomness to Create Ideal Code

- The Scientist Who Developed a New Way to Understand Communication

- How Do You Prove a Secret?

Indeed, Huffman’s approach has turned out to be so powerful that, today, nearly every lossless compression strategy uses the Huffman insight in whole or in part. Need PKZip to compress a Word document? The first step involves yet another clever strategy for identifying repetition and thereby compressing message size, but the second step is to take the resulting compressed message and run it through the Huffman process.

Not bad for a project originally motivated by a graduate student’s desire to skip a final exam.

Correction: June 1, 2023

An earlier version of the story implied that the JPEG image compression standard is lossless. While the lossless Huffman algorithm is a part of the JPEG process, overall the standard is lossy.

The Quanta Newsletter

Get highlights of the most important news delivered to your email inboxEmail

May 11th 2023

Ancient Androids: Even Before Electricity, Robots Freaked People Out

Automata—those magical simulations of living beings—have long enchanted the people who see them up close.

- Lisa Hix

Read when you’ve got time to spare.

Advertisement

More from Collectors Weekly

- Fun Delivered: World’s Foremost Experts on Whoopee Cushions and Silly Putty Tell All

- Mudlarking the Thames for Relics of Long-Ago Londoners

- How a Small-Town Navy Vet Created Rock’s Most Iconic Surrealist Posters

Advertisement

The term “robot” was coined in the 1920s, so it’s tempting to think of the robot as a relatively recent phenomenon, less than 100 years old. After all, how could we bring metal men to life before we could harness electricity and program computers? But the truth is, robots are thousands of years old.

The first records of automata, or self-operating machines that give the illusion of being alive, go back to ancient Greece and China. While it’s true none of these ancient androids could pass the Turing Test, neither could early 20th-century robots—it’s only in the last 60 years that scientists began to develop “artificial brains.” But during the European Renaissance, machinists built life-size, doll-like automata that could write, draw, or play music, producing the startling illusion of humanity. By the late 19th century, these magical machines had reached their golden age, with a wide variety of automata available in high-end Parisian department stores, sold as parlor amusements for the upper middle class.

One of the largest publicly held collections of automata, including 150 such Victorian proto-robots, lives at the Morris Museum in Morristown, New Jersey, as part of the Murtogh D. Guinness Collection, which also features 750 mechanical musical instruments, from music boxes and reproducing pianos to large orchestrions and band organs.

Top: Swiss mechanician Henri Maillardet, an apprentice of Pierre Jaquet-Droz, built this boy robot, the Draughtsman-Writer, circa 1800. His automaton could write four poems and draw four sketches. Above: A drawing by Maillardet’s automaton. (Via the Franklin Institute of Philadelphia)

“Murtogh Guinness was one of the elders of the Guinness brewing family based in Dublin, Ireland,” says Jere Ryder, an automata expert and the conservator of the Guinness Collection. “Guinness traveled extensively throughout the world with his parents, and they had homes around the globe. But just after World War II, he decided he loved New York City best—with its opera, ballet, Broadway shows, and antiques—so he established a permanent residence there. That’s when he rediscovered mechanical music and automata and started to collect them with a passion.” Guinness died at age 89 in January 2002, and a year later, his collection was awarded to the Morris Museum.

Ryder’s connection to Murtogh Guinness goes way back. His parents, Hughes and Frances Ryder, were collectors and members of the Musical Box Society International. In the late 1950s, Guinness, then in his 40s, had learned of their music-box collection. When Jere and his brother, Stephen, first met the Guinness heir, they were toddlers.

“Guinness—who never drove himself anywhere—had to call a taxi to bring him all the way out to New Jersey from New York City,” Ryder says, remembering that first meeting. “He came knocking on our door in the evening. I was about 2 years old, and my brother, Steve, was just a year older. That was the night Guinness first met my father and my mother, and they struck up a lifelong friendship. He and my dad both had a passion for collecting these things. Of course, our family was not in the same league, collecting-wise. Mr. Guinness had the wherewithal to take it to a whole different level.” Given his early exposure, it’s no wonder Ryder would go on to apprentice with automata makers in Switzerland, repair and sell automata for a living, and write extensively on the subject with his brother.

Pierrot writes his love, Columbine, under the light of an oil lamp in this 1875 scene by Parisian automata manufacturer Vichy. At the time, artisans building automata would have been working under oil or gas lamps. (Via the Murtogh D. Guinness Collection at the Morris Museum)

In May 2018, the Morris Museum hosted its second-ever AutomataCon, which brought together 300 makers, collectors, and fans of all things automata. The convention corresponded with an annual exhibition of modern-day kinetic art. Of course, a rotating exhibition of half-a-dozen pieces from the Guinness Collection are on display at the museum year-round, and live demonstrations of selected machines take place at 2 p.m., Tuesdays through Sundays [at the time of this writing in 2018].

“In keeping with Murtogh Guinness’ wishes, we provide regular demonstrations of selected instruments and automata,” says Michele Marinelli, the curator of the collection. “These are moving pieces. They need to be seen and heard.”

The demonstrations always draw a crowd of gawkers. “When people today first see these things operate or hear them play, they’re just mesmerized,” Ryder says. “If people are in the next room and you turn one on, it’s like a magnet. They ask, ‘When were these made?’ We tell them, ‘Before electric lights.’

“These were the state-of-the-art entertainment devices of their day,” Ryder continues. “Back then, people didn’t have iPods, or even radios. The phonograph hadn’t been invented. Think of that. Now, place yourself in that period and imagine you’re watching or hearing this technology. At the Morris Museum, we try to remove people from the cacophony of today’s electronics to help them imagine the impact of these machines.”

Jacquemarts, or “jacks-of-the-clock,” also known as bellstrikers, were among the earliest clockwork automata. This jacquemart is on St. Peter’s Collegiate Church in Leuven, Belgium. Photo via WikiCommons

Automata—those magical simulations of living beings—have long enchanted the people who see them up close. Ancient humans first captured their own likenesses with paintings, sculptures, and dolls. Then, they made dolls that could move and, eventually, puppets.

“Man’s fascination with replicating human or living creatures’ characteristics is an ancient thing,” Ryder says. “Back in the earliest times, the makers of carved dolls started to use articulated limbs, with joints at the shoulders, knees, and hips in order to pose the dolls, before they had a way to mechanize the figures. It’s all an outgrowth of this human desire to see life replicated in a realistic manner.”

If you’ve ever watched the original “Clash of the Titans” and assumed Bubo the metallic owl was a preposterous 1981 Cold War anachronism, you might be surprised to learn that metal or wooden fowl were the stuff of legend for ancient Greeks, as much as Medusa and Perseus were. Around the globe, stories from mythology, religious scripture, and apocryphal historical texts describe wondrous moving statues, incredible androids with leather organs, and mobile metallic animals—particularly in temples and royal courts—but it’s hard to sort fact from fiction.

In the early 16th century, Leonardo da Vinci sketched a mechanical dove (inset), a concept made into a mechanical toy in the 19th century (main image). (From Leonardo’s Lost Robots)

For example, the Greek engineer Daedalus was said to have built human statues that walked by the magical power of “ quicksilver” around 520 BCE, but it’s more likely the statues appeared to move through the power of his clever engineering. In “ The Seventh Olympian,” 5th-century BCE Greek poet Pindar described the island of Rhodes as “The animated figures stand / Adorning every public street / And seem to breathe in stone, or / move their marble feet.”

Nor were automata a uniquely Western preoccupation. Around 500 BCE, King Shu in China is said to have made a flying wood-and-bamboo magpie (like Lu Ban’s bird a hundred years later, it was probably similar to a kite) and a wooden horse driven by springs, long before spring technology was perfected.

The Greek mathematician Archytas of Tarentum is credited with creating a wooden dove around 350 BCE that could flap its wings and fly 200 meters. It’s likely the device was connected to a cable and powered by a pulley and counterweight, but some have speculated it was animated by an internal system of compressed air or an early steam engine.

A display of two outflow water clocks, or clepsydrae, from the Ancient Agora Museum in Athens. The top is an original from the late 5th century BC. The bottom is a reconstruction of a clay original. (Via WikiCommons)

Besides the obvious connection to dolls and puppetry, automata were long connected to clock-making. In ancient times, that meant water-driven mechanisms, similar to fountains. The earliest timepiece, the clepsydra or water clock—developed as early as 1700s BCE and found in Babylonia, Egypt, and China—used the flow of water in or out of a bowl to measure time.

According to Mark E. Rosheim in his book, Robot Evolution, Greek inventor Ctesibius, also spelled Ktesibios, is thought of as the founder of modern-day automata. Around 280 BCE, and he started building water clocks that had moving figures, like an owl, and whose waterworks forced air into pipes to blow whistles. Essentially, he built the first cuckoo clock. Ctesibius also amused people with a hydraulic device that caused a fake blackbird to sing, as well as mechanical figures that appeared to move and drink.

The Morris Museum’s Jere Ryder explains that because none of these ancient devices survived, it’s hard to know how they worked. “The speaking heads or the talking animals might not have had articulated limbs,” he explains. “They were more like sculptures, which might have had water-driven pneumatic instruments to create guttural sounds of an animal. This could be accomplished by opening a sluice gate or tap so water could turn a wheel, which then turned cams on a cog that worked a bellows. Or perhaps a person, hidden out of sight, would talk through a tube. You’d be walking by this bronze or stone statue, and all of a sudden, realistic sounds would be emanating from it. The experience would have been magical, almost wizard-like. That’s why automata were sometimes regarded as witchcraft.”

A 2007 mechanical model based on the ancient Greek Antikythera machine. (Photo by Mogi Vicentini, WikiCommons)

According to historian Joseph Needham in his epic study of ancient Chinese engineering, Science and Civilisation in China, in the 3rd century BCE, Chinese engineers and mathematicians like Chang Hêng, who worked for the royal court, were focused on how to animate full-scale puppet shows. Han emperor Chhin Shih Huang Ti, as known as Qin Shi Huang, was said to have had a device that featured a band of a dozen 3-foot-tall bronze men that played real music, but it still required two unseen puppeteers to operate, one blowing into a tube for the sound and another pulling a rope for the movement.

Back in Greece, Ctesibius’ student, Philo (or Philon) of Byzantium, was a pioneer who advanced from pneumatics to steam-driven automata and other devices around 220 BCE, writing a book called Mechanike syntaxis . Only part of Philo’s work has survived. Unfortunately, the true depth of Greek and Roman engineering and the extent to which they employed steam power are unknown, as many records were destroyed in the centuries of wars after the fall of the Roman Empire.

The first real evidence of the ancient Greeks’ mechanical abilities was the discovery of the Antikythera mechanism, dated between 205 and 60 BCE. This clock mechanism, which used 30 bronze gears and cams, is thought of as the first computer and may have been employed to operate automata.

This sketch, based on Hero of Alexandra’s writings describing water- and weight-driven bird automata, appeared in Sigvard Strandh’s 1989 book, The History of the Machine.

The earliest full-length book of Greek robots that’s survived is On Automatic Theaters, on Pneumatics, and on Mechanics, written circa 85 CE, by the inventor Hero (or Heron) of Alexandria. In his treatise, Hero describes mechanical singing birds, robot servants that pour wine, and full-scale automated puppet theaters that employed everything from weights and pulleys to water pipes, siphons, and steam-driven wheels. Mostly, the automata executed simple, repetitive motions. Because Romans kept human slaves and servants to do hard labor and menial tasks, apparently no one thought to give robots actual work.

Since Hero’s writings left out certain details and didn’t include drawings, depictions of his machines still require a lot of guesswork, explains Rosheim in Robot Evolution. For example, the Hero automaton known as “Hercules and the Beast” has been drawn showing the legendary hunk shooting a snake with a bow and arrow and, alternately, depicting Hercules pounding a dragon with a club. What we do know is the action depended on water draining into hidden vessels that served as counterweights.

This interpretation of Hero’s “Hercules and the Beast” was drawn in 1598 as an illustration for a translation of On Automatic Theaters. (Via Robot Evolution)

But progress on building robots in the Western World halted as the Roman Empire began to crumble around 117 CE. In the meantime, circa 3rd-7th century CE, according to Needham, the Chinese continued to develop elaborate puppet theaters with myriad figures of musicians, singers, acrobats, animals, and even government officials at work, which would move and make music. They were likely operated by water-driven wheels, and possibly underwater chains, ropes, or paddle wheels.

In the 600s, Chinese engineer Huang Kun, serving under Sui Yang Ti, described an outdoor mechanical puppet theater in the palace courtyards and gardens with 72 finely dressed figures that drifted on barges floating down a channel. To impress his guests, the emperor’s automata would stop to serve them wine. In Science and Civilization in China, Needham quotes Huang’s manual: “At each bend, where one of the emperor’s guests was seated, he was served with wine in the following way. The ‘Wine Boat’ stopped automatically when it reached the seat of a guest, and the cup-bearer stretched out its arm with the full cup. When the guest had drunk, the figure received it back and held it for the second one to fill again with wine. Then immediately the boat proceeded, only to repeat the same at the next stop. All these were performed by machinery set in the water.”

Medieval Chinese engineer Su Song designed this escapement for his famous astronomical clock tower that included jacquemart-type figures to announce the hours. (Via WikiCommons)

The Tu-Yang Tsa Pien ( Miscellaneous Records from Tu-Yang) has this intriguing story of automata in 9th century China: “A guardsman, Han Chih-Ho, who was Japanese by origin … made a wooden cat which could catch rats and birds. This was carried to the emperor, who amused himself by watching it. Later, Han made a framework which was operated by pedals and called the ‘Dragon Exhibition.’ This was several feet in height and beautifully ornamented. At rest there was nothing to be seen, but when it was set in motion, a dragon appeared as large as life with claws, beard, and fangs complete. This was presented to the emperor, and sure enough, the dragon rushed about as if it was flying through clouds and rain; but now the emperor was not amused and fearfully ordered the thing to be taken away.”

Naturally, Han feared for his life. “Han Chih-Ho threw himself upon his knees and apologized for alarming his imperial master, offering to present some smaller examples of his skill. The emperor laughed and inquired about his lesser techniques. So Han took a wooden box several inches square from his pocket, and turned out from it several hundred ‘tiger-flies,’ red in color, which he said was because they had been fed on cinnabar. Then he separated them into five columns to perform a dance. When the music started they all skipped and turned in time with it, making small sounds like the buzzing of flies. When the music stopped they withdrew one after the other into their box as if they had rank. … The emperor, greatly impressed, bestowed silver and silks on him, but as soon as he had left the palace he gave them all away to other people. A year later he disappeared and no one could ever find him again.”

In the 12th century, Isma’il Ibn al-Razzaz al-Jazari designed this water-driven miniature “robot band” that sat in a boat on a lake and played music for royal guests.

Around the same time, circa 800s-830s, the Khalif of Baghdad, Abdullah al-Manum, recruited three brothers known as Banū Mūsā to hunt down the Greek texts on mechanical engineering, including Hero’s On Automatic Theaters, on Pneumatics, and on Mechanics. The brothers wrote The Book of Ingenious Devices, which included both their own inventions, like an automatic flute player, and the ancient concepts they’d collected. The 9th century was something of a golden era of Muslim invention, with alchemists and engineers building impressive automata for Muslim rulers, including snakes, scorpions, and humans, as well as trees with metal birds that sang and flapped their wings. Around the same time, the Byzantine Emperor Constantine VII in Constantinople was said to have a similar tree as well as an imposing rising “ throne of Solomon” guarded by two roaring-lion automata.

By the 11th century, India had automata, too. According to History of Indian Theatre by Manohar Laxman Varadpande, a book on architecture, Samarangana Sutradhara, written by Parmar King Bhoja of Malava, describes miniature wooden automata called “das yantra” that decorated palaces and could dance, play musical instruments, or offer guests betel leaves. Other yantra were put in the service of mythological plays and acted out everything from war-making to love-making. Similarly, small humanoid automata were employed in royal residences and temples in Egypt.

Building on the works of Banū Mūsā, in the 12th century, Muslim polymath Isma’il Ibn al-Razzaz al-Jazari produced The Book of Knowledge of Ingenious Mechanical Devices, with lushly colored illustrations of previously invented devices and his own novel inventions. It describes the mechanics of water clocks with moving figures, robot bands, and tabletop automata. For example, al-Jazari’s Peacock Fountain, designed to aid in royal hand-washing, relied on a series of water vessels and floats. According to Rosheim, the water poured from the jewel-encrusted peacock’s beak into a basin. As the water drained into containers under the basin, float devices triggered little doors where miniature-servant automata appeared in a sequence, the first offering soap, the second a towel. Turning another valve caused the servants to retreat.

A drawing from Isma’il Ibn al-Razzaz al-Jazari’s The Book of Knowledge of Ingenious Mechanical Devices shows his concept for the Peacock Fountain, used for royal hand-washing.

The science of automata is thought to have re-emerged in Europe in the 13th century, thanks to the sketchbooks of the French artist Villard de Honnecourt, which describe several machines and automata such as singing birds and an angel that always turned to face the sun. De Honnecourt may have recorded some of the first jacquemarts, or “jacks-of-the-clocks,” automata activated to blow horns or strike bells on medieval-town clock towers. The Strasbourg Cock, built in France in 1352, features a prime example of the jacquemarts of this era: A rooster, one of 12 figures in rotation on an astronomical clock in the Cathedral of Our Lady of Strasbourg, would raise its head, flap its wings, and crow three times to announce its hour. In China, inventors continued to build more and more impressive water-wheel animated puppet theaters, as well as elaborate jacquemarts on their water clocks. But unfortunately, Joseph Needham explains, most records and examples of these mechanical advancements were destroyed by the conquering Ming Dynasty in 1368.

Besides clocks and puppet theaters, in Medieval and Renaissance Europe, automata were a key piece of aristocratic “ pleasure gardens,” which were the equivalent of modern-day fun houses, filled with slapstick booby traps. In the late 13th century, the Count Robert II of Artois (1250-1302), commissioned the first known pleasure garden at Hesdin in France. Walking through the maze, the Count’s guest would be startled by statues that spat water at them, fun-house mirrors, a device that smacked them in the head, a wooden garden hermit and metallic owl that spoke, other mechanical beasts, a guard automata that gave orders and hit them, a collapsing bridge, and other devices that shot out or dumped water, soot, flour, and feathers.

Hellbrunn Palace in Salzburg, Austria, still features trick fountains hidden in seats once used by guests of Prince-Archbishop Markus Sittikus for outdoor meals. (Via WikiCommons)

By the 16th and 17th centuries, a handful of eccentric gardens with fountain automata popped up around modern-day Italy, Germany, and France, like the Villa d’Este at Tivoli near Rome, which featured elaborate fountains and grottos as well as hydraulic organs and animated birds. Perhaps inspired by Hesdin, the Prince-Archbishop of Salzberg (now in Austria), Markus Sittikus von Hohenems, built a prank-filled “water park” at Hellbrunn Palace in the 1610s with water-powered automata and music, where guests would be startled by statues that squirted water in their faces and chairs that shot water on their butts. In 1750, more than 100 years after Sittikus’ death, a water-driven puppet theater, with more than 200 busy townspeople automata, was installed at the estate.

On a smaller scale than the fountain-filled pleasure gardens were the Gothic table fountains of the 14th and 15th centuries, which were like miniature animated puppet theaters, showpieces thought to have come to Western aristocrats through Byzantine and Islamic trade. Dozens of figures on the fountain would dance, play music, or spout wine or perfumed water. It’s believed that most of these devices were made of precious metal and later melted down. The one surviving example, made around 1320 to 1340 and now housed at the Cleveland Museum of Art, was a gift from the Duke of Burgundy to Abu al-Hamid II, the sultan of the Ottoman Empire.

This Gothic table fountain with small automata, now at the Cleveland Museum of Art, was thought to be a typical showpiece for European aristocrats in the 14th and 15th centuries. Because such animated fountains were made of precious metals, most were melted down. (Via the Cleveland Museum of Art)

Up until the 15th century, automata technology had been hindered by the limitations of hydraulic, pneumatic, and weight- and steam-driven motion. That changed with the introduction of steel-spring clockwork mechanisms. Previously, engineers had experimented with using tightly wound metal springs to drive automata and timepieces, but the rudimentary metalwork meant the mechanism might only work right once before breaking. In the 15th and 16th centuries, technological advances made in the steel-working foundries in Nuremberg and Augsburg, Germany, and in Blois, France, were a major breakthrough.

“It wasn’t until the 1400s that Europeans had the sufficient metal refining and foundry techniques to produce a spring that wouldn’t self-destruct,” Morris Museum’s Jere Ryder says. “As time went on, they refined the process further, and the quality of their materials improved.”

Where metalworking flourished, so did horological, or clock-making, technology. Starting around the 1430s, clockmakers in Europe, particularly in Germany and France, were producing key-wound spring-driven clocks. They continued to develop and improve upon clock mechanics throughout the Renaissance, adding more and more elaborate decorative flourishes. In BBC Four’s “ Mechanical Marvels: Clockwork Dreams,” science history professor Simon Schaffer explains that the time-keeping mechanism, which once needed a tower to contain it, got smaller and smaller until pocket watches could be made with tiny screws and gears that artisans meticulously hand-crafted.

The miniaturization of clockwork eventually led to companies like Bontems in Paris producing small musical automata like this singing-hummingbird box from 1890.Photo by WikiCommons

“Clockmakers were usually the technicians making automata,” the Morris Museum’s Jere Ryder says. “They had the access to the materials; they knew the clockwork mechanisms; they knew the drive systems that would be required. They had all the basic metalworking and metallurgical skills and knowledge at their disposal.”

Unlike the whimsical jacquemarts seen on public clock towers, these robots were strictly for the entertainment of royalty and aristocrats, and were only produced by the most trusted court inventors and artisans. “You have to remember, quality metals were a precious commodity, and you had to have somebody of great importance in your region grant you the access to those materials,” Ryder says. “These metals weren’t available to the masses for fear that they would be used to make arms for insurgents to rise up against the aristocracy. As an inventor, you had to be trustworthy because you were getting a potentially dangerous raw substance in your workshop that you could turn into weapons, and that would be a detriment to your patron.”

A page from Giovanni de Fontana’s sketchbook, The Book of Warfare Devices, from 1420.

Some inventors in the early 15th century were still conceptualizing automata through the older technologies of hand cranks and weights and pulleys. Giovanni (or Johannes) de Fontana produced a book of plans for animated monsters and devils that could spit fire, intended to debunk magicians. But it’s hard to say if Fontana successfully built any of these devices, which seem mechanically impractical and unlikely to work. In the mid-15th century, German mathematician and astronomer Johannes Müller von Königsberg, also known as Regiomontanus, is said to have built an iron mechanical fly and a wing-flapping eagle automata—possibly driven by clockwork—that accompanied the Holy Roman Emperor to the gates of Nuremberg, but there are no records of his designs for such machines.

Leonardo da Vinci’s sketchbooks show a full-size clockwork lion, supposedly a present for King Francois I in the 1510s. Witnesses claimed the mechanical lion, a symbol of Florence, approached the king, opened a “heart cavity” on its side, and revealed a Fleur-de-Lis, the symbol of the French monarchy. Da Vinci’s lion has been lost to history, but a replica was constructed by automata-maker Renato Boaretto for Chateau du Clos Luce, in Amboise, France, in 2009. According to Robot Evolution author Mark E. Rosheim, da Vinci’s notebooks offer hints that he was working on an android, dressed in a suit of arms, using a system of pulleys and cables based on his drawing of human musculature, possibly operated by a manual hand crank, as many of da Vinci’s inventions were. However, it’s unclear if he ever built the android, as the relevant pages of his sketchbook are missing.

The writings of Hero of Alexandria were finally translated from Greek into Latin in the 16th century. French engineer Salomon de Caus studied the work of Hero religiously and replicated the hydraulic-pneumatic singing bird concept. Other inventors relied on new developments in wind-up spring technology. In the service of Holy Roman Emperor Charles V in Spain, Italian clockmaker Juanelo Turriano—also known as Gianello Della Tour of Cremona and Giovanni Torriani—made several miniature clockwork robots to entertain the easily bored emperor, from flying birds to soldiers to musicians.

“In the Renaissance, only royalty and aristocrats would be able to afford automata, which they’d commission to show that they were more powerful than their neighbors,” Ryder says. “There was a lot of one-upmanship going on at that time. The owner of automata could assert he was important because he could command these miniature lifelike pieces with amazing clockwork mechanisms to perform at will, anytime he wanted them to. At that time, that was probably pretty darn impressive.”