The previous contents of this page are now in Psychology Archives I & II. There will be more posts on this page as and when appropriate.

January 1st 2023

That Feeling When You Have So Many Things to Do You Can’t Do Any of Them? Psychologists Have a Name for It, and a Solution

When your to-do list is so scary you can’t do any of it, that’s called overwhelm freeze. Psychology has a solution.

By Jessica Stillman, Contributor, Inc.com (@EntryLevelRebel)

It’s the season of joy and togetherness, which means, of course, I have approximately 687 unfinished items on my to-do list. There are (many) work projects to wrap up, gifts to buy, visits to prepare for, school holiday concerts to attend. The list feels endless–and paralyzing.

Logically, you’d think that when your to-do list was at its longest would be when you’d kick into high gear and start ticking things off like a productivity superstar. But in my personal experience, often the opposite happens. When my to-do list grows this long, I panic, my motivation tanks, and my brain fogs. I end up stressing more than accomplishing. Am I a weirdo?

Possibly, but not because of my to-do list panic, according to a recent, gratifying New York Times article. Apparently, having so many things to do that you can’t do any of them is a recognized psychological phenomenon with a name and, better yet, a suggested cure.

Memo to my brain: Your to-do list isn’t a saber-toothed tiger

In the article, I learn from writer Dana G. Smith that I am suffering from overwhelm freeze. Just from the name, that sounds about right, but Smith talks to Ellen Hendriksen, a professor at Boston University’s Center for Anxiety and Related Disorders, who explains that we freeze in the face of an overwhelming to-do list for the same reason our ancestors froze in response to a stalking predator.

“Our bodies react to threat the same way, whether the threat is external, like the proverbial saber-toothed tiger, or the threat is internal,” she said. So basically my brain is so stressed by the thought of everything I probably won’t have time to do that my prefrontal cortex, which should be planning, organizing, and generally orchestrating the show, just lies down and plays possum.

So what do I do about it?

Knowing that I am experiencing something called overwhelm freeze, and that it is common enough to merit its own terminology, is soothing. But I still have to figure out what to get for my impossible-to-buy-for father and when I am going to squeeze in that meeting with my accountant. Do psychologists have any practical advice on how to get my brain moving again?

As overwhelm freeze is a response to stress, all the usual stress-reducing techniques like deep breathing, physical exercise, and reminding yourself it’s normal and human to be less-than-perfect are a good place to start. Beyond that, Smith and the experts she talks to advise those paralyzed by their to-do lists to start small–very small.

“How do you eat an elephant? One bite at a time,” jokes University of Calgary professor and procrastination expert Piers Steel. No matter the size of the elephant, those individual bites should be so ridiculously small and concrete that you can’t possibly stress about them. I can feel my blood pressure rise when I contemplate a task like “buy presents for entire family” but it’s much harder to get worked up about “order cookbook for mom.”

Think about rewriting your to-do list as if you were giving “instructions to a teenager who doesn’t really want to do it, so you have to be really specific,” explains Steel. Which makes sense — my brain does feel quite a bit like a sulky 16-year-old these days.

Once you’ve got your grumpy teen-proof to-do list, the next step is to actually get started. The experts suggest beginning with something easy and pleasant to help you build momentum. For activities that promise absolutely zero joy, good old-fashioned self-bribery can help.

“If there’s an email you need to send that you keep putting off (and off and off), promise yourself ten guilt-free minutes of internet celebrity gossip afterward,” suggests Smith.

Whatever you need to do, get the ball rolling because the longer you let yourself stay frozen, the worse it’s likely to get. I guess I am off to order a cookbook then. What small task could you undertake to break your freeze?

December 17th 2022

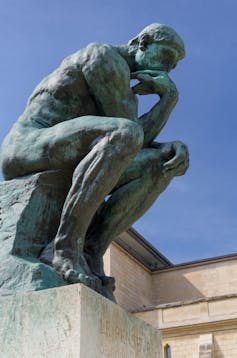

How to Stop Overthinking Everything

Deliberation is an admirable and essential leadership quality that undoubtedly produces better outcomes. But there comes a point in decision making where helpful contemplation turns into overthinking.

- Melody Wilding

Ghislain & Marie David de Lossy/Getty Images

As a product lead at a major technology company, Terence’s job is to make decisions. How should the team prioritize features to develop? Who should be staffed on projects? When should products launch? Hundreds of choices drive the vision, strategy, and direction for each product Terence oversees.

While Terence loved his job, making so many decisions caused him a lot of stress. He would waste hours in unproductive mental loops — analyzing variables to make the “right” choices. He would worry about the future and imagine all the ways a launch could go wrong. Then, he would beat himself up for squandering valuable time and energy deliberating instead of taking action. In other words, his thoughtfulness, which was typically a strength, often led him to overthink situations.

Terence is what I like to call a sensitive striver — a high-achiever who processes the world more deeply than others. Studies show that sensitive people have more active brain circuitry and neurochemicals in areas related to mental processing. This means their minds not only take in more information, but also process that information in a more complex way. Sensitive strivers like Terence are often applauded for the way they explore angles and nuance. But at the same time, they are also more susceptible to stress and overwhelm.

Deliberation is an admirable and essential leadership quality that undoubtedly produces better outcomes. But for Terence and others like him, there comes a point in decision making where helpful contemplation turns into overthinking. If you can relate, here are five ways to stop the cycle of thinking too much and drive towards better, faster decisions.

1. Put aside perfectionism

Perfectionism is one of the biggest blockers to swift, effective decision-making because it operates on faulty all-or-nothing thinking. For example, perfectionism can lead you to believe that if you don’t make the “correct” choice (as if there is only one right option), then you are a failure. Or that you must know everything, anticipate every eventuality, and have a thorough plan in place before making a move. Trying to weigh every possible outcome and consideration is paralyzing.

To curb this tendency, ask yourself questions like:

- Which decision will have the biggest positive impact on my top priorities?

- Of all the possible people I could please or displease, which one or two people do I least want to disappoint?

- What is one thing I could do today that would bring me closer to my goal?

- Based on what I know and the information I have at this moment, what’s the best next step?

After all, it’s much easier to wrap your head around and take action towards a single next step rather than trying to project months or years into the future.

2. Right-size the problem

Some decisions are worth mulling over, while others are not. Before you make a call, write down what goals, priorities, or people in your life will be impacted. This will help you differentiate between what’s meaningful and what’s not worth obsessing over.

Likewise, if you’re worried about the prospect of a decision bombing, try the 10/10/10 test. When the prospect of falling flat on your face seizes you, think about how you’ll feel about the decision 10 weeks, 10 months, or 10 years from now. It’s likely that the choice will be inconsequential or that you won’t even remember it was a big deal. Your answers can help you put things in perspective and rally the motivation you need to take action.

3. Leverage the underestimated power of intuition

Intuition works like a mental pattern matching game. The brain considers a situation, quickly assesses all your experiences, and then makes the best decision given the context. This automatic process is faster than rational thought, which means intuition is a necessary decision-making tool when time is short and traditional data is not available. In fact, research shows that pairing intuition with analytical thinking helps you make better, faster, and more accurate decisions and gives you more confidence in your choices than relying on intellect alone. In one study, car buyers who used only careful analysis were ultimately happy with their purchases about a quarter of the time. Meanwhile, those who made intuitive purchases were happy 60 percent of the time. That’s because relying on rapid cognition, or thin-slicing, allows the brain to make wise decisions without overthinking.

Terence, the product lead I mentioned earlier, was so intrigued by the idea of making decisions from his gut that he planned a “Day of Disinhibition” during which he followed his own intuition about everything he said and did for twenty-four hours. The result? Going with his gut gave him the courage to stop censoring himself and make tough calls, even when he knew it might upset some stakeholders. “It wasn’t just what I got done, but how I got it done, how quickly, and how I felt about it,” he later told me. “It put me in the best frame of mind to deal with whatever is in front of me.” Try the “Day of Disinhibition” experiment for yourself, or simply set aside a few minutes today and list three to five times you trusted your gut and whether the outcome was favorable.

4. Limit the drain of decision fatigue

You make hundreds of decisions a day — from what to eat for breakfast to how to respond to an email — and each depletes your mental and emotional resources. You’re more likely to overthink when you’re drained, so the more you can eliminate minor decisions, the more energy you’ll have for ones that really matter.

Create routines and rituals to conserve your brainpower, like a weekly meal plan or capsule wardrobe. Similarly, look for opportunities to eliminate certain decisions altogether, such as by instituting best practices and standardized protocols, delegating, or removing yourself from meetings.

5. Construct creative constraints

You may be familiar with Parkinson’s Law, which states that work expands to fill the time we allow for it. Put simply, if you give yourself one month to create a presentation, it will take you one full month to finish it. But if you only had a week, you’d finish the same presentation in a shorter time.

I’ve observed a similar principle among sensitive strivers — that overthinking expands to the time we allow it. In other words, if you give yourself one week to worry about something that is actually a one-hour task, you will waste an inordinate amount of time and energy.

You can curb this tendency by creating accountability through creative constraints. For example, determine a date or time by which you’ll make a choice. Put it in your calendar, set a reminder on your phone, or even contact the person who is waiting for your decision and let them know when they can expect to hear from you. A favorite practice of my clients is “worry time,” which involves earmarking a short period of the day to constructively problem solve.

Above all, remember that your mental depth gives you a major competitive advantage. Once you learn to keep overthinking in check, you’ll be able to harness your sensitivity for the superpower that it can be.

Melody Wilding, LMSW is an executive coach and author of Trust Yourself: Stop Overthinking and Channel Your Emotions for Success at Work.

December 15th 2022

A Healthy Social Life Goes Beyond Friends and Family

When we have a variety of social interactions—with not just intimates, but acquaintances and strangers—we may be happier and healthier for it.

By Jill Suttie | December 5, 2022

Like many people, in terms of socializing, I prioritize making time for my closest friends and family. When it comes to reaching out to people I don’t know as well, I have a harder time and often find myself reluctant to engage—maybe because I’m introverted or just plain busy.

This could be a big mistake, though, according to a new study. Having a variety of different types of social interactions seems to be central to our happiness—something many of us discovered firsthand during the pandemic, but may already have forgotten.

In a series of surveys (done pre-pandemic), researchers looked at how having a socially diverse network related to people’s well-being. Just to be clear, they weren’t looking at racial, ethnic, or gender diversity, but how much people interacted with different types of social contacts—friends, family, colleagues, neighbors, classmates, community members, etc.

In one survey, 578 Americans reported on what activities they’d been engaged in, with whom, and for how long over the past 24 hours, while also saying how happy and satisfied with life they were. The researchers then gave them a score for “social diversity” based on the variety of social contacts they’d had and the length of time spent with each type of contact.

After analyzing the results, they found that people with more diverse social networks were happier and more satisfied with life than those with less diverse networks—regardless of how much time they’d spent socializing overall. This pattern held even after taking into account things like a person’s gender, age, employment status, and other potential influences on happiness.

Having a wider set of social contacts seems to be important for happiness, says lead researcher Hanne Collins of Harvard Business School.

“The more you can broaden your social portfolio and reach out to people you talk to less frequently—like an acquaintance, an old friend, a coworker, or even a stranger in the grocery store—the more it could have really positive benefits for your well-being,” she says.

To further test this idea, she and her colleagues looked at large data sets from the American Time Use Survey (which provided detailed information from over 19,000 Americans about what activities they engaged in during a typical day) and the World Health Organization’s Study on Global Aging and Adult Health (which did the same for 10,447 respondents from China, Ghana, India, Mexico, the Russian Federation, and South Africa).

In both cases, they found that when people had a broader range of social interactions, they experienced greater happiness and well-being—including better physical health. These gains in well-being went above and beyond gains related to how active people were or what country they were from or other demographics—meaning the results seemed to apply to people from all stations of life and from many cultures.

“Across many measures, many populations, and many different studies, we’re finding the same kind of positive association between a greater social portfolio and well-being,” says Collins.

In this type of analysis, though, it’s hard to tell whether social diversity leads to happiness or if happier people just attract more diverse social contacts. To try to get at that, Collins and her colleagues did another analysis, using data from a mobile app that 21,644 French-speaking people used to report on their daily social activities and happiness.

There, they found that when someone experienced greater-than-average social diversity one week, they were happier that week and the week after—independent of how active or social they were overall. This finding probably jibes with people’s own experience during the pandemic, Collins adds.

“The pandemic narrowed people’s social portfolios, in terms of having different relationship partners to talk to, and they missed that wider network,” she says. “We benefit from having access to more distant others.”

Why is that? Other research supports the importance of “weak ties” in our social networks—and how interacting with a stranger can be more pleasurable than we predict. It could be that being with different people elicits different kinds of emotions, says Collins, and that emotional variety may be a driving force in our happiness.

Alternatively, it could be that having a more diverse network allows you to get different kinds of social support when you need it—whether that’s emotional or financial support from friends or family, or informational and practical support from an acquaintance (like letting you borrow a tool or helping you find a job). Having various kinds of social support can be tied to well-being, too, she says.

Whatever the case, Collins hopes her research will spur people to expand their social networks when they can. She suggests reaching out to old friends, joining a class, having an extra meeting with a colleague to touch base, or chatting with the grocery store cashier. Especially after going through the isolation imposed during the pandemic, she says, getting back to a wider set of social interactions could really help improve people’s well-being.

“As we try to find out what a ‘new normal’ looks like, recovering our diverse social portfolios may be really impactful for people,” she says. “Just trying to be conscious about who you’re talking to and making the effort to foster moments of connection with people with less obvious access to your life could be powerful.”

About the Author

Jill Suttie, Psy.D., is Greater Good’s former book review editor and now serves as a staff writer and contributing editor for the magazine. She received her doctorate of psychology from the University of San Francisco in 1998 and was a psychologist in private practice before coming to Greater Good.

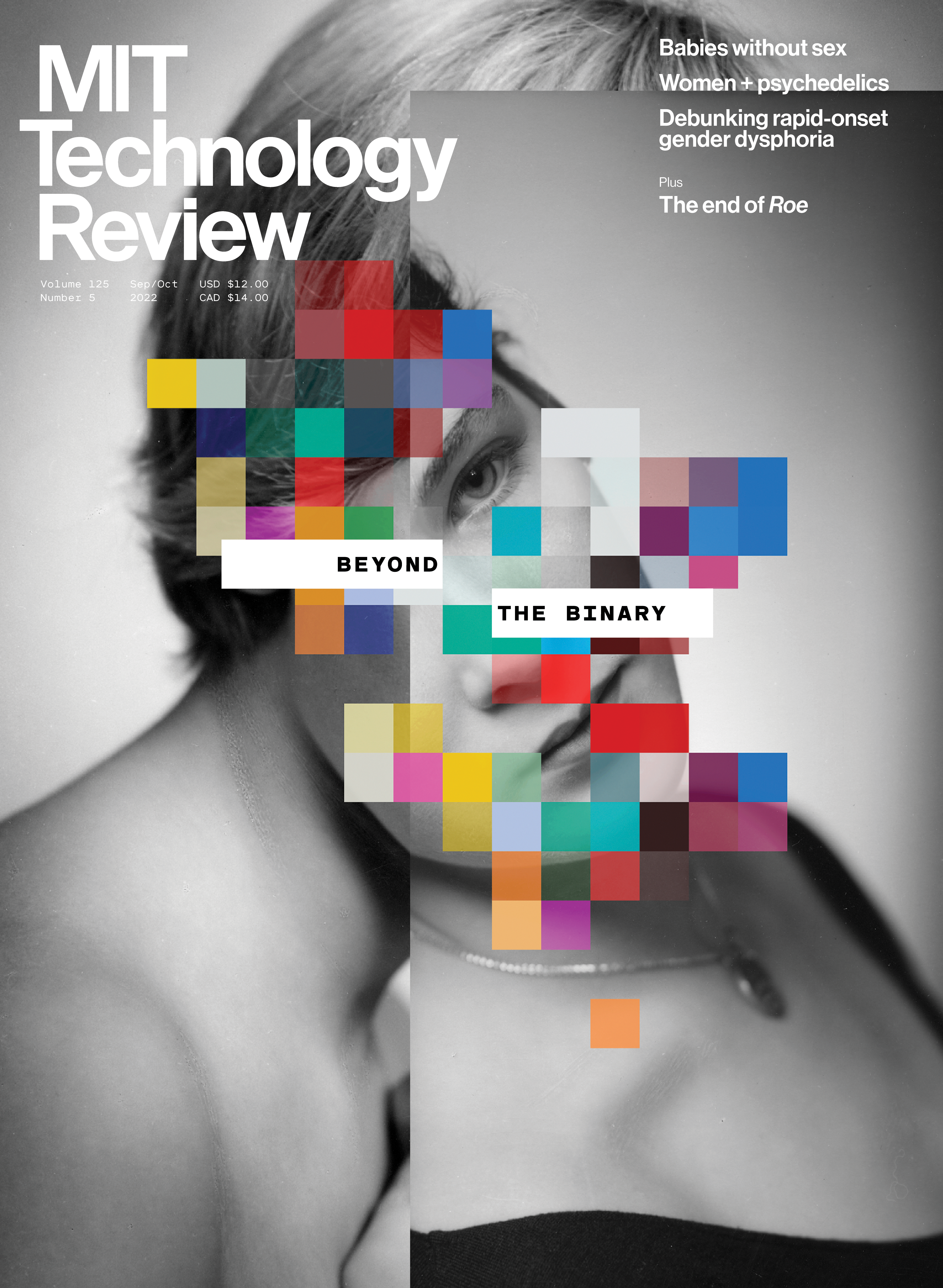

November 10th 2022

Psychosis (young people)

Medication for Psychosis

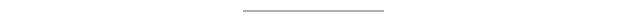

Most people had been prescribed a number of different medications over the time they had been having psychotic experiences. These included:

- Anti-psychotics (e.g. olanzapine, risperidone, quetiapine, aripiprazole)

- Anti-depressants (e.g. citalopram)

- Benzodiazepines (e.g. diazepam, lorazepam, clonazepam)

These medicines are usually prescribed by a psychiatrist, and should be regularly monitored. Finding a medication that works can often involve trial and error, and it can take months to find the right dosage or best combination of medications to suit a person. Most people find medications that are helpful for their symptoms, although a few of the young people we spoke to said they had never found ones that suited them.

Green Lettuce tried many different medications and said that most didn’t help. Seroquel (quetiapine) only made the voices go away for a short time but diazepam helped a lot.

Effects of taking medication for psychosis

Some people found medication provided a short relief from their psychotic experiences. Others found it stopped hallucinations, delusions and paranoia for longer periods or reduced them and it could also “kick start” their recovery. Quetiapine (antipsychotic) helped reduce the number of voices Dominic heard from seven to three, and helped him to sleep. However, most people felt that medication was a part of the solution rather than solving everything. Nikki says medication takes her from “bad to not as bad” and she uses self-help techniques to get herself from “not as bad to better”: “So it doesn’t do all the work, but it helps”. People we spoke to were often prescribed different types of medication (anti-depressants, benzodiazepines and anti-psychotics) together and some were able to compare the effects of each. Green Lettuce says benzodiazepines like lorazepam and diazepam help him more than the antipsychotics and diazepam has longer lasting effects. Some felt that anti-depressants interfered with anti-psychotics or made them feel worse. Andrew X, whose psychosis is linked to depression and low mood, finds that anti-depressants make the low mood worse before they make things better.

Nikki prefers taking anti-depressants to taking anti-psychotics. They make her feel “lighter” and she feels a little less suicidal.

October 21st 2022

Sins of the mothers in today’s feminist broken homes.

10 Proven Ways to Learn Faster to Boost Your Math, Language Skills and More Quickly

Neuroscience has taught us a lot about how our brains process and hold on to information.

By Deep Patel | November 21, 2018 | Updated: September 14, 2022

Opinions expressed by Entrepreneur contributors are their own.

Learning new things is a huge part of life — we should always be striving to grow and learn a new skill. Whether you’re learning Spanish or want to do math fast, it takes time to learn each lesson, and time is precious. So how can you make the most of your time by speeding up the learning process? Thanks to neuroscience, we now have a better understanding of how we learn and the most effective ways our brains process and hold on to information.

If you want to get a jump start on expanding your knowledge, here are 10 proven ways you can start being a quick learner.

1. Take notes with pen and paper.

Though it might seem that typing your notes on a laptop during a conference or lecture will be more thorough, thus helping you learn faster, it doesn’t work that way. To speed up your learning, skip the laptop and take notes the old-fashioned way, with pen and paper. Research has shown that those who type their lecture notes process and retain the information at a lower level. Those who take notes by hand actually learn more.

While taking notes by hand is slower and more cumbersome than typing, the act of writing out the information fosters comprehension and retention through muscle memory. Reframing the information in your own words helps you retain the information longer, meaning you’ll have better recall and will perform better on tests.

2. Have effective note-taking skills.

The better your notes are, the faster you’ll learn. Knowing how to take thorough and accurate notes will help you remember concepts, gain a deeper understanding of the topic and develop meaningful learning skills. So, before you learn a new topic, make sure you learn different strategies for note taking, such as the Cornell Method, which helps you organize class notes into easily digestible summaries.

Whatever method you use, some basic tips for note-taking include:

- Listen and take notes in your own words.

- Leave spaces and lines between main ideas so you can revisit them later and add information.

- Develop a consistent system of abbreviations and symbols to save time.

- Write in phrases, not complete sentences.

- Learn to pull out important information and ignore trivial information.

3. Distributed practice.

This method involves distributing multiple practices (or study sessions) on a topic over a period of time. Using short, spaced-out study sessions will encourage meaningful learning, as opposed to long “cram sessions,” which promote rote learning. The first step is to take thorough notes while the topic is being discussed. Afterward, take a few minutes to look over your notes, making any additions or changes to add detail and ensure accuracy.

Do this quickly, once or twice following each class or period of instruction. Over time, you can begin to spread the sessions out, starting with once per day and eventually moving to three times a week. Spacing out practice over a longer period of time is highly effective because it’s easier to do a small study session and you’ll stay motivated to keep learning.

4. Study, sleep, more study.

You have a big project or a major presentation tomorrow and you’re not prepared. If you’re like many of us, you stay up too late trying to cram beforehand. Surely your hard work will be rewarded, even if you’re exhausted the next day… right? However, that’s not the most efficient way for our brains to process information.

Research shows a strong connection between sleep and learning. It seems that getting some shut-eye and taking short breaks are important elements in bolstering how our brains remember something. Deep sleep (non-rapid-eye-movement sleep) can strengthen our long-term memory if the sleep occurs within 12 hours of learning the new information. And students who both study and get plenty of sleep not only perform better academically; they’re also happier.

5. Modify your practice.

If you’re learning a skill, don’t do the same thing over and over. Making slight changes during repeated and deliberate practice sessions will help you master a skill faster than doing it the same way every time. In one study of people who learned a computer-based motor skill, those who learned a skill and then had a modified practice session where they practiced the skill in a slightly different way performed better than those who repeated the original task over and over.

This only works if the modifications are small — making big changes in how the skill is performed won’t help. So, for instance, if you’re practicing a new golf swing or perfecting your tennis game, try adjusting the size or weight of your club or racket.

6. Try a mnemonic device.

One of the best ways to memorize a large amount of information quickly is to use memory techniques like a mnemonic device: a pattern of letters, sounds or other associations that assist in learning something. One of the most popular mnemonic devices is one we learned in kindergarten — the alphabet song. This song helps children remember their “ABCs,” and it remains deeply ingrained in our memory as adults. Another is “i before e except after c” to help us remember a spelling rule.

Mnemonics help you simplify, summarize and compress information to make it easier to learn a new word or new skill. They can be really handy for students in medical school or law school, or people studying a new language. So, if you need to memorize and store large amounts of new information, try a mnemonic and you’ll find you remember the information long past your test.

7. Use brain breaks to restore focus.

Information overload is a real thing. In order to learn something new, our brains must send signals to our sensory receptors to save the new information, but stress and overload will prevent your brain from effectively processing.

When we are confused, anxious or feeling overwhelmed, our brains effectively shut down. You can see this happen when students listening to long, detailed lectures “zone out” and stop paying attention to what’s being said.

They simply aren’t able to effectively conduct that information into their memory banks, so learning shuts down. The best way to combat this is by taking a “brain break,” or simply shifting your activity to focus on something new. Even a five-minute break can relieve brain fatigue and help you refocus.

8. Stay hydrated.

We know we should drink water because it’s good for us — it’s good for our skin and our immune system, and it keeps our body functioning optimally. But staying hydrated is also key to our cognitive abilities. Drinking water can actually make us smarter. According to one study, students who took water with them to an examination room performed better than those who didn’t.

Dehydration, on the other hand, can seriously affect our mental function. When you fail to drink water, your brain has to work harder than usual.

9. Learn information in multiple ways.

When you use multiple ways to learn something, whether it’s language learning or speed reading, you’ll use more regions of the brain to store information about that subject. This makes that information more interconnected and embedded in your brain. It basically creates a redundancy of knowledge within your mind, helping you truly learn the information and not just memorize it.

You can do this through spaced repetition or by using different media to stimulate different parts of the brain, such as reading notes, reading the textbook, watching a video on social media and listening to a podcast or audio file on the topic. The more resources you use, the faster you’ll learn.

10. Connect what you learn with something you know.

The more you can relate new concepts to ideas that you already understand, the faster you’ll learn the new information. According to the book Make It Stick, many common study habits are counterproductive. They may create an illusion of mastery, but the information quickly fades from our minds.

Memory plays a central role in our ability to carry out complex cognitive tasks, such as applying knowledge to problems we haven’t encountered before and drawing inferences from facts already known. By finding ways to fit new information in with preexisting knowledge, you’ll find additional layers of meaning in the new material. This will help you fundamentally understand it better, and you’ll be able to recall it more accurately.

Elon Musk, founder of Tesla and SpaceX, uses this method. He said he views knowledge as a “semantic tree.” When learning new things, his advice is to “make sure you understand the principles, i.e., the trunk and big branches, before you get into the leaves/details or there is nothing for them to hang on to.” When you connect the new to the old, you give yourself mental “hooks” on which to hang the new knowledge.

October 17th 2022

Why Talented People Don’t Use Their Strengths

We often undervalue what we inherently do well.

- Whitney Johnson

Photo by: cintascotch/Getty Images

If you’ve watched the Super Bowl in recent years, you’ve probably seen the coaches talking to each other over headsets during the game. What you didn’t know is that during the 2016 season, the NFL made major league-wide improvements to its radio frequency technology, both to prevent interference from media using the same frequency and to prevent tampering. This was a development led by John Cave, VP of football technology at the National Football League. It’s been incredibly helpful to the coaches. But it might never have been built, or at least Cave wouldn’t have built it, had it not been for his boss, Michelle McKenna-Doyle, CIO of the NFL.

When McKenna-Doyle was hired, she observed that a number of her people were struggling, but not because they weren’t talented — because they weren’t in roles suited to their strengths. After doing a deep analysis, she started having people switch jobs. For many, this reshuffling was initially unwelcome and downright uncomfortable. Such was the case with Cave.

Cave had the talent to create products and build things. But he didn’t have time to do it, because he had the big job of system development, including enterprise systems. “Why was he weighed down with the payroll system when he could figure out how to evolve the game through technology?” McKenna-Doyle asked. As she later explained to me, she envisioned a better role for his distinctive strengths. The coaches wanted to talk to each other. The technology didn’t exist. She tasked Cave with creating it. “At first, he was concerned, because his overall span was shrinking. ‘Just trust me,’ I said. ‘You’re going to be a great innovator,’ and he is.”

Experts have long encouraged people to “play to their strengths.” And why wouldn’t we want to flex our strongest muscle? But based on my observations, this is easier said than done. Not because it’s hard to identify what we’re good at. But because we often undervalue what we inherently do well.

Often our “superpowers” are things we do effortlessly, almost reflexively, like breathing. When a boss identifies these talents and asks you to do something that uses your superpower, you may think, “But that’s so easy. It’s too easy.” It may feel that your boss doesn’t trust you to take on a more challenging assignment or otherwise doesn’t value you — because you don’t value your innate talents as much as you do the skills that have been hard-won.

As a leader, the challenge is not only to spot talent but also to convince your people that you value their talents and that they should, too. This is how you start to build a team of employees who bring their superpowers to work.

Begin by identifying the strengths of each member of your team. Some of my go-to questions are:

What exasperates you? This can be a sign of a skill that comes easily to you, so much so that you get frustrated when it doesn’t to others. I’m weirdly good at remembering names, for example, and often get annoyed with others who don’t. I have a terrible sense of direction, however, and probably irritate other people who intrinsically sense which way is north.

What compliments do you dismiss? When we’re inherently good at something, we tend to downplay it. “Oh, it was nothing,” we say — and maybe it was nothing to us. But it meant something to another person, which is why they’re thanking you. Notice these moments: They can point to strengths that you underrate in yourself but are valuable to others.

What do you think about when you have nothing to think about? Mulling over something is a sign that it matters to you. Your brain can’t help but come back to it. If it matters to you that much, maybe you’re good at it.

In group settings, I’ll also ask people why they hired so-and-so — what that person’s genius is. Rarely is this a skill listed on their résumé.

When people bring up new ideas, you can ask them, Will this leverage what you do well? Are you doing work that draws on your strengths? Are we taking on projects that make the most of your strengths?

Once each person has identified their strengths, make sure everyone remembers them. Brett Gerstenblatt, VP and creative director at CVS, has his team take a personality assessment, then post their top five strengths on their desk. Brett wants people to wear their strengths like a badge. Not to tell others why they’re great, but to remind them to use them.

Diana Newton Anderson, an entrepreneur turned social good activist, shares a story of her college basketball coach, who had her team take shots from different places on the court: the key, the elbow, the paint. He would record their percentages, and then had every person on the team memorize those percentages. This would allow the team to literally play to each other’s strengths. You can do something similar with your team.

As with McKenna-Doyle, building a team that can play to their strengths begins with analysis. Observe people, especially when they are at their best. Because some will undervalue what they do well, it may be up to you to place a value on what they do best. Understanding and acknowledging each person’s strengths can be a team-building exercise. Then you can measure new ideas, new products, and new projects against these collective superpowers, asking: Are we playing to our strengths? When people feel strong, they are willing to venture into new territory, to play where others are not, and to consider ideas for which there isn’t yet a market.

How to Get Comfortable With Uncertainty and Change

When life is uncertain, our usual responses and coping strategies might not always work. The practice of mental agility can help us be resilient.

By Kira M. Newman | October 4, 2022

I recently moved to a new apartment, an occasion that calls for celebration—preferably outdoors in my brand-new backyard. But I didn’t expect how much being in a different space would disrupt my sense of safety. So I worried—about my cat escaping out the front door, how to protect my family from COVID, raccoon-transmitted diseases, and more.

After reading Elaine Fox’s new book Switch Craft: The Hidden Power of Mental Agility, I have a better idea of what’s going on. I fall into the category of someone who’s uncomfortable with uncertainty. I love a good routine, and moving disrupted all of mine. I have a need to feel in control of my circumstances, but just about everything in my immediate surroundings changed.

Maybe you fit this description, too, and you have trouble coping when life is full of unknowns or when things don’t turn out as you expected. According to Fox, what we need to cultivate is mental agility—a nimbleness in how we think, feel, and act that will allow us to adapt to changing circumstances.

Feeling uncomfortable with uncertainty

Uncertainty arises when we’re in new situations, like a move or a new job, or when we’re in unpredictable situations—like when we have a job interview, a medical test, an injury, or the possibility of layoffs at work.

Because our brains are future-predicting machines, it’s natural to want to avoid ambiguity. “As human beings, we crave security, and that is why all of us are intolerant of uncertainty to some extent,” writes Fox.

But some have this tendency more than others. For example, you might be intolerant of uncertainty if you love planning, hate surprises, and get frustrated when unexpected things mess up your day. Someone who has trouble with uncertainty might find it hard to make decisions in ambiguous circumstances, because they feel like they don’t have enough information and don’t want to make the wrong choice.

To avoid the discomfort of uncertainty, some of us engage in what Fox calls “safety behaviors”—things like making lots of lists, constantly double checking, overpreparing, or seeking reassurance from others. For example, you might read a restaurant menu in advance, or repeatedly check in on your kid to make sure they’re doing OK.

If you dislike uncertainty, you might also be a worrier, because worrying actually gives us a sense of control in a difficult situation—at least we’re doing something! You might also shy away from challenges that you could fail at, and lean on tried-and-true pathways in life.

The power of mental agility

To get more comfortable with uncertainty, we need to practice what Fox calls mental agility, or what psychologists call psychological flexibility. Research suggests that people who are more psychologically flexible have higher well-being and tend to be less anxious and worried.

Someone who is psychologically flexible is open to change, or may even find change exciting. When they’re working on a problem, they try lots of different solutions. They don’t see the world in black and white, they like to learn from others, and they often have some unusual ideas of their own.

Mental agility shines when we’re facing change, when things don’t go as expected, or when the future is particularly unpredictable, such as when travel plans fall through, during a divorce, or in a pandemic. At that moment, some people dig their heels in and keep doing what they’ve always done. But mentally agile people are able to recognize when what they’re doing isn’t working, and change things up.

“There is no one-size-fits-all solution to any of life’s problems,” writes Fox.

She likes the metaphor of using different clubs on a golf course, depending on whether you’re hitting a long shot, swinging from a bunker, or putting. “Life is exactly like that—we’re going to be faced with quite different types of problems and different types of obstacles to get around, and we need different approaches for all of those.”

It comes down to the choice of stick or switch: Should I keep pursuing the same thoughts, feelings, and actions, or do I need to switch to something new?

For example, she says, parents need a veritable smorgasbord of strategies to raise their children, everything from tough discipline and strict boundaries to treating kids to ice cream and a day off. Knowing when to use which one is a sign of healthy flexibility. The same goes for leaders at work, who might want to change the way they manage their employees when the company is going through a season of stress.

Switch Craft: The Hidden Power of Mental Agility (HarperOne, 2022, 352 pages).

Coping strategies are another good example. Psychologists like to group them into two main types: emotion-focused and problem-focused. Emotion-focused strategies change the way we feel, like distracting ourselves, getting support from friends, or looking at the situation from a different perspective. Problem-focused strategies, on the other hand, involve taking action to solve the problem directly.

No one strategy works all the time, and you’ll often see people get stuck in their favorite way of coping. If you tend toward distraction and denial, you might avoid dealing with a problem that you actually could have solved; if you’re an inveterate problem-solver, you might feel helpless and angry when confronting a problem—or a loved one’s—that has no solution, when all that’s really needed is support and connection.

How to cultivate mental agility

Fox’s book is full of tips to cultivate mental agility, as well as other related skills that can help you roll with the punches in life. Here are a few that felt most practical and new to me.

Surrender to transitions. When something changes in your life—you leave a job, end a relationship, or lose someone you love—recognize that you’re now in a transition. Transitions take time to move through, and they can’t be rushed. Your identity (as an employee, partner, or friend, perhaps) will have to shift and change, as well. Be kind and accepting, and don’t expect too much of yourself as you struggle through this time.

Prepare for change in advance. Sometimes change is unexpected, and other times you see it coming. When you anticipate a big change in life, spend some time exploring your feelings around it. You can list all the ways your life will change, and identify the ones that are causing you anxiety. Give yourself the opportunity to mourn what you will leave behind, but also devote some of your attention to new opportunities that you’re excited about.

Seek out small uncertainties. You can build up your tolerance for uncertainty, Fox explains, by gradually exposing yourself to it on purpose. For example, you could reach out to an acquaintance you haven’t seen in a while, try bargaining for an item you want to buy, or check social media less frequently.

Change up your perspective. One way to do this is to find something small that annoys you, and try to see the silver lining to it. For example, maybe your commute got longer, but that means you have extra time to listen to podcasts.

When you’re facing a problem, you could change your perspective by brainstorming a handful of solutions, rather than trying to figure out the perfect correct one. Or make a list of people you admire, and ask yourself: What would they do in your place?

Ask a different question. When life is hard, we often find ourselves harping on “why” questions: “Why is this happening to me?” In those moments, Fox suggests letting go of the “why” and asking “how” instead: “How can I change this situation?” Or perhaps you’re already asking a “how” question, but the wrong one: Instead of “How do I stop working so much?,” she explains, try an easier question: “How can I find time to go to the gym?”

Move past worry. Repetitive worrying is one of the most common rigid thought patterns we get stuck in. To break free from it, identify whether the problem you’re worrying about is solvable or not—and take action if you can. If there’s nothing you can do, Fox suggests recording yourself talking in detail about your worries, and then listening to the recording repeatedly until your worries don’t have as much of a hold on you.

It’s been about two months since my move, and my brain has calmed down about all the changes. (Surrender to transitions—got it.) I definitely see the appeal of being someone who moves through life agilely and with curiosity, letting things happen as they may and feeling confident I’ll figure out how to deal with them. Lists gripped tightly in hand, I have trouble ever imagining myself that way.

But Fox’s book helped put a name and an explanation on something I struggle with, so at least I have a goal to aspire to. Since reading it, I have noticed my knee-jerk resistance to plans changing or doing things someone else’s way, and I have been able to let go. I doubt anyone will ever call me spontaneous and easygoing, but at least I can make a point to expect the unexpected in life.

About the Author

- Kira M. Newman is the managing editor of Greater Good. Her work has been published in outlets including the Washington Post, Mindful magazine, Social Media Monthly, and Tech.co, and she is the co-editor of The Gratitude Project.

October 15th 2022

Neuropsych — October 13, 2022

Opening the Stasi files: Would you read the secrets your government kept on you?

What if your best friend was an informant?

Key Takeaways

- In 1991, the German government allowed the public to open the “Stasi files” that the East German secret police had kept on them.

- It’s estimated that fewer than half of those who thought they had files applied to see them. The majority didn’t want to know.

- One of the biggest reasons given for not finding out was that people were worried that their present-day relationships would be damaged if they learned that others close to them were informants.

An interesting area of “deliberate ignorance” concerns once-secret government data on individuals. Would you want to know what spies and surveillance teams have found out about you? Are you curious as to what it would say? As it happens, there’s a research paper about just that.

Opening the Stasi files

In the decades after World War II, East Germany was a fearful place of suspicion, surveillance, and spies. The Communist state’s secret police, the Stasi, wiretapped, bugged, and tracked citizens on an enormous scale. By the time the Berlin Wall came down in 1989, the Stasi had over 90,000 employees and 200,000 informants (that we can estimate). While the Stasi disappeared — reabsorbed into a healing, reunited nation — the millions of pages of information they had collected on people did not.

In 1991, the reunified German government passed a bill that allowed people to access and view the Stasi files that were kept on them. What do you think people did? What would you do? A lot of people wanted to know. By 2020, 2.17 million people had applied to see their files. But that’s not as many as you might have thought. Given that an estimated 5.25 million people in Germany believe they have a file to see, that means that more than half chose not to see their Stasi files. So, why didn’t all those people want to know?

The study revealed that the vast majority of people simply thought the information on them wasn’t relevant. The fact that they read “capitalistic literature” or fraternized with certain unsavory characters simply didn’t matter anymore in 1990s Germany. What’s more interesting, though, is the next largest reason: People didn’t want to know if their friends, family, or colleagues were informants.

Trust issues

The Stasi files research reveals that people did not want to ruin the relationships they had in their present lives. Imagine, for instance, that you opened a file and found information that only your spouse or a very close family member could have divulged. It would ruin that relationship forever. If you discovered that your best friend’s name appeared at the top of an “informants” document, how would that change your friendship? For a society that had been shrouded in suspicion and mistrust for so long, the opening up of personal Stasi files served only to erode that trust further.

What this also teaches us is just how far people are willing to forgive, or at least forget, the wrongdoings of those “on the other side.” It’s something that a lot of us do not think about in the 21st century. When Nazi Germany fell to the Allies, the thousands of bureaucrats of the Nazi machine simply found new work in the new country. Few to no questions were asked. Thousands of informants, party members, enablers, and soldiers were reabsorbed into a healing society (although the most notorious were either arrested or subjected to “denazification”). The same story was told in Vichy France, as well as all Nazi-occupied countries across Europe. It’s a story being told even today where you find sudden, bloody regime change such as in Afghanistan or after the Arab Spring.

For a country to heal, perhaps there is a necessary element of “deliberate ignorance” — a no-questions-asked policy. It’s something mirrored, on a smaller scale, in the wake of elections in democracies. Invariably, the winning incumbent’s speech will be one of reconciliation, renewal, and getting on with the job. It was a hard, vitriolic battle, but let’s move on now. The case of the Stasi files reveals how much this is ingrained in our collective ability and desire to forget.

Jonny Thomson teaches philosophy in Oxford. He runs a popular account called Mini Philosophy and his first book is Mini Philosophy: A Small Book of Big Ideas.

October 11th 2022

There are 3 main attachment styles in every relationship—here’s the ‘healthiest’ type, says therapist

Published Wed, Sep 28 2022, 10:36 AM EDT. Updated Thu, Sep 29 2022, 3:33 PM EDT

John Kim, Contributor, @angrytherapist

Katelyn Dubose | Getty

Our attachment style is shaped and developed in early childhood by our relationships with our parents.

According to attachment theory, first developed by psychologist Mary Ainsworth and psychiatrist John Bowlby in the 1950s, we mirror the dynamics we had with our parents, or primary caregivers, as infants and children.

As a therapist who specializes in relationships, I’ve found that attachment style discussions are not typical until much later on in life, when we must start to examine our relationship patterns and connect the dots.

The 3 main attachment styles: Which one are you?

Attachment theory is nuanced, like humans are. Although it is a spectrum of four styles, common parlance refers to only three: anxious, avoidant and secure.

Studies show that people who are securely attached have the healthiest relationships, and it’s the type that everyone should strive for.

Understanding which style you fall under — and the specific details surrounding it — can help you take control of how you relate to other people, particularly in stressful situations.

1. Anxious attachment style

Anxious attachment is characterized by a concern that the other person, whether a significant other, friend or family member, will not reciprocate your level of availability.

This is generally caused when a child learns that their caregiver or parent is unreliable and does not consistently provide responsive care towards their needs.

I am anxiously attached. My parents came to America with very little money. They worked a lot and were more worried about paying the bills than creating a safe emotional space where secure attachments grew.

Anxious attachment types have a sense of unworthiness but generally evaluate others positively. As a result, they strive for self-acceptance by tying their worth to approval and validation from their relationships.

Knowing this about myself has been a game-changer in my current relationship. Instead of demanding, wanting more, feeling rejected and undesired, I can take ownership and remind myself that how I feel may not be the reality.

2. Avoidant attachment style

My partner Vanessa leans toward an avoidant attachment style. Children who fall under this category tend to avoid interaction with their parents, and show little or no distress during separation. The child may believe that they cannot depend on the relationship.

An avoidant attachment style shows up in adults who hold a positive self-image and a negative image of others. They prefer to avoid close relationships and intimacy in order to retain a sense of independence and invulnerability. It’s a way to hide and not truly show themselves.

The avoidant struggles with intimacy and expressing feelings, thoughts and emotions. They are often accused of being distant and closed off. The closer someone gets and the needier they seem to become, the more an avoidant withdraws.

Knowing that Vanessa has more of an avoidant attachment style makes me understand and listen to her more, instead of immediately jumping to blame.

3. Secure attachment style

People who are securely attached appreciate their own self-worth and ability to be themselves in their relationships. They openly seek support and comfort from their partner, and are similarly happy when their partner relies on them for emotional support.

During the childhood years, their caregivers made sure they felt valued, supported, heard and reassured. Here are some ways securely attached kids show up as adults:

- They are able to regulate emotions and feelings in a relationship.

- They show strong goal-oriented behavior when on their own.

- They don’t struggle with opening up and trusting others.

- They are comfortable being alone and use that time to explore their emotions.

- They have a strong capacity to reflect on how they are maneuvering in a relationship.

Secure attachment is what everyone is swimming towards, including Vanessa and me. But it takes awareness and practice.

The good news about attachment styles

We can become more and more securely attached as we experience healthy attachment habits in our adult relationships.

Because Vanessa is aware of her tendency to be avoidant, for example, she’s able to reflect on her emotional responses and see that they are mostly a knee-jerk reaction she’s adopted for protection. Then she can challenge herself to choose differently based on the kind of connection she truly wants.

We both give each other the space and the loving boundaries that we expect from one another.

Rewiring yourself to be more securely attached has to be a lifestyle, an everyday thing. Because as humans, we snap back if we are not intentional and just live by our default.

We all have our stories; no one has a perfect childhood. And it’s not about blaming or living in the past. It’s about looking at who we are now, and healing and evolving to become more secure.

John Kim, LMFT, is a therapist and life coach based in Los Angeles. He is also the author of “It’s Not Me, It’s You” and “Single on Purpose.” Follow him on Twitter and Instagram.

September 27th 2022

The big idea: should we drop the distinction between mental and physical health?

The current false dichotomy holds back research and stigmatises patients

Illustration: Elia Barbieri

Edward Bullmore

Mon 12 Sep 2022 12.30 BST. Last modified on Tue 13 Sep 2022 02.50 BST

A few months ago, I was infected by coronavirus and my first symptoms were bodily. But as the sore throat and cough receded, I was left feeling gloomy, lethargic and brain-foggy for about a week. An infection of my body had morphed into a short-lived experience of depressive and cognitive symptoms – there was no clear-cut distinction between my physical and mental health.

My story won’t be news to the millions of people worldwide who have experienced more severe or prolonged mental health outcomes of coronavirus infection. It adds nothing to the already weighty evidence for increased post-Covid rates of depression, anxiety or cognitive impairment. It isn’t theoretically surprising, in light of the growing knowledge that inflammation of the body, triggered by autoimmune or infectious disease, can have effects on the brain that look and feel like symptoms of mental illness.

However, this seamless intersection of physical and mental health is almost perfectly misaligned with the mainstream way of dealing with sickness in body and mind as if they are completely independent of each other.

In practice, physical diseases are treated by physicians working for medical services, and mental illnesses are treated by psychiatrists or psychologists working for separately organised mental health services. These professional tribes follow divergent training and career paths: medics often specialise to focus exclusively on one bit of the body, while psychs treat mental illness without much consideration of the embodied brain that the mind depends on.

We live in a falsely divided world, which draws too hard a line – or makes a false distinction – between physical and mental health. The line is not now as severely institutionalised as when “lunatics” were exiled to remote asylums. But the distinction remains deeply entrenched despite being disadvantageous to patients on both sides of the divide.

A 55-year-old woman with arthritis, depression and fatigue, and a 25-year-old man with schizophrenia, obesity and diabetes, have at least this in common: they will probably both struggle to access joined-up healthcare for body and mind. Psychological symptoms in patients with physical disease are potentially disabling yet routinely under-treated. Physical health problems in patients with major psychiatric disorders contribute to their shockingly reduced life expectancy, about 15 years shorter than that of people without them.

Why do we stick with such a fractured and ineffective system? I will focus on two arguments for the status quo: one from each side, from the tribes of medics and psychs.

For the medics, the problem is that we just don’t know enough about the biological causes of mental illness for there to be a deep and meaningful integration with the rest of medicine. Psychiatry is lagging behind scientifically more advanced specialities, such as oncology or immunology, and until it catches up in theory it can’t be joined up in practice. To which I would say yes but no: yes, greater detail about biological mechanisms for mental symptoms will be fundamental to the fusion of mind and body medicine in future; but no, that is not a sufficient defence of the status quo, not least because it discounts how much progress has already been made in making biomedical sense of illnesses such as schizophrenia.

When I started as a psychiatrist, about 30 years ago, we knew that schizophrenia tended to run in families; but it is only in the last 5-10 years that the individual genes conferring inherited risk have been identified. We were unsure whether schizophrenia was linked to structural changes in the brain; but MRI scanning studies have established beyond doubt that it is. We were puzzled that the risk of diagnosis was increased among young adults born in the winter months, when viral infections are more common; but now we can begin to see how the mother and child’s immune response to perinatal infection could disrupt the synaptic pruning process which is crucial to development of brain networks throughout childhood and adolescence.

For the psychs, the problem is fear of excessive reductionism: that the personal and social context of mental illness will be neglected in pursuit of an omnipotent molecule or other biological mechanism at the root of it all. That would indeed be a dead end, but it’s not a likely destination.

We have known since Freud that childhood experience can have a powerful effect on adult mental health. There is now massive epidemiological evidence that social stress, broadly speaking, and early life adversity in particular, are robust predictors of both mental illness and physical disease. Only a biomedical zealot in denial would claim this doesn’t matter. But the question remains: how does experience of poverty, neglect, abuse or trauma in the first years of life have such enduring effects on health many decades later?

Freud’s answer was that traumatic memories are buried deep in the unconscious mind. A more up-to-date answer is that social stress can literally “get under the skin” by rewriting the script for activation of the genetic blueprint. Molecular modifications called epigenetic marks cause long-term changes in the brain and behaviour of young rats deprived of maternal affection or exposed to aggression. Similar mechanisms could biologically embed the negative impacts of early-life adversity in humans, exacerbating inflammation and steering brain development on to paths that lead to mental health problems in future.

As things stand, these are plausible theories based on animal experiments rather than established facts in patients. But already they tell us this is not a zero-sum game. Drilling down on the biological mechanisms doesn’t mean that we must abandon or devalue what we know about the social factors that cause mental illness. Anxious anticipation of such a binary choice is itself a symptom of the divided way of thinking that we need to escape.

So, if we could entirely free ourselves from this unjustified class distinction between mental and physical health, what changes might we hope to see in future?

For medics and psychs, there will be more educational and career paths that cut across, rather than entrench, specialisations. Diagnostic labels categorically ordained by the bible of psychiatric diagnosis, the Diagnostic and Statistical Manual of Mental Disorders (DSM), will be reformulated in terms of the interactions between biomedical and social factors that cause mental symptoms. There will be new treatments to tackle the physical causes of mental illness, which are expected to be many and variable between patients, rather than trying to smother symptoms by “one size fits all” treatment regardless of cause. Knowing more about their physical roots, we should be much more successful at predicting and preventing mental health disorders.

For patients, the result will be better physical and mental health outcomes. There will be more integrated specialist physical and mental health services, like the new hospital we are planning in Cambridge for children and young people, so that body and mind can be treated under one roof throughout the first two decades of life. There will be more opportunities for people with relevant lived experience to co-produce research investigating the links between physical and mental health. But the biggest impact of all could be on stigma. The sense of shame or guilt that people feel about being mentally ill is an added load, a meta-symptom, culturally imposed by the false dichotomy between physical and mental health. Without it, the stigma of mental illness should fade away, just as the stigma attached to epilepsy, tuberculosis and other historically mysterious disorders has been diminished by an understanding of their physical causes.

Ultimately it is easier to imagine a better future for mental and physical health together than for either alone.

Edward Bullmore is professor of psychiatry at the University of Cambridge and author of The Inflamed Mind: A Radical New Approach to Depression (Short Books).

Further reading

Inventing Ourselves: The Secret Life of the Teenage Brain by Sarah-Jayne Blakemore (Black Swan, £9.99)

The Body Keeps the Score: Mind, Brain and Body in the Transformation of Trauma by Bessel van der Kolk (Penguin, £12.99)

Illness as Metaphor & Aids and its Metaphors by Susan Sontag (Penguin Classics, £14)

Comment Societies are inherently unequal. Lies to the contrary engender escapism through all manner of self abuse, guilt and self blame. Body chemistry responds to perceptions, the most obvious example being the brain’s body and mind chemical response to triggers for sexual arousal.

Many get off on power, even if the person is so lowly the best they can do is kick the cat. Depression comes from such circumstances as lack of hope, isolation, being scapegoated or convicted for things you haven’t done. These have physical consequences through perceptions and brain changes, starting with anxiety and self-neglect, leading into alcoholism and drug addiction, which can cause schizophrenia and hearing voices due to brain pathway damage, and homelessness. Guilt is a huge issue but it should be psychiatrists and the elite who feel it.

So enters the state psychiatrist, young, brainwashed that all the answers are in the 6kg DSM. R.D. Laing, author of what should have been groundbreaking, ‘The Divided Self.’

They are straight out of med school or uni, depending on whether they have studied medicine or psychology. Here they have learned less and less about less and less as education is progressively about social control and creating minions with what the system defines as ‘normal psychology.’ The job is the same. Save society from the guilt and punishment it deserves. Record numbers of increasingly hopeless, scared, depressed and demoralised youth are committing suicide. As with the current high profile U.K. case, the knee jerk response is to blame the internet because the corrupt elite running society need protection. That is why moronic lackeys from the police do the groundwork, banging on doors and dragging in the patients, handcuffed if necessary so the officers are safe. Society is one big sickness and getting worse. Covid lockdowns in response to a man made virus generated a mental health epidemic, health destroying ruin and suicide because the elite needed to create health destroying fear and conformity. R J Cook

September 22nd 2022

Why Companies Are So Interested in Your Myers-Briggs Type

If you’ve looked for a job recently, you’ve probably encountered the personality test. You may also have wondered if it was backed by scientific research.

By Ben Ambridge, September 7, 2022

How many piano tuners are there in the entire world? How much should you charge to wash every window in Seattle? How many golf balls can you fit in a school bus?

According to urban legend, these are all questions that Google asks at interviews. Or at least, that Google used to ask. Apparently the off-beat questions have fallen out of favor. So, what do they do instead? Well, as well as asking more standard interview questions (e.g., “Tell us about a time you faced and overcame an important challenge”), Google uses personality tests. In fact, according to Psychology Today, around 80 percent of Fortune 500 companies use personality tests in some form.

But is the use of personality testing for making hiring decisions backed by scientific research? Well, it’s complicated. There are two basic approaches to personality tests: trait-based and type-based.

Mainstream academic psychology has gone almost exclusively down the route of trait-based approaches. By far the dominant approach is known as the “Big 5,” as it assumes that personality can essentially be boiled down to five traits, summarized by the acronym OCEAN: Openness to experience; Conscientiousness; Extraversion; Agreeableness; and Neuroticism (these days, more often referred to as “Emotional Stability”). What makes this a trait-based (as opposed to a type-based) approach is that each of these follows a sliding scale. For example, you might score 82/100 for Conscientiousness, 78/100 for Extraversion, 48/100 for Agreeableness, and so on (if you’re interested, there are many places online you can take a free version of this personality test yourself). What this approach does not do is categorize people into types (e.g., “He’s an extrovert,” “She’s an introvert”).

Do scores on the “Big 5” predict aspects of performance in the workplace? Psychologists Leonard D. Goodstein and Richard I. Lanyon answer with a cautious “Yes.” Unsurprisingly, conscientiousness shows a correlation with most measures of job performance, regardless of the particular job or of how performance is measured, though the size of the correlation is modest. Researchers measure the relationship between two things (in this case, conscientiousness on a questionnaire and some measure of job performance) on a scale from 0 (no relationship whatsoever) to 1 (a perfect relationship: i.e., if you know a person’s conscientiousness score, you can predict with perfect accuracy their score on the measure of job performance).

On the 0–1 scale, the relationship between conscientiousness and job performance (as measured by this so-called “r value”) was 0.22; not trivial, but by no means large. This means only around 5 percent of the variation between different people on their job performance can be explained by their conscientiousness score on the personality questionnaire (calculated by squaring 0.22 to give the “r-squared value”). Similarly modest correlations were observed between extraversion and performance, but only—as you might expect—for employees involved in sales (r=0.15) or managing others (r=0.18). Openness to experience (creativity, enjoying new things) was positively correlated with employees’ ability at training others (r=0.25), but not with their job performance per se.
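The squaring step can be checked with a few lines of Python. This is only an illustrative sketch: the r values are the ones quoted in the paragraph above, while the labels and the helper name `variance_explained` are made up for the example.

```python
# Correlations (r values) quoted in the article; the labels are informal.
correlations = {
    "conscientiousness vs job performance": 0.22,
    "extraversion vs sales performance": 0.15,
    "extraversion vs managing others": 0.18,
    "openness vs ability at training others": 0.25,
}


def variance_explained(r):
    """Return r squared, expressed as a percentage of variation explained."""
    return (r ** 2) * 100


for label, r in correlations.items():
    pct = variance_explained(r)
    print(f"{label}: r = {r:.2f}, roughly {pct:.1f}% of variation explained")
```

For r = 0.22 the square is 0.0484, which is where the figure of around 5 percent comes from.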

So far, so (cautiously) good. But here’s the thing: Outside of academic psychology, in the world of big business, employers tend not to use the “Big 5” or other trait-based measures of personality. Instead, they lean toward type-based measures such as the Myers-Briggs. Type-based measures don’t give people scores on continuous scales but instead categorize them into distinct “types.”

In the case of Myers-Briggs, there are 16 different types, defined by the test-takers’ preferences on four dimensions:

Extraversion (outgoing, life and soul of the party) or Introversion (prefer calmer interactions)

Sensing (relying mainly on your eyes and ears, etc.) or Intuition (seeing patterns or connections)

Thinking (prioritizing logic in decision making) or Feeling (prioritizing emotions in decision making)

Judging (living life in a planned, orderly way) or Perceiving (living life in a flexible, spontaneous way).

Your personality-type is simply a combination of your preferences. For example, my type would be “Extraversion Intuition Thinking Judging.”
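To see why four either/or preferences give exactly 16 types, the combinations can be enumerated. The sketch below is only an illustration of the counting, not anything taken from the test itself; the names `DIMENSIONS` and `all_types` are invented for the example.

```python
from itertools import product

# The four Myers-Briggs dimensions described above, each a two-way preference.
DIMENSIONS = [
    ("Extraversion", "Introversion"),
    ("Sensing", "Intuition"),
    ("Thinking", "Feeling"),
    ("Judging", "Perceiving"),
]

# One choice per dimension: 2 x 2 x 2 x 2 = 16 possible combinations.
all_types = [" ".join(combo) for combo in product(*DIMENSIONS)]

print(len(all_types))
for personality_type in all_types:
    print(personality_type)
```

Note the contrast with a trait-based score: each person lands in one of a fixed set of boxes, and no information is kept about how strongly they lean either way on a dimension.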

How does the Myers-Briggs fare as a measurement of personality? The answer, unlike for the “Big 5,” which is generally well-supported by a large body of research, is that we just don’t know. A 2017 systematic review and meta-analysis set out to investigate “the validity and reliability of the Myers-Briggs,” trying to determine whether the test measures what it claims to measure (validity), and whether it comes up with more-or-less the same answer if people take the test several times (reliability).

The researchers came up almost blank: Out of 221 studies of the Myers-Briggs Type Indicator, only seven studies met their criteria for inclusion: four looking at validity and three at reliability. The four validity studies concluded that individual Myers-Briggs scores do seem to correlate well with one another and/or other personality measures, although the studies were too different to allow their results to be combined (as is usually done for meta-analysis). The three reliability studies concluded that the correlation between an individual’s score on different sittings of the same test is generally good (r=0.7–0.8), although almost all were conducted on college students, who are not necessarily representative of the general population.

And that’s it—there’s simply very little data on how well the Myers-Briggs (and other type-based personality tests) measures personality and even less on how it might predict job performance. We just don’t know. It’s too early to say that the test is “meaningless,” “totally meaningless,” or “a fad.” One thing is clear: valid and reliable alternatives that have been shown to correlate with job performance—tests based on the “Big 5” model—are widely available for free.

Comment This is not about accuracy. It is about reinforcing conformity, implanting in candidates what they should aspire to regardless of class, race, gender or experience. Britain is a leader in police state controls, using notions of the DSM (Diagnosis, Statistics and Medication) along with malicious police devices like PNC Criminal Markers created by malicious moronic police officers on dubious so-called ‘soft intelligence’, the likes of which saw a young black man shot dead in Streatham. A person can go a lifetime without getting a good job or any job at all, so no mortgage because of this. Background checks are secretive, with confidential calls and memos destroying people’s lives, from vindictive police and careerists who never admit mistakes, closing ranks to protect themselves.

The propaganda that Britain or the U.S. exemplify democracy is absurd. The rampant Royalism which the elite and their media are now projecting on to the once vilified Charles and Camilla should be hard to stomach by people with a brain, unless they are part of the ruling elite interest group or their lackeys who certainly have questionable intelligence.

Britain has reached new levels of fake diversity. Take the deaths of black Stephen Lawrence, Dalian Atkinson, Jean Charles de Menezes and the Streatham police killing. Police response in this country to their misconduct and crimes is always knee jerk cover up ‘in the public interest.’ They mean ‘class interest.’ This was pretty obvious from all the black dictators and other tyrants turning up welcome at the Queen’s funeral. The Queen was a totem who inherited a life of privilege, now portrayed as a Mother Teresa with a life of sacrifice. The self indulgence of the Royal family does not bear scrutiny. The masses love it, because like puppets they cannot see the strings. That is what the money spinning Myers-Briggs test is all about.

R J Cook

I knew my marriage was over by then, but we have to be treated as freaks and forced to conform, as through devices like Myers-Briggs.