This is just a random selection or collection of things that interest me – Robert Cook

Robert Cook is a former engineering buyer for the Nitrate Corporation of Chile and a former construction worker.

How the Dreadnought sparked the 20th Century’s first arms race

By Giles Edwards

BBC News

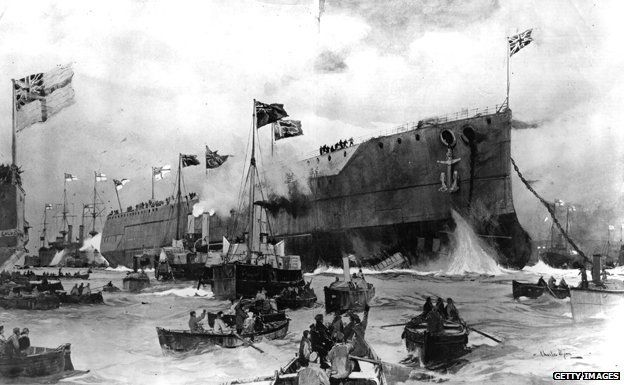

In 1906, HMS Dreadnought was launched. Described as a deadly fighting machine, it transformed the whole idea of warfare and sparked a dangerous arms race.

On 10 February 1906 the world’s media gathered in Portsmouth to watch King Edward VII launch what he and his ministers knew would be a world-beating piece of British technology.

It was both an entrancing piece of high technology and a weapon of previously unimagined destructive power. What the king unveiled that day was the Royal Navy’s newest warship – HMS Dreadnought.

At the time, Britain was a nation obsessed with the Navy. The Navy was at the centre of national life – politically powerful and a major cultural force as well, with images of the jolly sailor Jack Tar used to sell everything from cigarettes to postcards. The 100th anniversary of the Battle of Trafalgar just months earlier had served to remind anyone who doubted it of the Royal Navy’s power, size and wild popularity.

So if the British public had come to expect their Navy to be world-beaters, they were delighted with Dreadnought, and eager to hear all about her.

Read More How the Dreadnought sparked the 20th Century’s first arms race – BBC News

The HMS Dreadnought, 1917 is a rank V British battleship with a battle rating of 6.7 (AB/RB/SB). It was introduced in Update “New Power”.

The Dreadnought is the battleship that launched the dreadnought revolution in 1906. She was the first all-big gun battleship powered by turbines to enter service with any navy, representing a quantum leap in firepower and speed over all previous capital warships and further establishing Britain’s position as the leading world naval power at that time. Fittingly, the Dreadnought is also one of the first battleships to be introduced into the game.

General info

Survivability and armour

| Armour | Front / side / back |
|---|---|
| Citadel | 100 / 279 / 19 mm |
| Main fire tower | 279 / 279 / 330 mm |
| Hull | 25 mm (steel) |
| Superstructure | 16 mm (steel) |
| Number of sections | 9 |
| Displacement | 20 730 t |
| Crew | 810 people |

The Dreadnought has a reasonably thick armoured belt for a first-generation dreadnought battleship. It is 279 mm thick below the waterline and 203 mm above the waterline, thinning out to 152 mm towards the bow and 102 mm towards the stern. The Dreadnought also features a “turtleback” citadel, with angled 70 mm and 76 mm plates behind the main belt designed to deflect shells that penetrate the main belt. The bow end of the citadel is protected by an angled 102 mm plate, while the stern end is protected by a 203 mm vertical upper plate and 102 mm angled lower plate. The upper deck plating is 19 mm thick and the lower deck plating is 45 mm thick amidships, 38 mm over the bow, and 51 mm over the stern and steering gear.

The main gun turrets are protected by angled 279.4 mm plating around the front and sides, 330 mm plating on the rear, and 76.2 mm plating on the turret roof and the bottom. The bow, stern, and wing turret barbettes are 279 mm thick facing outwards from the ship and 203 mm facing inwards, while the amidships turret has 203 mm all-round barbette protection.

The main gun magazines are located well below the waterline and are further protected by additional 51 mm and 25 mm plates, with 102 mm plating covering the outward facing sides of the wing turret ammunition magazines.

The bridge is protected by an armoured conning tower with 279 mm thick sides and a 76 mm roof.

The Dreadnought also has additional protection amidships from her coal bunkers, which provide the equivalent of about 40 mm of steel.

The Dreadnought has a small crew complement for a battleship, along with the British Colossus.

Mobility

| Speed | Forward / back |
|---|---|
| AB | 45 / 26 km/h |
| RB | 39 / 22 km/h |

The Dreadnought has about average mobility for a first-generation dreadnought. As a battleship, she displaces much more than cruisers and destroyers, and thus her acceleration and top speed are much lower. Her handling and manoeuvrability are about average for a battleship.

Mobility Characteristics

| Game Mode | Upgrade Status | Maximum Speed Forward (km/h) | Maximum Speed Reverse (km/h) |
|---|---|---|---|
| AB | Upgraded | 45 | 26 |
| RB/SB | Upgraded | 39 | 22 |

Modifications and economy

| Item | Value |
|---|---|
| Repair cost, AB (basic → reference) | 26 000 → 32 656 |
| Repair cost, RB (basic → reference) | 51 000 → 64 056 |
| Total cost of modifications | 283 000 / 423 000 |
| Talisman cost | 2 200 |
| Crew training | 230 000 |
| Experts | 790 000 |
| Aces | 1 800 |
| Research Aces | 780 000 |
| Reward for battle, AB / RB / SB | 450 / 600 / 100 % and 202 / 202 / 202 % |

Armament

Primary armament

| 5 × Turret | 2 × 305 mm/45 Mark X cannon |
|---|---|
| Ammunition | 220 rounds |
| Vertical guidance | -3° / 13° |

Main article: 305 mm/45 Mark X (305 mm)

The Dreadnought is armed with ten BL 12-inch Mark X main guns located in five twin turrets. Two of these are wing turrets located amidships that can only fire to one side each, thus her actual maximum broadside consists of only eight main guns from four twin turrets. She can also bring six guns to bear directly ahead or astern from the wing turrets (which have 180 degrees of traverse) and the bow/stern turret. The turrets have fairly good traverse arcs towards the bow of the ship (the two rear turrets can traverse up to 150 degrees to each side) and somewhat worse towards the stern (the bow turret can only traverse up to 141 degrees to each side). The guns have a maximum rate of fire of 2 rounds/minute per gun with an aced crew.
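The gun-count arithmetic in the paragraph above can be checked with a small sketch. The layout below is a deliberately simplified model, not data taken from the game or from ship plans: the `Turret` class, the `bearings` sets and the function names are all hypothetical, and each turret is simply tagged with the directions the text says it can fire in.

```python
from dataclasses import dataclass

# Rate of fire per gun with an aced crew, as quoted in the text above.
ROUNDS_PER_MINUTE_PER_GUN = 2


@dataclass(frozen=True)
class Turret:
    name: str
    guns: int
    bearings: frozenset  # directions this turret can fire in (simplified assumption)


# Hypothetical, simplified layout: five twin turrets. The wing turrets fire
# ahead, astern and to their own side only; the centreline turrets fire to
# both sides, and only the bow/stern turret fires directly ahead/astern.
TURRETS = (
    Turret("bow", 2, frozenset({"ahead", "port", "starboard"})),
    Turret("port wing", 2, frozenset({"ahead", "astern", "port"})),
    Turret("starboard wing", 2, frozenset({"ahead", "astern", "starboard"})),
    Turret("amidships", 2, frozenset({"port", "starboard"})),
    Turret("stern", 2, frozenset({"astern", "port", "starboard"})),
)


def guns_bearing(direction: str) -> int:
    """Count the guns that can fire in the given direction."""
    return sum(t.guns for t in TURRETS if direction in t.bearings)


def rounds_per_minute(direction: str) -> int:
    """Maximum rounds per minute in a direction at the quoted rate of fire."""
    return guns_bearing(direction) * ROUNDS_PER_MINUTE_PER_GUN


if __name__ == "__main__":
    for direction in ("ahead", "astern", "port", "starboard"):
        print(direction, guns_bearing(direction), rounds_per_minute(direction))
```

Under these assumptions the model gives eight guns on either broadside and six ahead or astern, matching the figures in the text, and a broadside of at most 16 rounds per minute.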

Ammunition types consist of HE Mark IIa, APC Mark VIa, and CPC Mark VIIa (semi-armour piercing). The HE shell has the second largest explosive filler of any 12-inch/305 mm shell at ~53 kg of TNT equivalent, with only the SAP shell of the Russian/Soviet 305 mm used by the Parizhskaya Kommuna and Imperatritsa Mariya having more explosive (~55 kg TNT). It is capable of causing immense damage to destroyers, most cruisers, and other lightly armoured targets. By contrast, the APC shell is the lightest of the 12-inch AP projectiles, has a relatively small filler of only ~12 kg TNT, and has the lowest penetration of the AP shells of its calibre. The CPC SAP shell is a compromise between the explosive power of the HE shell and the penetration of the APC shell. It has enough penetration to go through anything other than thick battleship belt armour, and has ~36 kg of TNT.

Dreadnought’s rangefinder is quite poor, having a measurement accuracy of only 86% upgraded, reflecting its age.

Secondary armament

| 18 × Turret | 76 mm/50 12pdr 18cwt QF Mark I cannon |
|---|---|
| Ammunition | 300 rounds |

Main article: 76 mm/50 12pdr 18cwt QF Mark I (76 mm)

The Dreadnought’s secondary armament consists of 18 QF 12-pounder Mark I guns in single mounts. These are 76 mm guns, thus the Dreadnought’s secondary battery is the smallest of any battleship in the game. Each gun has a rate of fire of 15 rounds/minute with an aced crew. Each main gun turret has two of these on its roof, with the remaining eight guns scattered around the ship’s hull and superstructure. These guns can only elevate up to 20 degrees and only fire CP semi-AP shells, thus are only useful against surface targets. The CP shell has a small filler of only 520 g TNT equivalent and low penetration, thus it will struggle to do much damage against anything other than coastal craft.

Anti-aircraft armament

| 2 × Turret | 76 mm/45 QF 3in 20cwt HA Mark I cannon |
|---|---|
| Ammunition | 150 rounds |

Main article: 76 mm/45 QF 3in 20cwt HA Mark I (76 mm)

The Dreadnought has two anti-aircraft QF 12-pounder 20-cwt HA Mark I guns. These have a low rate of fire of only 12 rounds/minute with an aced crew, and there is no option to use time-fused shells, making them less effective against aircraft.

Read More HMS Dreadnought – War Thunder Wiki

Comment: I knew my father for a few short years, a natural engineer who taught me so much. After his death I lived in my world of model making. Many years later, my ex-father-in-law, another gifted engineer, out of his time, became a surrogate father for a while. He helped me build a flying model Spitfire. We drifted apart for various reasons and I reverted to my interest in social sciences and social engineering.

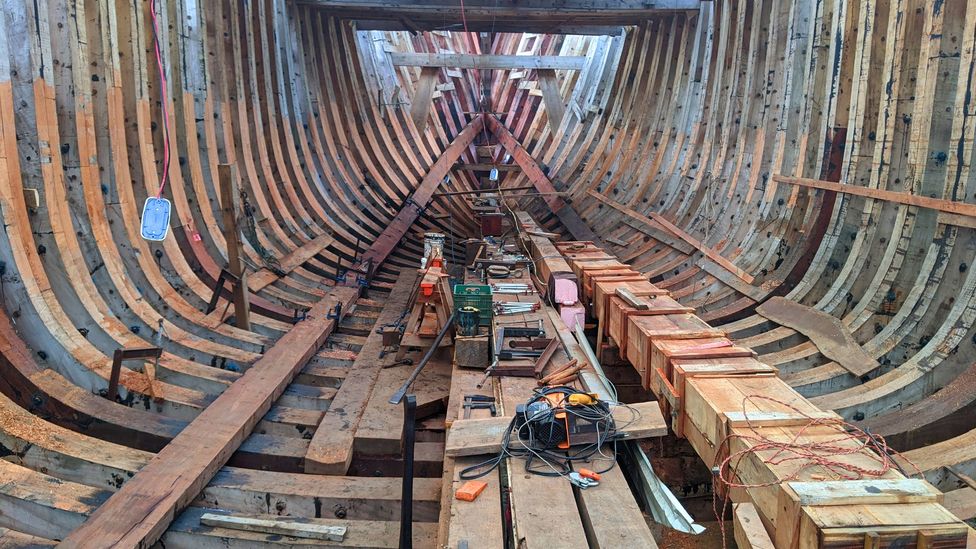

Either way we are playing with nature and the consequences can be explosive. Leaving that to one side, I will start with the time I asked my late father how metal ships could float. He explained Archimedes’ principle, and his famous Eureka moment.

So I understand that. What remains to amaze me is how humans can manipulate materials to build great ships like the Dreadnought. How sad that this is all motivated by war – though Brunel who pioneered Iron ships had no such motive, but these people are always manipulated by politicians. R J Cook
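As a rough illustration of Archimedes’ principle, the sketch below works out how much seawater a ship of Dreadnought’s quoted displacement (20 730 t) has to push aside in order to float. The densities are assumed typical values rather than figures from any of the articles above, and the function names are hypothetical.

```python
# Assumed typical densities, in tonnes per cubic metre (not from the source).
SEAWATER_DENSITY = 1.025
STEEL_DENSITY = 7.85


def displaced_volume_m3(mass_t: float, fluid_density: float = SEAWATER_DENSITY) -> float:
    """Volume of fluid whose weight equals the weight of the floating body."""
    return mass_t / fluid_density


def floats(mass_t: float, enclosed_volume_m3: float,
           fluid_density: float = SEAWATER_DENSITY) -> bool:
    """A body floats if its average density is below that of the fluid."""
    return mass_t / enclosed_volume_m3 < fluid_density


if __name__ == "__main__":
    mass = 20_730  # tonnes, the displacement quoted for Dreadnought above
    print(f"Seawater displaced: {displaced_volume_m3(mass):.0f} m^3")

    # The same mass as a solid block of steel occupies far less volume,
    # so its average density is that of steel and it sinks.
    solid_volume = mass / STEEL_DENSITY
    print("Solid steel block floats:", floats(mass, solid_volume))

    # A hollow hull enclosing more volume than the displaced water floats.
    print("Hull enclosing 30 000 m^3 floats:", floats(mass, 30_000))
```

The point of the comparison is that the steel itself is no lighter; shaping it into a hull that encloses air lowers the average density of the whole ship below that of the water.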

MODERN SHIPYARD TECHNOLOGY AND EQUIPMENT

After commenting on the immensity of the changes that have taken place in shipyards since the year 1950, the author goes on to review recent developments in shipyard technology and equipment. Those discussed have to do with steelwork, metal forming, welding and other joining methods, pipeworking, prefabrication and assembly, outfitting, shipyard lifts, and outfitting afloat. Lastly, the review looks at some of the major changes being made to modernize two very different shipyards in the UK–a Clydeside merchant shipyard and a state-owned naval dockyard facility for the construction of nuclear-powered submarines.

- Availability:

- Find a library where document is available. Order URL: http://worldcat.org/issn/03060209

- Supplemental Notes:

- Journal article

- Authors:

- Dawson, C

- Publication Date: 1990-11

March 20th 2022

Is the Boeing 737 Max now safe to fly? Here’s a look at the jet’s past problems and future challenges.

By DOMINIC GATES, THE SEATTLE TIMES

Shrouded by the darkest clouds in its history from the unprecedented pandemic-driven collapse of the airliner business, Chicago-based Boeing has one glimmer of a silver lining left for 2020: The 737 Max may finally fly passengers again.

The Federal Aviation Administration in August laid out the proposed fixes for the design flaws in the Max’s automated flight controls, starting a clock that could see Boeing get the green light sometime next month — with U.S. airlines then scrambling to get a few Max jets flying by year end.

On the two fatal Max flights, an erroneous signal triggered software — the Maneuvering Characteristics Augmentation System — that repeatedly pushed each plane’s nose downward until it crashed.

Is fixing that flight control software good enough? Will the updated 737 Max really be safe?

Former jet-fighter pilot and aeronautical engineer Bjorn Fehrm is convinced. Though he calls the design flaws that caused the two 737 Max crashes “absolutely unforgivable,” he believes Boeing has definitively fixed them.

Read More Is the Boeing 737 Max now safe to fly? Here’s an in-depth look. – Chicago Tribune

Designer: William Edward Boeing, George Conrad Westervelt

First flight: 15 June 1916

Manufacturer: Boeing

Number built: 2

Getting It Wright

The brothers gained the mechanical skills essential to their success by working for years in their Dayton, Ohio-based shop with printing presses, bicycles, motors, and other machinery. Their work with bicycles, in particular, influenced their belief that an unstable vehicle such as a flying machine could be controlled and balanced with practice. This was a trend as many other aviation pioneers were also dedicated cyclists and involved in the bicycle business in various ways. From 1900 until their first powered flights in late 1903, the brothers conducted extensive glider tests that also developed their skills as pilots. Their shop employee Charles Taylor became an important part of the team, building their first airplane engine in close collaboration with the brothers.

Capitalizing on the national bicycle craze (spurred by the invention of the safety bicycle and its substantial advantages over the penny-farthing design), in December 1892 the brothers opened a repair and sales shop (the Wright Cycle Exchange, later the Wright Cycle Company) and in 1896 began manufacturing their own brand. They used this endeavor to fund their growing interest in flight. In the early or mid-1890s they saw newspaper or magazine articles and probably photographs of the dramatic glides by Otto Lilienthal in Germany.

The Wrights based the design of their kite and full-size gliders on work done in the 1890s by other aviation pioneers. They adopted the basic design of the Chanute-Herring biplane hang glider (“double-decker” as the Wrights called it), which flew well in the 1896 experiments near Chicago, and used aeronautical data on lift that Otto Lilienthal had published. The Wrights designed the wings with camber, a curvature of the top surface.

The brothers did not discover this principle, but took advantage of it. The better lift of a cambered surface compared to a flat one was first discussed scientifically by Sir George Cayley. Lilienthal, whose work the Wrights carefully studied, used cambered wings in his gliders, proving in flight the advantage over flat surfaces. The wooden uprights between the wings of the Wright glider were braced by wires in their own version of Chanute’s modified Pratt truss, a bridge-building design he used for his biplane glider (initially built as a triplane). The Wrights mounted the horizontal elevator in front of the wings rather than behind, apparently believing this feature would help to avoid, or protect them from, a nosedive and crash like the one that killed Lilienthal. Wilbur incorrectly believed a tail was not necessary, and their first two gliders did not have one.

In 1904–1905, the Wright brothers developed their flying machine to make longer-running and more aerodynamic flights with the Wright Flyer II, followed by the first truly practical fixed-wing aircraft, the Wright Flyer III. The brothers’ breakthrough was their creation of a three-axis control system, which enabled the pilot to steer the aircraft effectively and to maintain its equilibrium. This method remains standard on fixed-wing aircraft of all kinds. From the beginning of their aeronautical work, Wilbur and Orville focused on developing a reliable method of pilot control as the key to solving “the flying problem”. This approach differed significantly from other experimenters of the time who put more emphasis on developing powerful engines. Using a small home-built wind tunnel, the Wrights also collected more accurate data than any before, enabling them to design more efficient wings and propellers. Their first U.S. patent did not claim invention of a flying machine, but rather a system of aerodynamic control that manipulated a flying machine’s surfaces.

The Wright brothers were certainly complicit in the lack of attention they received. Fearful of competitors stealing their ideas, and still without a patent, they flew on only one more day after October 5. From then on, they refused to fly anywhere unless they had a firm contract to sell their aircraft. They wrote to the U.S. government, then to Britain, France and Germany with an offer to sell a flying machine, but were rebuffed because they insisted on a signed contract before giving a demonstration. They were unwilling even to show their photographs of the airborne Flyer.

The American military, having recently spent $50,000 on the Langley Aerodrome – a product of the nation’s foremost scientist – only to see it plunge twice into the Potomac River “like a handful of mortar”, was particularly unreceptive to the claims of two unknown bicycle makers from Ohio. Thus, doubted or scorned, the Wright brothers continued their work in semi-obscurity, while other aviation pioneers like Santos-Dumont, Henri Farman, Léon Delagrange, and American Glenn Curtiss entered the limelight.

European skepticism

March 16th 2022

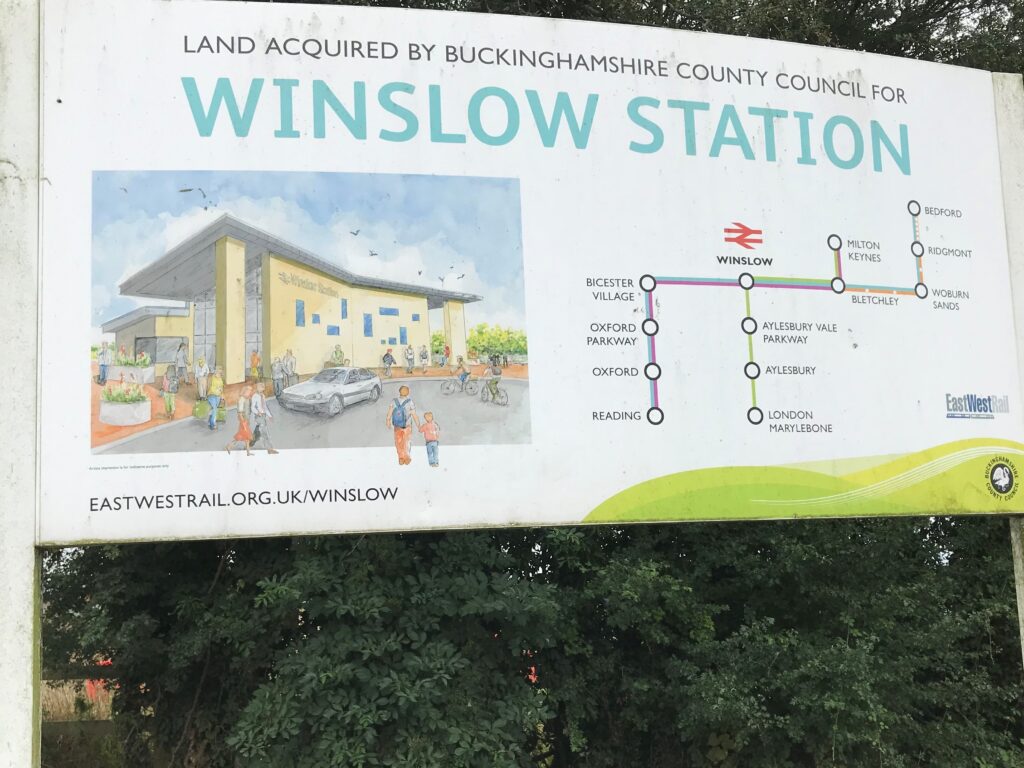

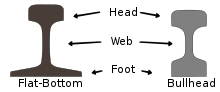

Engineering the old Oxford – Cambridge East West Railway Line At Winslow, Bucks.

The line’s original engineer Robert Stephenson quit because the clay soil was difficult and subject to land slippage. Even with modern equipment, the Claydon ground still proved difficult. Image by R J Cook / Appledene Photo graphics March 14th 2022.

RJ Cook and K C Close are producing a new book on the rise, fall and rise of this line. It is due in shops in 2024.

Cook was a senior council member campaigning for reopening the line in the early 1980s, with exclusive material on the project and specialist knowledge, having been involved with equipping railways in Chile. Cook has considerable knowledge of this railway and the engineering involved.

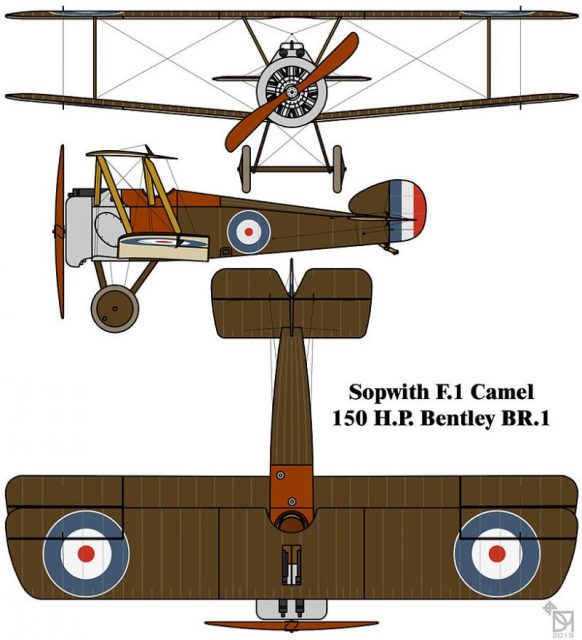

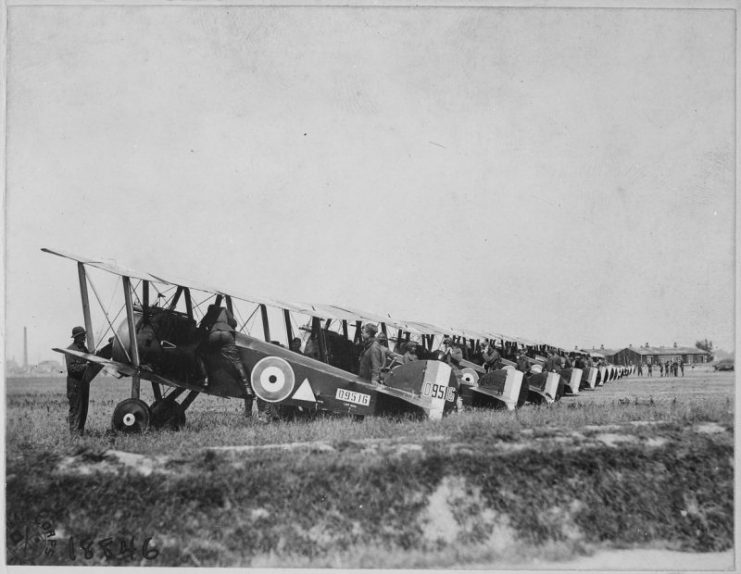

Wartime Military Gliders Under Construction.

The Development Of Glider Warfare During World War Two

by Peter Wood

Following on from the Wright Brothers’ invention of aircraft and their experimental use of engineless aircraft (gliders), it was during the 1936 Berlin Olympic Games that the sport of gliding was first introduced on a worldwide scale led by the International Olympic Committee (IOC). The demonstration of gliders at the Games took place on 4th August at the Berlin-Staaken airfield. A total of 14 pilots from across seven countries (Bulgaria, Italy, Hungary, Yugoslavia, Switzerland, Germany and Austria) took part in the demonstration, although no prizes were issued by the IOC.

Pioneering pilots such as Hanna Reitsch, a female German test pilot, soon showed promise for a career in the sport. Following the huge success and potential shown by these pilots, gliding was officially accepted by the IOC at their Cairo Conference in 1938, with plans to include it in the 1940 Olympic Games for the first time. The DFS Olympia Meise, a single-seat glider, was drawn up and prepared specifically for use in the 1940 games, which were cancelled due to the outbreak of the Second World War and the Finnish/Soviet Winter War.

January 27th 2022

January 21st 2022

China’s $1Tn artificial Sun burns five times brighter than real thing in breakthrough test

Antony Ashkenaz

Beijing spent $1trillion on developing an experimental nuclear reactor that could bring the world a step closer to achieving limitless clean energy. In a breakthrough test, this “artificial Sun” set a new world record after it was able to superheat a loop of plasma to temperatures five times hotter than the Sun for more than 17 minutes, according to state media reports.

Read More China’s $1Tn artificial Sun burns five times brighter than real thing in breakthrough test (msn.com)

January 18th 2022

British flyers may be caught in 5G aviation row which should never have reached boiling point

Geoff White, technology reporter

The language from the airlines is stark – “safety systems on aircraft will be deemed unusable” and the “vast majority of the travelling and shipping public will essentially be grounded”.

What’s causing the concern is the rollout in the US of the 5G mobile phone network.

January 17th 2022

SS Nomadic – Explore – Titanic Belfast

December 21st 2021

The first London Bridge was built by the Romans as part of their road-building programme, to help consolidate their conquest. The first bridge was probably a Roman military pontoon type, giving a rapid overland shortcut to Camulodunum from the southern and Kentish ports, along the Roman roads of Stane Street and Watling Street (now the A2).

The abutments of modern London Bridge rest several metres above natural embankments of gravel, sand and clay. From the late Neolithic era the southern embankment formed a natural causeway above the surrounding swamp and marsh of the river’s estuary; the northern ascended to higher ground at the present site of Cornhill. Between the embankments.

Old London Bridge

The Old London Bridge of nursery rhyme fame dates from 1176, when Peter of Colechurch, a priest and chaplain of St. Mary’s of Colechurch, began construction of the foundation. Replacing a timber bridge (one of several built in late Roman and early medieval times), Peter’s structure was the first great stone arch bridge built in Britain. It was to consist of 19 pointed arches, each with a span of approximately 7 metres (24 feet), built on piers 6 metres (20 feet) wide; a 20th opening was designed to be spanned by a wooden drawbridge. The stone foundations of the piers were built inside cofferdams made by driving timber piles into the riverbed; these in turn were surrounded by starlings (loose stone filling enclosed by piles). As a result of obstructions encountered during pile driving, the span of the constructed arches actually varied from 5 to 10 metres (15 to 34 feet). In addition, the width of the protective starlings was so great that the total waterway was reduced to a quarter of its original width, and the tide roared through the narrow archways like a millrace. “Shooting the bridge” in a small boat became one of the thrills of Londoners.

Rolls-Royce receives huge boost as it ties up required funding to start supplying parts for mini nuclear reactors – December 20th 2021

Rolls-Royce has received a huge boost as it tied up the required funding to start supplying parts for mini nuclear reactors.

The Qatar Investment Authority, the country’s wealth fund, will pour £85m into the engine-maker’s nuclear offshoot, which now has total funding of £490m.

It means it can start scouting sites for factories from which it will supply parts to build Small Modular Reactors (SMRs).

10 World Engineering Marvels

These remarkable feats of design and construction transformed the ways that people travel, communicate and live.

Don’t miss the premiere of The Engineering That Built the World this Sunday, October 10 at 9/8c on The HISTORY Channel.

Oxford, Worcester and Wolverhampton Railway – Wikipedia

THE 1980S– A DECADE OF DISASTER FOR RAILWAY WORKSHOPS

In the UK, at the start of the 1980s, there were 13 major railway works, employing over 30,000 staff with extensive engineering design and construction skills, but by the end of the decade, only 4 works were left and staff numbers had fallen to just over 8,000. Following the 1968 Transport Act, BR’s Workshops Division was able to bid for non-BR work, including potential export orders internationally. On 1st January 1970 it was rebranded as British Rail Engineering Limited.

There were a number of major workshop closures in the 1960s, with Glasgow Cowlairs being one of the last, and in the 1970s, only Barassie Wagon Works, near Troon, shut its gates for the last time. That said, the loss of jobs and engineering skills continued, and the 1980s would see a step change in the pace of that decline.

This was driven to a great extent by the government’s “Transport Act 1981”, which provided British Railways Board with the option to dispose of any part of its business, and subsidiary companies, amongst other activities related to components of the old British Transport Commission, and various road transport measures. The act did not specify which subsidiaries were, or could be offered for sale, but debates in parliament did contend that this would include BREL. The MP for Barrow-in-Furness, Albert Booth, made this observation in parliament in April 1981:

“The object of the amendment (“amendment No. 1”) is clear. It is to keep British Rail Engineering Ltd. strictly within the scope of British Railways and the British Railways Board and to remove the ability that the Bill would confer on the Minister to instruct the board to sell the engineering subsidiary or to prevent British Railways from seeking the consent of the Minister to sell the subsidiary.”

The 1980s – A Decade of Disaster for Railway Workshops – Railway Matters (twsmedia.co.uk)

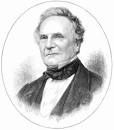

Pacific Railroad – July 7th 2021

Pacific Railroad

North America’s first transcontinental railroad (known originally as the “Pacific Railroad” and later as the “Overland Route”) was a 1,912-mile (3,077 km) continuous railroad line constructed between 1863 and 1869 that connected the existing eastern U.S. rail network at Council Bluffs, Iowa with the Pacific coast at the Oakland Long Wharf on San Francisco Bay.

- Locale: United States of America

- Operator(s): Central Pacific, Union Pacific

- Other name(s): Pacific Railroad

- Owner: U.S. Government

first transcontinental railroad – Bing images

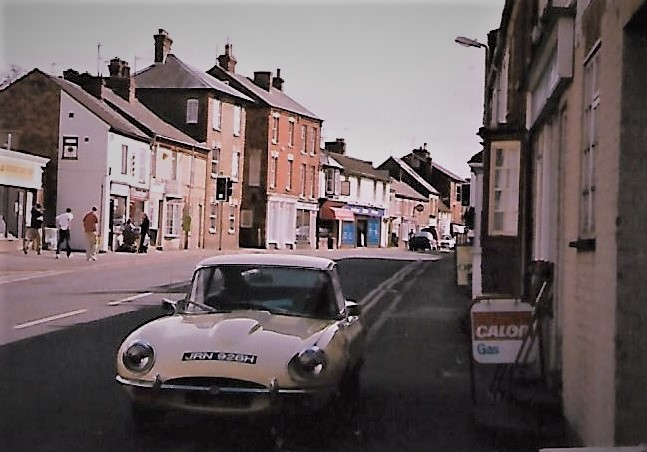

The American Made Rolls Royce Auto – Not a Success Story Posted June 2021

Robert Cook

By Terry E. Voster | Submitted On June 02, 2008

At one point in time the venerable prestige Rolls-Royce fine motor cars were made and manufactured in the U.S.A. – the United States of America. However this early example of marketing and production offshore and off home base was doomed to failure.

A bare six months after the signing of the historic contract between Charles Rolls and Henry Royce the export drive of Rolls-Royce was on its way. Early in September 1906 Charles Rolls was on his way to the United States, taking with him four cars as samples of the company’s wares. One of these cars was sold almost as soon as it was unloaded; one went straight away to Texas. The remaining two vehicles served as sales and marketing vehicles, examples of the fine craft and attention to detail that the company became world famous for. One of the cars was kept on the road as a demonstration model, while the other was put on display at the New York Auto Show. That first appearance at the auto show was a great success for Rolls-Royce as well: an additional four orders were taken for new cars. An American distributor also stepped up to the plate.

Business grew for Rolls-Royce in America to the point that in the 12-month period before the beginning of the First World War, fully 100 vehicles were sold. By this time the owners and management of the firm had recognised the great sales potential for Rolls-Royce motorcars in the United States. Judged on current trends and market sales information and experience, they came to the conclusion that the American market for their fine products was larger and richer than anything that they could expect to attain in their home market and current manufacturing domain, England. Import restrictions and tariffs would be the limiting factor for Rolls-Royce, both in the added cost to American consumers, who would have to absorb the import tariffs on their vehicles, and in the profitability of Rolls-Royce in America.

The die was cast. As promptly as possible American manufacturing facilities were set up. This was to be a full Rolls-Royce manufacturing facility in America. A factory itself was purchased in Springfield Massachusetts. Manufacturing was promptly commenced under the direct supervision of none other than Henry Royce himself. Production was done mainly by local workers, aided and supervised by a fleet of 50 tradesmen from the British Derby factory itself. These British workers actually physically immigrated to America permanently with their families as well.

Production at this Springfield plant commenced in 1921 with Rolls-Royce firmly stating that the product from this auto plant would be the equal of anything built at the home plant located at Derby, England. The plan was that parts would be shipped and assembled in the US with custom-made coachwork made by existing prestigious American firms. Interestingly enough, over time the number of items made locally in the US, as opposed to Britain, began to actually increase, not decrease. However, the consistency of the product, in terms of product line and actual product, began to deviate from the strict British-made product. Only the first 25 rolling chassis were actually identical to the Derby factory items. As time went on there were more and more deviations from the strict British product. Some of this may have been due to the personal preferences and procedures of the different local American coachbuilders. After all, each was a premium established firm with its own distinct products, styles and methods. Some was due to requests from American customers, who could individualize and personalize their American-made car to their own preferences and styles.

What did in the American Rolls-Royce? For one thing, cost. Substantial costs were incurred in converting the cars from right-hand British drive to left-hand American. As a result of the increased costs incurred, the selling price of these American-made Rolls-Royces was not nearly as competitive as other prestige automotive products available on the U.S. market. Next, the primary U.S. coachmaker for Rolls-Royce, the Brewster Coachbuilding firm, fell into financial difficulties. Then along came the 1929 stock market crash. The American Rolls-Royce might have continued save for one major marketing blunder. The British parent firm introduced a dynamite model, the Phantom. The car was not made in the US, nor even made available, by import of 100 cars, till a year later. The car had a great reception in the prestige auto market in the USA. However, by the time it was decided to manufacture this hit product to meet the American demand, the actual Phantom model had been replaced by an ultra high-tech and sophisticated model, the Phantom II. With the retooling costs incurred, the calculation was that each American Rolls-Royce Phantom II car unit produced and sold would cost the company an astounding 1 million to produce, in comparison to the 1929 customer price threshold for luxury prestige automobiles of only $20,000.

The fate of Rolls-Royce American manufactured products was sealed. The firm honored the last 200 orders for their cars. By 1935 these orders were completed and delivered to their customers.

That was the ending of the Rolls-Royce experiment of producing an American made prestige car product.

Terry E. Voster – Winnipeg Used Cars

Iron Shipbuilding on the Thames, 1832-1915: An Economic and Business History. By A. J. Arnold. Aldershot and Burlington, Vt.: Ashgate, 2000. Pp. 198. $94.95.

Posted April 23rd 2021

The availability of wrought iron in bulk, and in a form capable of being rolled into plates that could be riveted together, revolutionized shipbuilding in Britain in the middle of the nineteenth century. What had previously been a widely dispersed industry, depending on small groups of specialized craftsmen using traditional skills to build wooden sailing ships, was transformed into one dominated by large firms closely related to heavy engineering. The River Thames below London Bridge, which had long been one of the most successful bases of traditional shipbuilding in Britain, was ultimately unable to compete with other districts possessing easier access to coal, iron, and engineering skills. Not even the excellence of the metropolitan engineers, who had led the world in the development of machine tools and marine steam engines up to the mid-nineteenth century, could save the Thames shipyards from decline.

A. J. Arnold’s Iron Shipbuilding on the Thames is presented as an economic and business history, and it fulfills this objective satisfactorily. Apart from the introduction and conclusion, both quite brief, the main text is divided into six chapters dealing with consecutive periods between 1832 and 1915. Each chapter offers an overview and then considers the main shipyards operating on the Thames at the time. There are substantial appendices with lists of Admiralty ships built in London by private yards and of all the iron ships built in the main yards. The result is a very competent survey, providing useful information and analysis of the rise and decline of iron shipbuilding on the Thames.

It is disappointing, however, to have such an eventful and dramatic story told with so little sense of its narrative quality, and with so little attention to its distinctively technological character. Iron shipbuilding flourished mightily in the Thames shipyards until 1866, and then rapidly declined. Such a sudden reversal of fortune deserves more eloquent treatment than it receives here. Not even the astonishing histrionics that accompanied the construction and launch of I. K. Brunel’s Great Eastern are allowed to influence the prosaic and somewhat repetitive style of this account, even though Brunel’s problems with this ship illustrate vividly the difficulties of applying the new shipbuilding technologies to the industry on the Thames.

The reasons for the collapse of the industry were complex, and Arnold touches on most of them: the remoteness from sources of iron and coal; the relentless pressure to develop larger enterprises, which were more vulnerable to financial vicissitudes; the tradition of high wages for skilled workers on the Thames; and the sharp competition from Clydeside and operations in the North of England. The old pattern of management structures and labor relationships, so well established in the Thames yards, rendered them ill-equipped to cope with recession and stiffening competition. But it was the underlying technological factors of new materials, new sources of power, and novel methods of construction that placed London at such a profound disadvantage in relation to its competitors. The shipyards of the North came rapidly to exploit their advantages, and those of London virtually disappeared.

Just as heavy engineering had shifted northward toward Manchester and other places convenient to the coalfields, so shipbuilding, which had become virtually another kind of heavy engineering, moved in the same direction. There was nothing that the London shipyards could do to resist the powerful pull of these technological factors, and when the failure of the Overend and Gurney Bank in 1866 brought even the advantages of London as a financial center into question, the London industry went into a decline from which it never recovered. A few specialized enterprises endured with Admiralty support until World War I, but it was a losing battle, and soon thereafter significant ship construction disappeared from the Thames.

R. Angus Buchanan

Dr. Buchanan is emeritus professor…

Additional Information

ISSN 1097-3729 Print ISSN 0040-165X Pages pp. 610-612 Launched on MUSE 2003-08-27 Open Access No

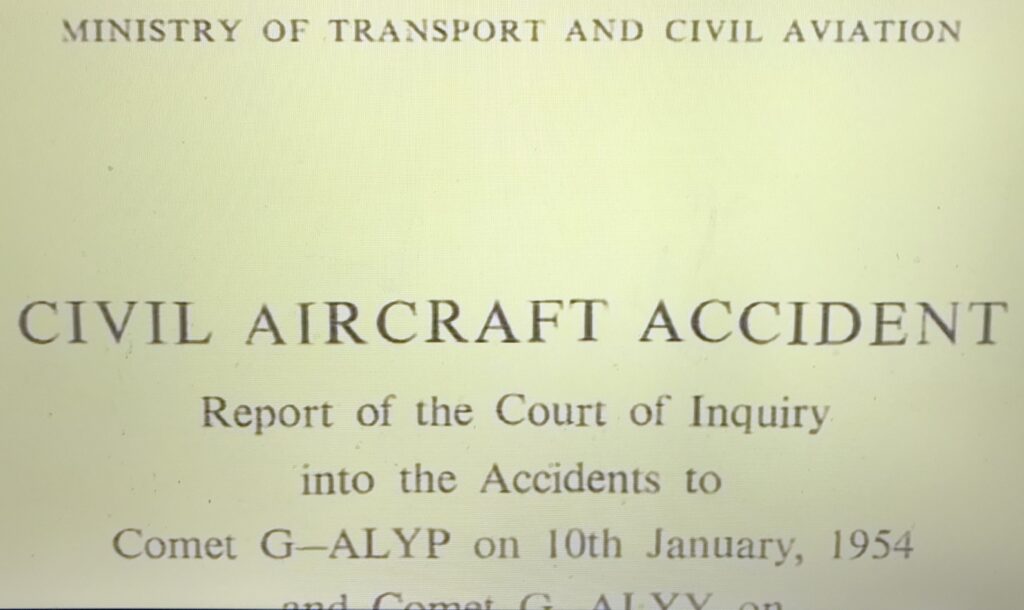

DH Comet – World’s 1st Jet Airliner. Posted April 20th 2021 by Robert Cook

My interest in aviation started before I went to school. I first saw a biplane in the sky above Winslow Church. I was out walking with my oracle, Aunt Flo. She also told me about the ill-fated R101 being test flown over our little town of Winslow. Then she told me how aircraft were used to bomb people, culminating with the nuclear bombs dropped on Hiroshima and Nagasaki.

My father fed my interest, buying model airplane kits representing all the airborne hardware of the two World Wars, which so stimulated flight. I rode in his brick lorry, delivering in the Hayes area in the mid 1950s. He would park by the chain link fence on the old A3, so I could watch the Comet 4 landing. An amazing sound and sight. I used to build mock-ups of war planes out of orange boxes and polythene in the garden, sitting in them making machine gun and engine noises, imagining I was a wartime hero as I’d seen in TV films and comics. Life was black and white, good and bad.

After my father’s death in 1962, I discovered the world of balsa wood flying models. I became addicted, receiving ‘Model Aircraft’ magazine every month. This led to my understanding of aerodynamics, which seems a simple idea looking back, but many died or were injured in its discovery. Leonardo da Vinci worked out the basics, but an engine was needed. Even gliders have to be towed into the air – wonderful when you are up there, as I know from experience.

To get and stay airborne, the air must flow faster over the top of the wings than below them. Hence the need for a precise cross sectional profile. When anything moves on the ground or in the air, it requires energy but encounters friction and energy loss. So at slow speeds a broader wing span is required. Hence Barnes Wallis’s swing wing concept. Delta wings, as used on Concorde, are better for jet speeds. Key moments, before all the computer stuff, were V1, VR and V2. VR is the rotate point when the nose is pulled up and the underside of the wing is exposed, so design issues and pilot error can be disastrous. There is potential for loss of lift. It was too easy to over rotate the DH Comet.

The DH Comet had wing issues, as well as metal fatigue, especially around the windows. Jets were fuel efficient at high altitude – hence the name jets associated with the jet stream. But air pressure reduces with altitude. Jets take off and land at higher speeds, so air pressure in the cabins must be quickly regulated, causing enormous airframe stress. Hence explosive decompression killing many Comet passengers.

This setback allowed Boeing and others in the U.S. to build on De Havilland’s ground breaking work. Their famous 707 set new standards, fouled only recently by the 737 Max 8, which was built for computers to do the fine trimming of air flow needed across such a large airframe and wing span, and which was nose heavy due to overlarge, forward mounted, fuel saving engines. These problems, following great loss of life, have apparently been solved.

As for De Havilland, Sir Geoffrey’s son, Geoffrey Jr., was killed in 1946 while test flying close to the sound barrier, and there is little left to remind us of the once great company’s works and aerodrome at Hatfield – which I visited a few years ago to buy training shoes. That is British industry.

Robert Cook

The de Havilland DH.106 Comet was the world’s first commercial jet airliner. Developed and manufactured by de Havilland at its Hatfield Aerodrome in Hertfordshire, United Kingdom, the Comet 1 prototype first flew in 1949. (Wikipedia)

- First flight: 27 July 1949
- Number built: 114 (including prototypes)
- Developed into: Hawker Siddeley Nimrod
- National origin: United Kingdom
- Manufacturer: de Havilland
- Primary users: BOAC; British European Airways; Dan-Air; Royal Air Force
- Retired: 14 March 1997 (Comet 4C XS235)

Mechanical Hydraulic Diggers

In 1897, the Kilgore Machine Company of America produced the Direct Acting excavator, the first all hydraulic excavator. This used four direct acting steam cylinders, doing away entirely with cables and chains. Being built almost entirely of steel, it was far sturdier and more hard wearing than previous designs.

We’ve come a long way, baby: the evolution of construction equipment hydraulics Posted April 20th 2021

June 13, 2019 By Mary Gannon

Modern mobile machinery has changed quite a bit. Here is a look at how construction equipment hydraulics have changed over the last couple hundred years.

By Josh Cosford, Contributing Editor

On a road near my home, there exists a hand-laid stone fence, perhaps 4 ft high and a hundred times as long. It was crafted from locally sourced rocks a century or so ago, and I drive its length in awe as I imagine the labor and time that went into its construction. The machinery to excavate, haul and lay heavy material was uncommon in the 1800s, so I can’t reason it was constructed using anything but many strong hands.

The construction industry is as old as farming, and as societal needs grew, so too did the requirement for improvements in construction. The industrial revolution grew our capacity to construct buildings and infrastructure exponentially. Light and moderate construction techniques built our homes and offices, while heavy and intense construction made the factories and the roadways to get there. The hand-laid stone fence was obviously a light construction project, but it’s the heavy and intense construction so well suited to hydraulic motivation that has been important to civilization.

Modern construction gathers steam

Steam power is a form of fluid power energy transfer, but instead of pressurized air or hydraulic fluid, heat energy is added to water until it turns to its gaseous form. This transformation creates pressure as gas volume increases, which was captured in actuators to power large machinery. This technology gathered steam, as it were, in the early 19th century, but records show as early as 1796 a steam-powered dredge was used to clear the beds of waterways in England.

In 1835, William Otis, cousin to American industrialist Elisha Otis of elevator fame, applied steam energy to create a single-bucket land excavator. Accepted as the first self-powered, land-based machine used for heavy construction, it revolutionized the building of railway lines. This patented machine was able to move 300 yd³ per day, where two men and a wheelbarrow would drag this task out to a fortnight.

Some fifty years later, Sir W. G. Armstrong built the first excavator using hydraulics, where it was used in the construction of docks. It was steam powered, but also employed cables with only hydraulic actuation on one function. A semi-interesting aside: Armstrong’s company eventually merged with Vickers Limited, but disappointingly after much research, I could find no link to the Vickers of hydraulic fame. Regardless, Armstrong’s machine didn’t work very well and left the door open for others. The first machine to use only steam-powered hydraulic actuators without the aid of wheels and cables was the Kilgore 2-1/2 Yard Steam Railway Shovel. This machine was productive, but like the Armstrong machine, it was limited to rail line construction.

Creating a modern standard

It would take nearly another century before excavators looked and operated as they do today. For most of this span, excavators would remain cable-operated or some type of steam, mechanical, cable and hydraulic hybrid. Demag (now Komatsu) created the first 360°, all hydraulic, track-driven excavator as we know it today. The 1954 B504 Hydraulikbagger, Figure 1, was powered by a 42-hp, 3-cylinder diesel and capable of 2.5 mph while carrying about a half yard of material. It was compact, efficient, agile and productive, especially for light and moderate construction projects.

So effective was the B504 that its construction features are now standard for the industry. Once excavators were gifted fully-hydraulic operation, construction equipment was capable of utility and productivity not previously possible. Decades earlier, the Ford Model T’s domination would pave the way (that’s right, I went there) for the development of interstate highways. The B504 was timed perfectly because the development of Eisenhower’s Interstate Highway System started shortly after. I’m not claiming the events were related in any way, but their timing ensured the construction industry in America would expand as never before.

Mobile construction equipment took form because of the inherent advantages of hydraulics; power density, controllability and reliability. Step one for hydraulic machinery was getting it all to work reliably and efficiently, but because construction is a competitive, low-margin industry, advancements came fast and hard. Productivity was chased, which needed the puzzle pieces of power, control and reliability to fall into place.

Early machinery was moderate pressure open loop, consisting of mostly gear and vane pumps running 1,000-2,500 psi. Even in the 1960s when hydraulic excavators were dominating their cable-operated counterparts, the technology advance was slow. OEMs saw the benefits hydraulics provided, so they applied the technology to loaders, scrapers and dozers, making them powerful and effective. But in the 60s, machining technology wasn’t able to provide the close tolerances required to make high pressure pumps, valves and actuators.

Higher pressures, sophisticated controls

As applied knowledge advanced, manufacturers realized high pressure was the key to productivity – and by “high pressure,” I mean 3,000 psi. Piston pumps can produce high pressure with efficiency, but they had to master tighter clearances and differing coefficients of expansion. Early variable displacement piston pumps used a swashplate with lever operation to control flow, providing an efficient speed control alternative to metering valves, which wasted energy.

The 1970s could be considered the decade of hydraulic creativity. To increase control and productivity, engineers were inventing clever ways to control hydraulics. The first hydrostatic drives were mastered and applied to loaders, enabling them to transition quickly and smoothly between forward and reverse motion. Caterpillar had the pressure compensated axial piston pump patented, and torque limiting was also developed in the decade of disco.

Torque limiting (also known as horsepower control) is a method to automatically limit flow inversely proportional to pressure. As pressure rises, flow drops, and when pressure drops, flow increases. This method gave the best of both worlds, allowing an excavator to behave as if its prime mover was twice the rated horsepower. The swing, boom, arm and bucket functions could all move rapidly with no load, but then the pump would cut flow as pressure rises, supplying the force needed for heavy work.
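The inverse relationship can be sketched in a few lines of Python. This is only an illustration, assuming the common rule of thumb that hydraulic horsepower equals psi × gpm ÷ 1714 and ignoring pump and engine losses. The function names and the 50-hp, 60-gpm figures are made up for the example and do not describe any real machine.

```python
# Rule-of-thumb constant: hydraulic hp = psi * gpm / 1714 (losses ignored).
HP_CONSTANT = 1714.0


def hydraulic_power_hp(pressure_psi: float, flow_gpm: float) -> float:
    """Hydraulic power delivered at a given pressure and flow."""
    return pressure_psi * flow_gpm / HP_CONSTANT


def torque_limited_flow(pressure_psi: float,
                        power_limit_hp: float,
                        max_flow_gpm: float) -> float:
    """Flow a torque-limited pump would deliver at a given pressure.

    Below the "knee" pressure the pump gives full flow; above it, flow
    is cut back so that pressure * flow never exceeds the power limit.
    """
    if pressure_psi <= 0:
        return max_flow_gpm
    flow_at_limit = power_limit_hp * HP_CONSTANT / pressure_psi
    return min(max_flow_gpm, flow_at_limit)


if __name__ == "__main__":
    # Hypothetical pump: 60 gpm maximum flow behind a 50-hp prime mover.
    for pressure in (500, 1000, 2000, 3000):
        flow = torque_limited_flow(pressure, power_limit_hp=50.0,
                                   max_flow_gpm=60.0)
        power = hydraulic_power_hp(pressure, flow)
        print(f"{pressure:>5} psi -> {flow:5.1f} gpm, {power:4.1f} hp")
```

At light load the hypothetical pump runs at full flow and draws well under its limit; once pressure climbs past the knee, flow falls away so the power drawn stays pinned at the limit.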

By the 1980s, cable operation was nearly extinct in the construction industry. So effective was hydraulics, that even the control functions were hydraulic pilot operated, which was an older technology. The brakes, the steering and the machine functions could be worked from the cab using pilot valves. Try to explain to your teenager that a joystick used to have oil running through it, and the distance and vigor the joystick moved would push fluid at the spools of the directional control valves with the same effort.

Enter load sensing

However, the proliferation of load sensing technology in the 1980s freed up horsepower, and in combination with improving machining tolerances, pressure (and therefore power density) rapidly increased. Load sensing allows the hydraulic pump to provide the exact flow and pressure required by the actuators, adding only a little extra energy to create pressure drop. It wasn’t uncommon to now see standard 4,000 psi for the implement functions and more than 5,000 psi for the travel circuit. With load sensing, running 5,000 psi doesn’t cripple flow when you’re limited with input horsepower.
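A rough way to see why load sensing frees up horsepower is to compare the input power of the two approaches. The sketch below is a simplification under stated assumptions: the same psi × gpm ÷ 1714 rule of thumb, no losses, a fixed-displacement pump that always pushes its full flow at relief pressure, and a 300 psi load-sensing margin. The function names and all the numbers are hypothetical.

```python
# Rule-of-thumb constant: hydraulic hp = psi * gpm / 1714 (losses ignored).
HP_CONSTANT = 1714.0


def fixed_pump_power_hp(relief_pressure_psi: float,
                        pump_flow_gpm: float) -> float:
    """Input power when a fixed pump delivers full flow at relief pressure.

    Simplified worst case: whatever flow the actuator does not use is
    dumped over the relief valve as heat.
    """
    return relief_pressure_psi * pump_flow_gpm / HP_CONSTANT


def load_sensing_power_hp(load_pressure_psi: float,
                          demand_flow_gpm: float,
                          margin_psi: float = 300.0) -> float:
    """Input power when the pump supplies only the demanded flow,
    at load pressure plus a small margin (the assumed pressure drop)."""
    return (load_pressure_psi + margin_psi) * demand_flow_gpm / HP_CONSTANT


if __name__ == "__main__":
    # Hypothetical duty point: the actuator needs 10 gpm at 1,500 psi,
    # on a machine with a 30 gpm pump and a 4,000 psi relief setting.
    fixed = fixed_pump_power_hp(4000.0, 30.0)
    sensed = load_sensing_power_hp(1500.0, 10.0)
    print(f"fixed pump at relief: {fixed:5.1f} hp")
    print(f"load sensing:         {sensed:5.1f} hp")
    print(f"horsepower freed up:  {fixed - sensed:5.1f} hp")
```

The gap between the two figures is the horsepower that is no longer turned into heat, which is what leaves room to raise pressure without crippling flow.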

Although mobile construction equipment had the most advanced hydraulic systems in existence, they fell way short when it came to electronic control. Even electrical control was not a trusted method of operating pumps or valves. The 1990s didn’t see a lot of advancement with construction equipment, especially in the way that hydraulics were controlled. Digital machine monitoring existed, but most of the technology was supplied for operator comfort — climate control, stereo systems and 12-V chargers.

The advent of electronic controls

The turn of the century saw machine OEMs strong-armed into progress. The looming Tier 4 emissions standards forced manufacturers to rethink the design and implementation of construction machinery. Machine functions were increasingly controlled electronically, where hydraulic joysticks were replaced by proportional control, cabs were fitted with LCD digital displays, and machine maintenance intervals were monitored electronically. However, pressure hadn’t increased in three decades, remaining in the 5,000 psi range well into the late 2000s.

Electronics are now prolific in the construction industry. Just as with your car, your excavator has programmable performance modes. You can run in “eco” mode, or with the adjustment of a convenient dial, ramp it up to high-power mode. GPS navigation, automatic grade compensation, traction control and hybrid drive systems are working their way into modern construction machinery.

The crawler dozer, pictured, is a machine of surprising technological advancement. High-end models have individually controlled hydrostatic drives for left and right tracks, themselves each closed-loop electronically controlled. The dozer’s path is maintained based on operator control, and the software accommodates regardless of load, turning angle or traction. They are available with software applications, real-time data logging and customizable machine responses. If one operator prefers feather-touch, high response from her controls, while another prefers a slower, attenuated control method, both can save their user profile preferences. The machine can’t be started until the operator inputs their login, at which point the profile is loaded.

The value of power density is not lost on dozer manufacturers. New machines are closing in on 7,000 psi, allowing higher torque from smaller, lighter machines and realizing improved fuel economy. Lighter machinery also makes transportation to and from worksites much easier and provides a side benefit of reduced ground compaction.

What does the future hold?

So, what does the future hold for construction equipment hydraulics? It’s obvious that pressure will continue to rise, enabling smaller, lighter machines to achieve productivity previously enjoyed by only large, high-powered equipment. Advanced materials will permeate machinery, using both carbon fiber and 3D-printed metals to increase strength while reducing weight.

Digital control with increased saturation of cyber-physical systems will be commonplace. A construction site workday will be planned from a computer control station, where all work is carried out remotely with operator-less machinery. As well, the continued electrification will see engines replaced with electric prime movers and battery packs. At some point, machines will be fully autonomous, where a digitally scanned topographical map of the territory is inputted, and the machine is told how to grade or excavate to match the desired output.

Industrial environments increasingly see electric actuators, eschewing fluid power altogether. However, electric actuators will never replace hydraulic actuators in construction machinery. I make this bold prediction because electric cylinders and motors can never be made so small yet so powerful as to replace hydraulics. A 100-hp, bent-axis piston motor can fit into a shoebox, and that’s at current industry pressure levels.

Where I see electric actuation expanding is with power delivery. Instead of central power units and distribution through hydraulic control networks, actuators will be self-contained integrated actuators. The servomotor and pump combination will be built into the hydraulic cylinder, which will include a small reservoir and manifold containing all hydraulic controls. These units will be modular, configurable and controlled via wireless networks, while still providing the high force that makes hydraulics king.

The modern mobile construction machine has come a long way from the steam-powered machines of the industrial revolution. Continued advancement will see machines become more productive, efficient and powerful, while the reduction of machine operators will see worksites become safer, especially as robots replace construction workers. But I doubt I’ll ever see another newly constructed stone fence at the hands of robots.

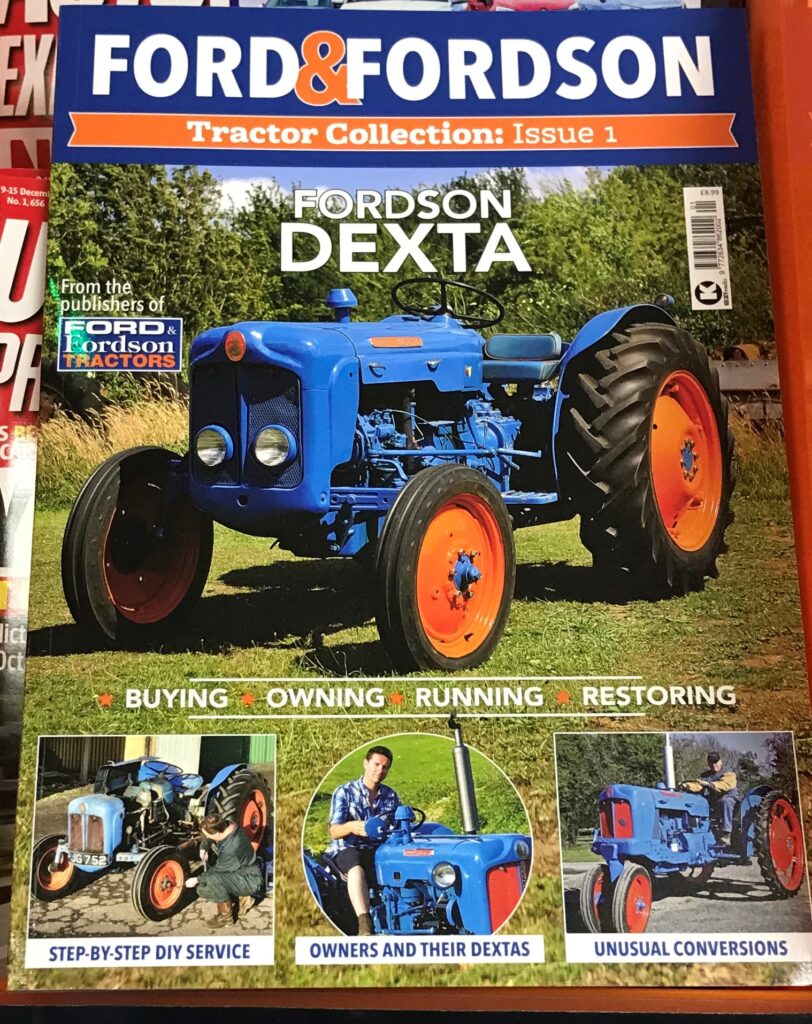

Agripower April 10th 2020

Why New York City Stopped Building Subways Posted March 7th 2021

Nearly 80 years ago, a construction standstill derailed the subway’s progress, leading to its present crisis. This is the story, decade by decade.

- Jonathan English

Photo by Madison McVeigh/CityLab

In the first decades of the 20th century, New York City experienced an unprecedented infrastructure boom. Iconic bridges, opulent railway terminals, and much of what was then the world’s largest underground and rapid transit network were constructed in just 20 years. Indeed, that subway system grew from a single line in 1904 to a network hundreds of miles long by the 1920s. It spread rapidly into undeveloped land across upper Manhattan and the outer boroughs, bringing a wave of apartment houses alongside.

Then it stopped. Since December 16, 1940, New York has not opened another new subway line, aside from a handful of small extensions and connections. Unlike most other great cities, New York’s rapid transit system remains frozen in time: Commuters on their iPhones are standing in stations scarcely changed from nearly 80 years ago.

Indeed, in some ways, things have moved backward. The network is actually considerably smaller than it was during the Second World War, and today’s six million daily riders are facing constant delays, infrastructure failures, and alarmingly crowded cars and platforms.

Why did New York abruptly stop building subways after the 1940s? And how did a construction standstill that started nearly 80 years ago lead to the present moment of transit crisis?

Three broad lines of history provide an explanation. The first is the postwar lure of the suburbs and the automobile—the embodiment of modernity in its day. The second is the interminable battles of control between the city and the private transit companies, and between the city and the state government. The third is the treadmill created by rising costs and the buildup of deferred maintenance—an ever-expanding maintenance backlog that eventually consumed any funds made available for expansion.

To see exactly how and why New York’s subway went off the rails requires going all the way back to the beginning. What follows is a 113-year timeline of the subway’s history, organized by these three narratives (with the caveat that no history is fully complete). Follow along chronologically or thematically for the historical context of the system’s sorry state, or use a playful “map” of the subway’s decline.

1904: First subway opens

The private Interborough Rapid Transit company opened the first underground subway line in 1904, stretching from West Harlem to Grand Central. After taking over the existing elevated railways, it created a near-monopoly on rapid transit in Manhattan and the Bronx. The Brooklyn Rapid Transit company dominated the elevated transit business in that borough, as well as its connections to Manhattan.

1913: The “Dual Contracts”

In an agreement called the “Dual Contracts,” the city entrusted the two private subway companies with a radical expansion of the system. Almost immediately, municipal leaders regretted the decision. Many were dissatisfied with the financial return from the investment of over $200 million—more than half the total cost of construction.

The dispute went beyond mere finance, however: The subway became a symbol of the battle between public and private interest, and a populist touchstone for a succession of mayors. Their most important leverage was control of the subway fare: By refusing to let the private companies charge more than a nickel for decades, inflation meant that in 1948 riders were effectively paying less than half what they had been paying in 1904.

1922: Independent Subway

Opposition to the private transit duopoly was the centerpiece of Mayor John Hylan’s administration. He announced a vast new “Independent” subway system, to be built and owned by the municipal government. Unlike earlier subway lines, which pushed deep into undeveloped territory, many of the IND lines closely paralleled existing private routes in order to compete with them.

The real estate industry was one of the most important constituencies supporting the development of the subway system in the early years. Developers enjoyed a symbiotic relationship with the subway, which was extended into empty fields that were then swiftly and profitably blanketed with apartment houses whose residents then filled the trains. With the construction of the IND, that bargain began to break down—they saw new subways as more of a tax burden than a generator of big speculative profits.

1939: World’s Fair

As visitors to New York’s 1939 World’s Fair gazed on General Motors’ vision of the world to come at its Futurama exhibit, they didn’t see new trains and subways. Instead, they saw cars traveling quickly on wide new superhighways to bungalows in a bucolic landscape. The car was viewed as the height of modernity; many dismissed public transit as a grimy relic of an earlier age. The postwar federal government would spend what it took to make the suburban dream come true.

1940: City takes over the private subways

Mayor Fiorello LaGuardia took advantage of the disastrous finances of the BMT and IRT, ravaged by the Depression and the ban on fare increases, to acquire both companies. That strained the city’s resources, with a total cost of $326,248,000. The cost was not much lower than that of building the entire IND network, and while it did unify the system, it didn’t produce a single additional mile of subway.

1940: Sixth Avenue subway opens

The Sixth Avenue line was one of the core segments of the IND’s Manhattan network. It was to be followed soon after by the Second Avenue line, but New Yorkers ended up waiting over 70 years for even a tiny segment of that project to be completed. The Sixth Avenue subway was an astonishing engineering achievement: The work had to weave around both the PATH train tunnel and the supports for the busy elevated line above. Such wizardry did not come cheaply, and it was emblematic of the high standards—and costs—on all the new IND lines.

The IND lines built by the municipal government cost an average of $9 million per mile, which was 125 percent higher than the earlier “Dual Contracts” lines. The cost per mile of Sixth Avenue was about four times as high as the original subway. This pattern of high construction cost persists to the present day.

1946: Subway ridership peaks

Subway ridership has never been as high as it was in 1946, and a precipitous decline began in the late 1940s as automobiles became widely available. The busiest station in the system, Times Square, saw its ridership drop from 102,511,841 riders in 1946 to 66,447,227 riders in 1953. Subway expansion would become increasingly difficult to justify as New Yorkers were abandoning the existing system—even though outward expansion was just what was needed to keep the subway as the region’s primary mode of transportation.

1947: End of the five-cent fare

With the subways now in municipal hands, a doubling of the fare was finally negotiated. Years of deferred maintenance by the cash-strapped private companies had become increasingly evident. But by then, fare hikes only exacerbated the problem of declining ridership.

The current New York City subway map altered to highlight all of the lines whose construction started after World War II. Additional lines not shown were converted or repurposed from existing railway lines. Photo by Jonathan English/Madison McVeigh/MTA/CityLab

1951: Transit Bond issue

After 1945, the City of New York found itself in constrained financial circumstances. The growth and modernization of its infrastructure necessitated substantial borrowing, but the city was already burdened by an enormous Depression-era debt and faced a state-mandated debt limit. In November 1948, the Board of Transportation recommended that the city seek a $500 million exemption from the debt limit to permit the revival of the Second Avenue Subway plan, along with several outer-borough projects like the Utica Avenue line that mayors since have continued to tout, most recently Bill de Blasio. (Indeed, the wish list for subway construction has changed little to the present day.) The request passed in a statewide referendum on November 6, 1951.

But rather than being used as promised to continue the prewar pattern of expansion, most of the money was instead diverted to eroding the mountain of deferred maintenance that had built up during the war and the Depression.

1953: Creation of the Transit Authority

To ensure that fare policy never again became captive to electoral politics, many civic leaders advocated for the creation of an independent state authority to administer the city’s transit system, comparable to the Port Authority or Robert Moses’ Triborough authority. The subways were thus handed over to the state-created Transit Authority.

But the institutional reshuffle did not resolve the fundamental financial problems of the system; ridership continued to decline and maintenance remained deferred. The state and municipal governments were both unwilling to provide the subsidy that would have been needed to adequately sustain the system. Unlike highways, transit was still seen as a business that should make a profit, and not as a public service.

1956: The Interstate Highway Act

With the encouragement of President Eisenhower, Congress passed an act providing lavish federal funding for a cross-country network of expressways. The 1950s saw the construction of over a dozen major expressways and bridges in the New York region. This construction program rivaled or even exceeded the earlier subway boom. And unlike the subways, all of it benefited from federal largesse. Celebrating the completion of the Bruckner Expressway in the Bronx, Mayor Robert Wagner boasted, “This two and one-half mile stretch of elevated expressway cost more than $34 million, of which 90 percent was put up by the federal government.”

1950s: Growth of the suburbs

By the postwar period, the majority of population growth in the New York region was taking place outside of the five boroughs. New York City no longer dominated the region to the same extent that it once had, and the growing political power of the suburbs hindered funding requests for subway projects that many suburbanites believed did not benefit them. Manhattan and Brooklyn shrank from 1940 to 1960, while Nassau and Suffolk counties essentially tripled in population.

Yet New York City still planned subway projects as if the suburbs didn’t exist. In the postwar period, most greenfield real estate development shifted out of the city entirely and into the surrounding counties. Instead of being built around transit, new developments were centered on expressways.

1965: Creation of the Metropolitan Transportation Authority

In an effort to address the geographic and financial limitations of the Transit Authority, Governor Nelson Rockefeller created a new regional authority that would ultimately control the subways and commuter railways. It was given the toll revenue from the Triborough Authority’s bridges and tunnels, which had been the financial basis of Robert Moses’ bureaucratic empire, to provide the revenue needed to subsidize the transit system.

But while the new authority’s service area stretched beyond the five boroughs for the first time, it never made efforts to turn the subway and commuter railroads into a combined regional transit system. (For such a model, consider Paris’ Regional Express Network.) New York may be an extraordinarily transit-oriented city, but once the municipal boundary is crossed into Nassau and Westchester, transit, especially for trips other than commutes to Manhattan, is nearly as foreign a concept as it is in a wealthy Los Angeles suburb.

1968: Program for Action

The new MTA announced the last of its comprehensive plans to expand the network on the pharaonic scale of prewar construction. It proposed a number of new lines in the outer boroughs, a full Second Avenue subway, and a “superexpress” line along the LIRR in Queens. Construction began on several of the projects, but even those were only completed in truncated form or abandoned entirely. Never again would the MTA seriously plan major network expansion. Instead, the only discussion is of projects like the new Second Avenue line or 7 train extension, which are of a scale that would barely have registered on the city’s consciousness in the 1910s and ‘20s.

1973: Closure of the Third Avenue Elevated

As transit ridership dropped from prewar levels, segments of the city’s subway and elevated system were abandoned entirely. While elevated lines had previously been closed to be replaced with adjacent subway lines, they were now closed without their promised replacements ever being built, including, infamously, the Second Avenue elevated line in Manhattan. The Bronx segment of the Third Avenue Elevated was the last major segment of the system to be shut down without replacement.

1975: Fiscal crisis and Second Avenue abandonment

The centerpiece of the Program for Action, the Second Avenue Subway, had begun construction in the early 1970s. But with the complete disintegration of the city’s finances, construction simply could no longer be supported. The disconnected tunnel segments have lain underused beneath the streets ever since. Several bond issues intended to finance subway expansion had also been defeated, and the limited funds that were available ended up being diverted to the system’s dilapidated trains and stations.

1988: Opening of three-stop Jamaica extension

The 1968 Program for Action proposed a number of projects intended to improve subway service in some of the neighborhoods that had sprouted up in the postwar years, particularly in Queens. Unfortunately, few of the projects were built. One small remnant was the extension of the E train to Jamaica; the J and Z trains were also moved off a nearby elevated line into the new tunnel along Archer Avenue. But a combination of limited funds and community opposition derailed more substantial expansion plans. Even simple extensions along existing rail corridors had become out of reach.

Photo by Jonathan English/Madison McVeigh/CityLab

2017: First phase of the Second Avenue subway opens

The Second Avenue Subway has been part of the city’s transit plans since the creation of the IND in the 1920s. It was intended to replace two elevated lines that shut down in the 1940s and 1950s respectively. An attempt to begin construction was abandoned due to the financial crisis of the 1970s and only a few tunnel segments were built. Over the years, plans were scaled down, and its length was trimmed to only three stops on the Upper East Side. The prospect for future phases remains unknown.

Beyond: The high cost of forgotten history

Many other world cities also slowed their pace of subway construction in the early postwar years. They, too, succumbed to the appeal of the automobile, or struggled with debt and destruction accumulated during the Depression and Second World War. But by the 1960s, this had changed. London opened two new Underground lines in the 1960s and 1970s. Paris began its vast RER project to connect all of its commuter rail lines, linking the rapidly growing suburbs with the historic core.

By contrast, New York’s subway system had deteriorated to such a dismal state that nearly all available funds had to be diverted to basic maintenance and overhaul. The city’s declining population and fiscal troubles made expansion nearly impossible.

Now, New York’s economy has turned around, the population is growing, and the city is in a relatively good financial position. Still, the maintenance backlog is devouring capital spending. Staggering subway construction costs—by far the highest in the world—mean that whatever funding is available does not go very far at all. Old problems that precluded subway construction in the past echo in the present day: There is still no meaningful integration between the subway and suburban transit, the mayor and governor carry on the same types of jurisdictional battles, and the subway has not managed to step off the treadmill of deferred repairs. These problems have deep roots, and overcoming them will not be a simple matter.

Most challenging of all is the shockingly high cost of subway construction. Anyone would expect costs to have risen since the early days of the system, but the cost of the proposed Second Avenue line is nearly eight times what a comparable project cost in the 1980s, when adjusted for inflation. Procurement problems and labor relations issues are partial explanations, but the most important factor may be the wholesale loss of experience resulting from the decades-long gaps in construction. One of the distinct characteristics of European systems with much lower building costs is continuous construction: Every time they complete a new line, they are able to apply the lessons from the previous one. But in New York, from the opening of the Archer Avenue Line in 1988 to the construction of the 7 train extension and Second Avenue lines in the 2010s, virtually all the experience and knowledge that had been built up in subway construction had atrophied.

The same situation risks repeating itself, as the Second Avenue construction has been completed with no new construction immediately on the horizon. The subway’s cost-induced construction paralysis becomes more severe with every passing decade. We must learn from history in order to break it.

Jonathan English is a Ph.D. candidate in urban planning at Columbia University.

The real reason female engineers are still a rarity in the UK

January 24th 2021

By Diane Boon

Published Friday, July 31, 2020

Evidence is growing that perceptions about status, not gender stereotypes, are behind the profession’s inability to attract more women.

There’s no denying that a gender imbalance exists when it comes to engineering. In the UK, only 12 per cent of engineers are female. A quick Google search will bring up countless articles busting myths about STEM subjects being a man’s domain (even though female students often outperform their male classmates in these subjects). More often than not, such reports point at a seemingly endless number of barriers presented to women who would otherwise become engineers — a lack of role models, sexism in the workplace, a concern over progression prospects.

Of course, these are all valid obstacles. But a greater issue affects British engineering in general, and the lack of female engineers is a symptom of it. After all, if it were simply a case of engineering throwing up obstacles for women, why does Spain have relatively equal numbers of male and female engineers?

What if it’s not simply a case of engineering shutting its doors on a pool of potential talent? What if people — men and women alike — just aren’t knocking at the door of British engineering?

When it comes to addressing the lack of female engineers, often we will hear phrases like “It’s because girls are brought up to believe they can’t do maths!” or “It’s because we treat engineering as a ‘man’s job’.”

This might have been true many decades ago, but in the modern day it’s been quite some time since such strong gender biases were in play at an educational level. There’s still work to be done, of course, but the idea of an entire sector being cordoned off as ‘careers for men’ and ‘careers for women’ has long since been kicked to the curb. Female students are under no illusions – engineering is, as far as they are concerned, as valid an option for them as it is for their male classmates.

Research by EngineeringUK shows how this perception persisted between 2015 and 2019.

As the graphs show, girls don’t believe engineering is ‘just for boys’. In fact, the perception is only increasing, with 94 per cent of girls at school-leaving age (16–19) in 2019 saying they agreed that engineering is suitable for boys and girls. And 81 per cent of their male counterparts agreed.

It’s not that girls don’t think they can be engineers, then. Perception of ability isn’t the problem. Perception of engineering as a desirable career, however, is certainly in play. And it’s getting worse.

The perception of engineering as a desirable career path is dropping among 11-14-year-old girls, and 16-19-year-old girls in particular. This could be cited as proof that more needs to be done to break down barriers and build up role models for potential female engineers in order to make the career path look more attainable and aspirational for women.

However, it’s important to note that boys are not much more enthralled by the idea of becoming engineers either, with a slight decrease also present in the last four years.

Engineering in the UK, it seems, is simply not showcasing itself as a desirable career path to either gender, and the impact shows more in the lack of female engineers because the remnants of gendered stereotypes amplify the overall problem.

But why is engineering not appealing to men or women?

This isn’t a global problem. The UK ranks lowest in Europe when it comes to female representation in its engineering workforce.

According to civil engineer Jessica Green, the issue is certainly on a national scale. In the UK, engineering simply isn’t presented with any of the glamour or prestige that parallel occupations, such as architecture, are afforded. In fact, Green admits she herself “turned [her] nose up at engineering”, believing that to go down that route would mean spending her career “dressed in overalls working in tunnels”.

That, she points out, is the concept of engineering that the UK pushes out. Despite the years of academic study and on-the-job training that many engineering roles require, for some reason, engineering is denied its rightful sense of achievement and prestige.

Meanwhile, as Green also points out, being able to call yourself an engineer in Spain is certainly held in high regard. There, you can’t call yourself an engineer at all without going through a difficult six-year university process, after which the title of ‘engineer’ is awarded. It’s held in the same regard as becoming a doctor. It’s little wonder then that Spain enjoys a relatively even number of male and female engineers.

Meanwhile, in the UK the word ‘engineer’ is used far more loosely. Not only that, but there’s a sense of ambiguity in the UK regarding what an engineer is. A lack of representation compared to other prestigious roles, such as doctors or lawyers, means that many people simply think of overalls and hard hats when it comes to engineers — despite the fact that plenty of engineers work in office environments or in a digital field.

It’s clear that engineering as a whole needs to rebrand itself in the UK, and not just for women. Female students in the UK are perfectly confident in their ability to become engineers — instead of a feeling that they need to prove themselves to the engineering sector, it is, in fact, the engineering sector that needs to prove itself to potential employees.

By building up the prestige of engineering and holding it in the same high regard as our European neighbours, the UK engineering workforce would no doubt begin to see a much stronger and swifter change in terms of gender representation within engineering.

To be a woman in engineering — as with everything in life — you need to work hard. But so do the men. Being a woman has neither helped nor hindered my career in this incredible field. What engineering needs to do smarter is raise its profile, make itself more appealing to future generations — it needs to reposition itself.

Diane Boon is director of commercial operations at structural steelwork company Cleveland Bridge.

Comment: I had opportunities in both engineering and chemistry when I was young. I wasted them because they didn’t seem cool. I can recall looking out of the laboratory window, wearing my white coat, and envying the cool guys in their suits. It was a paint factory; my job was research in marine weathering because we supplied the MOD and private industry. I had no positive self-image about what I was doing and started bunking off my day release at college. My boss, also Mr Cook but no relation, started asking me complicated questions and I just wasn’t doing the academic side, so I quit to go down to Portsmouth to see my girlfriend. The overall head of research and development, Mr Bishop, told me I was like a highly strung racehorse that needed blinkers. I didn’t appreciate the metaphor then, but I do now, rather belatedly at 70.

My father had developed my interest in vehicle engineering, loads and materials. I even built a petrol-driven go-kart after he died, when I was 12 – though I found science boring. I was always building things and loved making balsa aircraft. I taught myself about aerodynamics and the ATC took us flying. Motor racing was exciting and I wanted to be like Colin Chapman. Then I read a book about Isambard Kingdom Brunel when I was at secondary school. I started thinking I would like to be a Civil Engineer, but with my father dead, and the family needing money, I went delivering papers in the early morning and working on the farm at night. In the late 1960s, I got a job in the paint factory warehouse, where they liked the way I reorganised their chaotic storage system. That led to me starting in the laboratory, but I had no plan.

So I went to university two years late, reading social science, majoring in economics with a minor in economic history – spending all 20 weeks of vacation in each of my 3 years working on large construction sites. The economic history excited my interest in the wonders of Victorian engineering.

Just out of university, opportunity knocked when I was back home. The No 1 Divisional County Highways boss asked me, while we were in the pub, if I would like to follow my long-dead great-uncle Harry, a County Highways engineer and local legend, and work for the county. I did for 6 months, out on the roads, learning before college, but didn’t like the image, so I quit. A few years later I got the chance to work and study in engineering for the Chileans, but that foolish sense of wanting something cleaner, and more obvious, to do led me to work as a teacher, initially in maths and P.E.

There was nothing about that maths curriculum in the 1980s to stimulate boys or girls into the practicalities of maths. I recall a large 5th year boy (now called 11th) who gave most teachers trouble, though not me because I treated him as an individual, not a problem. He was classed as remedial, treading water before the dole queue. He sat at a table in front of my desk, usually looking depressed and sad. One day he said ‘Sir, you like trains, don’t you?’ He had seen me reading books about railways. I said ‘Yes.’ He became very enthusiastic, saying ‘Me an’ me dad make trains, models, would you like to see pictures?’ Sympathetically, I said ‘Yes’.