July 19th 2024

What is immunotherapy? Cancer Research UK, https://www.cancerresearchuk.org › treatment › what-is…

Immunotherapy uses our immune system to fight cancer. It works by helping the immune system recognise and attack cancer cells. You might have immunotherapy …

What exactly does immunotherapy do?

Immunotherapy is treatment that uses a person’s own immune system to fight cancer. Immunotherapy can boost or change how the immune system works so it can find and attack cancer cells.

July 17th 2024

‘Supermodel granny’ drug extends life in animals

Health and science correspondent


A drug has increased the lifespans of laboratory animals by nearly 25%, in a discovery scientists hope can slow human ageing too.

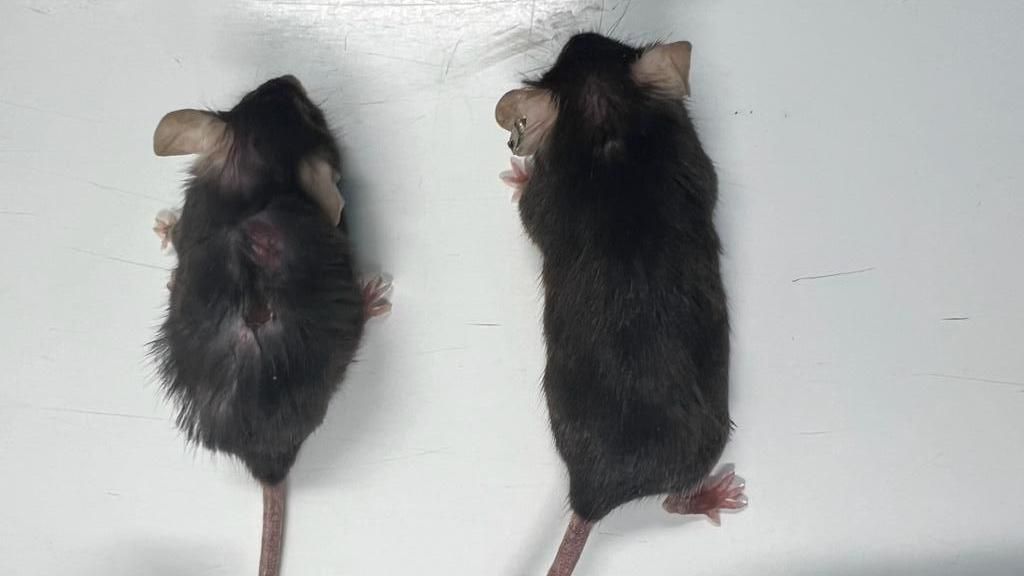

The treated mice were known as “supermodel grannies” in the lab because of their youthful appearance.

They were healthier, stronger and developed fewer cancers than their unmedicated peers.

The drug is already being tested in people, but whether it would have the same anti-ageing effect is unknown.

The quest for a longer life is woven through human history.

However, scientists have long known the ageing process is malleable – laboratory animals live longer if you significantly cut the amount of food they eat.

Now the field of ageing research is booming as researchers try to uncover – and manipulate – the molecular processes of ageing.

The team at the MRC Laboratory of Medical Sciences, Imperial College London and Duke-NUS Medical School in Singapore were investigating a protein called interleukin-11.

Levels of it increase in the human body as we get older. It contributes to higher levels of inflammation, and the researchers say it flips several biological switches that control the pace of ageing.

Longer, healthier lives

The researchers performed two experiments.

- The first genetically engineered mice so they were unable to produce interleukin-11

- The second waited until mice were 75 weeks old (roughly equivalent to a 55-year-old person) and then regularly gave them a drug to purge interleukin-11 from their bodies

The results, published in the journal Nature, showed lifespans were increased by 20-25% depending on the experiment and sex of the mice.

Old laboratory mice often die from cancer; however, the mice lacking interleukin-11 had far lower levels of the disease.

And they showed improved muscle function, were leaner, had healthier fur and scored better on many measures of frailty.

Video caption: See the difference between the mice unable to make interleukin-11 on the left and the normally ageing mice on the right.

I asked one of the researchers, Prof Stuart Cook, whether the data was too good to be believed.

He told me: “I try not to get too excited, for the reasons you say, is it too good to be true?

“There’s lots of snake oil out there, so I try to stick to the data and they are the strongest out there.”

He said he “definitely” thought it was worth trialling in human ageing, arguing that the impact “would be transformative” if it worked, and that he was prepared to take it himself.

But what about people?

The big unanswered questions are whether the same effect could be achieved in people, and whether any side effects would be tolerable.

Interleukin-11 does have a role in the human body during early development.

People are, very rarely, born unable to make it. This alters how the bones in their skull fuse together and how their teeth emerge, and it affects their joints, which can need surgery to correct. It also has a role in scarring.

The researchers think that later in life, interleukin-11 is playing the bad role of driving ageing.

The drug, a manufactured antibody that attacks interleukin-11, is being trialled in patients with lung fibrosis. This is where the lungs become scarred, making it harder to breathe.

Prof Cook said the trials had not been completed, but the data suggested the drug was safe to take.

This is just the latest approach to “treating” ageing with drugs. Metformin, a type-2 diabetes drug, and rapamycin, which is taken to prevent organ transplant rejection, are both being actively researched for their anti-ageing qualities.

Prof Cook thinks a drug is likely to be easier for people than calorie restriction.

“Would you want to live from the age of 40, half-starved, have a completely unpleasant life, if you’re going to live another five years at the end? I wouldn’t,” he said.

Prof Anissa Widjaja, from Duke-NUS Medical School, said: “Although our work was done in mice, we hope that these findings will be highly relevant to human health, given that we have seen similar effects in studies of human cells and tissues.

“This research is an important step toward better understanding ageing and we have demonstrated, in mice, a therapy that could potentially extend healthy ageing.”

Ilaria Bellantuono, professor of musculoskeletal ageing at the University of Sheffield, said: “Overall, the data seems solid, this is another potential therapy targeting a mechanism of ageing, which may benefit frailty.”

However, she said there were still problems, including the lack of evidence in patients and the cost of making such drugs, adding that “it is unthinkable to treat every 50-year-old for the rest of their life”.


June 19th 2024

Y chromosome is evolving faster than the X, primate study reveals

The male Y chromosome in humans is evolving faster than the X. Scientists have now discovered the same trend in six species of primate.

June 16th 2024

Are animals conscious? How new research is changing minds


Pallab Ghosh

Science Correspondent

Charles Darwin enjoys a near god-like status among scientists for his theory of evolution. But his ideas that animals are conscious in the same way humans are have long been shunned. Until now.

“There is no fundamental difference between man and animals in their ability to feel pleasure and pain, happiness, and misery,” Darwin wrote.

But his suggestion that animals think and feel was seen as scientific heresy by many, if not most, animal behaviour experts.

Attributing consciousness to animals based on their responses was seen as a cardinal sin. The argument went that projecting human traits, feelings, and behaviours onto animals had no scientific basis and there was no way of testing what goes on in animals’ minds.

But if new evidence emerges of animals’ abilities to feel and process what is going on around them, could that mean they are, in fact, conscious?

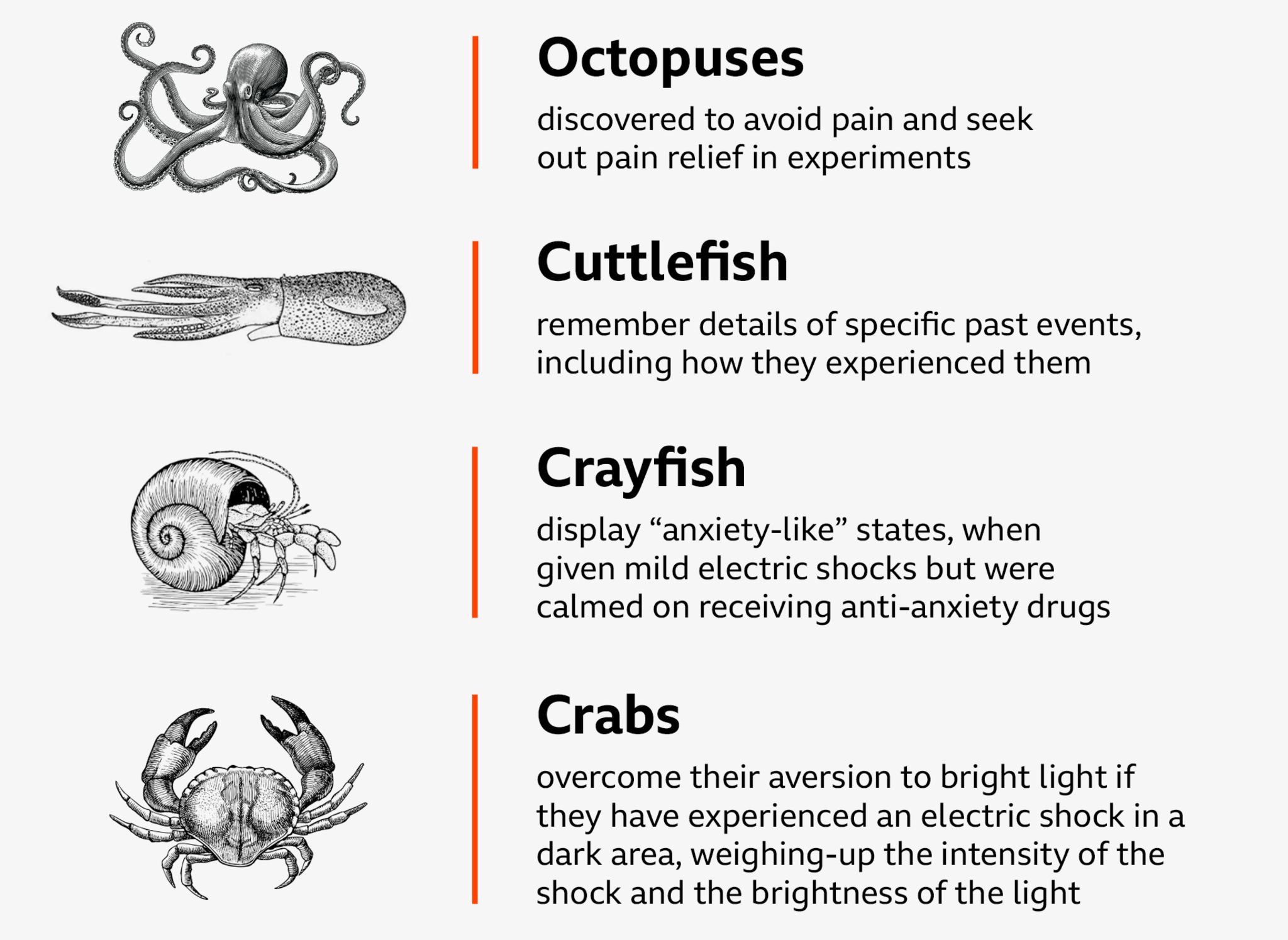

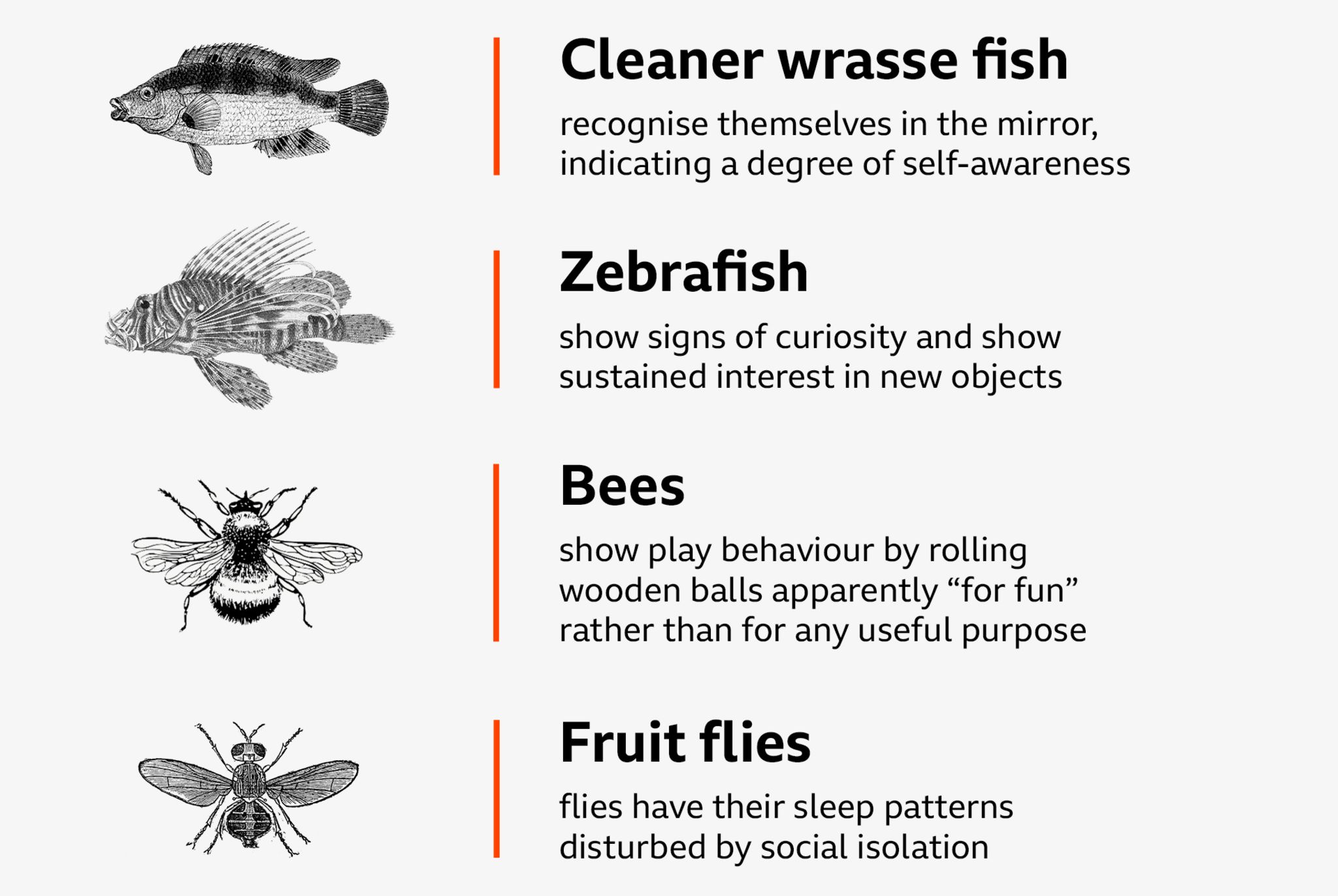

We now know that bees can count, recognise human faces and learn how to use tools.

Prof Lars Chittka of Queen Mary University of London has worked on many of the major studies of bee intelligence.

“If bees are that intelligent, maybe they can think and feel something, which are the building blocks of consciousness,” he says.

Prof Chittka’s experiments showed that bees would modify their behaviour following a traumatic incident and seemed to be able to play, rolling small wooden balls, which he says they appeared to enjoy as an activity.

These results have persuaded one of the most influential and respected scientists in animal research to make this strong, stark and contentious statement:

“Given all the evidence that is on the table, it is quite likely that bees are conscious,” he said.

It isn’t just bees. Many say that it is now time to think again, with the emergence of new evidence they say marks a “sea change” in thinking on the science of animal consciousness.

They include Prof Jonathan Birch of the London School of Economics.

“We have researchers from different fields starting to dare to ask questions about animal consciousness and explicitly think about how their research might be relevant to those questions,” says Prof Birch.

Anyone looking for a eureka moment will be disappointed.

Instead, a steady growth of evidence for a rethink has led to murmurings among the researchers involved. Now, many want a change in scientific thinking in the field.

What has been discovered may not amount to conclusive proof of animal consciousness, but taken together, it is enough to suggest that there is “a realistic possibility” that animals are capable of consciousness, according to Prof Birch.

This applies not only to what are known as higher animals such as apes and dolphins who have reached a more advanced stage of development than other animals. It also applies to simpler creatures, such as snakes, octopuses, crabs, bees and possibly even fruit flies, according to the group, who want funding for more research to determine whether animals are conscious, and if so, to what extent.

But if you’re wondering what we even mean by consciousness, you’re not alone. It’s something scientists can’t even agree on.

An early effort came in the 17th century from the French philosopher René Descartes, who said: “I think therefore I am.”

He added that “language is the only certain sign of thought hidden in a body”.

But those statements have muddied the waters for far too long, according to Prof Anil Seth of Sussex University, who has been wrestling with the definition of consciousness for much of his professional career.

“This unholy trinity, of language, intelligence and consciousness goes back all the way to Descartes,” he told BBC News, with a degree of annoyance at the lack of questioning of this approach until recently.

The “unholy trinity” is at the core of a movement called behaviourism, which emerged in the early 20th Century. It says that thoughts and feelings cannot be measured by scientific methods and so should be ignored when analysing behaviour.

Many animal behaviour experts were schooled in this view, but it is beginning to make way for a less human-centred approach, according to Prof Seth.

“Because we see things through a human lens, we tend to associate consciousness with language and intelligence. Just because they go together in us, it doesn’t mean they go together in general.”

Some are very critical of some uses of the word consciousness.

“The field is replete with weasel words and unfortunately one of those is consciousness,” says Prof Stevan Harnad of Quebec University.

“It is a word that is confidently used by a lot of people, but they all mean something different, and so it is not clear at all what it means.”

He says that a better, less weaselly, word is “sentience”, which is more tightly defined as the capacity to feel. “To feel everything, a pinch, to see the colour red, to feel tired and hungry, those are all things you feel,” says Prof Harnad.

Others who have been instinctively sceptical of the idea of animals being conscious say that the new broader interpretation of what it means to be conscious makes a difference.

Dr Monique Udell, from Oregon State University, says she comes from a behaviourist background.

“If we look at distinct behaviours, for example what species can recognise themselves in a mirror, how many can plan ahead or are able to remember things that happened in the past, we are able to test these questions with experimentation and observation and draw more accurate conclusions based on data,” she says.

“And if we are going to define consciousness as a sum of measurable behaviours, then animals that have succeeded in these particular tasks can be said to have something that we choose to call consciousness.”

This is a much narrower definition of consciousness than the new group is promoting, but a respectful clash of ideas is what science is all about, according to Dr Udell.

“Having people who take ideas with a grain of salt and cast a critical eye is important because if we don’t come at these questions in different ways, then it is going to be harder to progress.”

But what next? Some say far more animals need to be studied for the possibility of consciousness than is currently the case.

“Right now, most of the science is done on humans and monkeys and we are making the job much harder than it needs to be because we are not learning about consciousness in its most basic form,” says Kristin Andrews, a professor of philosophy specialising in animal minds at York University in Toronto.

Prof Andrews and many others believe that research on humans and monkeys is the study of higher level consciousness – exhibited in the ability to communicate and feel complex emotions – whereas an octopus or snake may also have a more basic level of consciousness that we are ignoring by not investigating it.

Prof Andrews was among the prime movers of the New York Declaration on Animal Consciousness, launched earlier this year, which has so far been signed by 286 researchers.

The short, four-paragraph declaration states that it is “irresponsible” to ignore the possibility of animal consciousness.

“We should consider welfare risks and use the evidence to inform our responses to these risks,” it says.

Chris Magee is from Understanding Animal Research, a UK body backed by research organisations and companies that undertake animal experiments.

He says animals are already assumed to be conscious when deciding whether to conduct experiments on them, and that UK regulations require experiments to be conducted only if the benefits to medical research outweigh the suffering caused.

“There is enough evidence for us to have a precautionary approach,” he says.

But there is also a lot we don’t know, including about decapod crustaceans such as crabs, lobsters, crayfish, and shrimp.

“We don’t know very much about their lived experience, or even basic things like the point at which they die.

“And this is important because we need to set rules to protect them either in the lab or in the wild.”

A 2021 government review led by Prof Birch assessed 300 scientific studies on the sentience of decapods and cephalopods, which include octopus, squid, and cuttlefish.

Prof Birch’s team found that there was strong evidence that these creatures were sentient, in that they could experience feelings of pain, pleasure, thirst, hunger, warmth, joy, comfort and excitement. The conclusions led the government to include these creatures in its Animal Welfare (Sentience) Act 2022.

“Issues related to octopus and crab welfare have been neglected,” says Prof Birch.

“The emerging science should encourage society to take these issues a bit more seriously.”

There are millions of different types of animals and precious little research has been carried out on how they experience the world. We know a bit about bees, and other researchers have shown indications of conscious behaviour in cockroaches and even fruit flies, but there are many more experiments to be done involving many other animals.

It is a field of study that the modern-day heretics who have signed the New York Declaration claim has been neglected, even ridiculed. Their approach, saying the unsayable and risking sanction, is nothing new.

Around the same time that René Descartes was saying “I think therefore I am”, the Catholic Church found the Italian astronomer Galileo Galilei “vehemently suspect of heresy” for suggesting that the Earth was not the centre of the Universe.

It was a shift in thinking that opened our eyes to a truer, richer picture of the Universe and our place in it.

Shifting ourselves from the centre of the Universe a second time may well do the same for our understanding of ourselves as well as the other living things with whom we share the planet.


June 13th 2024

How Fast Can Airplanes Go, Exactly?

An aviation expert breaks down the answer beyond “really, really fast.”

Published on June 5, 2024 at 3:38 PM

Science and human ingenuity have given society innumerable gifts: indoor plumbing, medicine, electricity. Sure, they’ve also given us drone warfare, AI, and those weird Amazon Go stores, but let’s focus on the positive. One of the most exciting advancements of the modern era is the commercial airplane, which has made traveling around our massive planet in less than 180 days a true possibility.

The earliest airplanes weren’t exactly speed demons. The first flyers traveled at max speeds of 31 miles per hour, back in 1903. Now, 121 years later, planes have gotten a little faster. But just how fast? It turns out the answer isn’t so simple: it’s a lot more complicated than a basic miles-per-hour figure.

Thrillist spoke with Luciano Stanzione, who leads AviationExperts.org, an aviation consulting agency, to better understand how fast the modern commercial airplane is capable of flying.

How fast can the fastest commercial airliner fly?

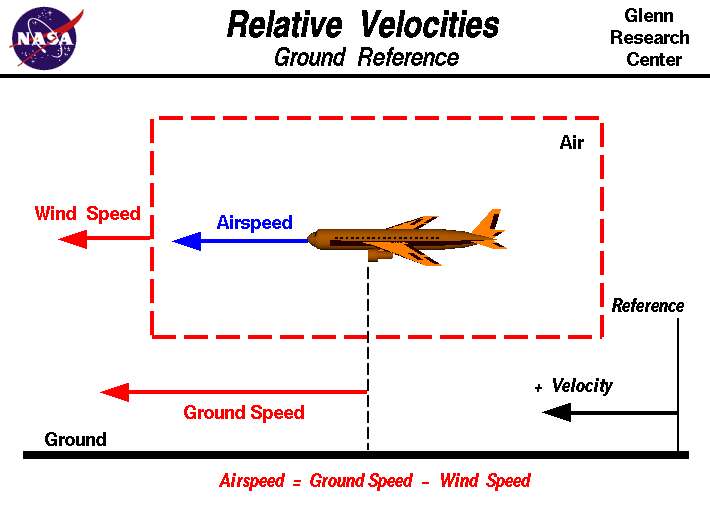

According to Stanzione, the speed of an airplane depends on many surprisingly complex factors, chief among them that airspeed is measured differently from ground speed.

“The airspeed is measured in KIAS (indicated air speed in knots) and in Mach speed (reference value to the speed of sound, used in higher altitudes),” Stanzione tells Thrillist. “Airliners travel, depending on the type of aircraft, loads, altitudes and routes, and specifics of the dispatch in a wide variety of ranges.”

So after accounting for all of those factors, what are the speeds that these commercial airliners can reach? “Speed is a complex aspect of aviation,” Stanzione says. The speed that airplanes can reach in the air can’t be as easily determined by the same metrics in which we measure the speed of objects on the ground. So bear with Stanzione for this scientific breakdown for a second.

“At lower altitudes, the usual max speeds are around 320/350 knots above 10,000 feet, and limited to 250 knots below 10,000. At higher altitudes, the max speeds vary depending on the aspects mentioned before, but usually, they go from 0.70 mach to 0.90 mach and above. (Over 0.90 is a high speed for an airliner),” Stanzione explains. “Those speeds are in relation to the mass of air within which the aircraft is moving. In reference to the ground, that could translate to a wide variety of ranges, depending on winds (which can be very strong up there) and other factors. A plane flying at the same airspeed above could be moving on the ground at 200 or 1,000 miles per hour.”
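To make the difference between airspeed and ground speed concrete, here is a minimal Python sketch of a simplified, flat-earth wind triangle. It works in true airspeed, which is not the same as the indicated airspeed (KIAS) Stanzione mentions; at cruising altitude the two differ considerably. The function name `ground_speed_kt` and the example values (a 480-knot true airspeed and a 150-knot wind) are illustrative assumptions, not figures from the article.

```python
import math

# 1 knot = 1 nautical mile (1,852 m) per hour; 1 statute mile = 1,609.344 m
KNOT_TO_MPH = 1852 / 1609.344


def ground_speed_kt(true_airspeed_kt: float, wind_speed_kt: float, wind_angle_deg: float) -> float:
    """Speed over the ground along the intended track, in knots (hypothetical helper).

    wind_angle_deg is the angle between the direction the wind blows *towards*
    and the track: 0 = pure tailwind, 180 = pure headwind, 90 = pure crosswind.
    Assumes the aircraft angles (crabs) into any crosswind to stay on track.
    """
    theta = math.radians(wind_angle_deg)
    tailwind = wind_speed_kt * math.cos(theta)   # wind component along the track
    crosswind = wind_speed_kt * math.sin(theta)  # wind component across the track
    if abs(crosswind) >= true_airspeed_kt:
        raise ValueError("crosswind too strong to hold this track")
    # Part of the airspeed is spent cancelling the crosswind; the rest,
    # plus any tailwind, carries the aircraft along its track.
    return math.sqrt(true_airspeed_kt ** 2 - crosswind ** 2) + tailwind


# Assumed example: same 480 kt true airspeed, 150 kt wind from three directions.
for label, angle in (("tailwind", 0), ("headwind", 180), ("crosswind", 90)):
    gs = ground_speed_kt(480, 150, angle)
    print(f"150 kt {label}: {gs:.0f} kt over the ground ({gs * KNOT_TO_MPH:.0f} mph)")
```

With these assumed numbers, a pure tailwind gives 630 knots over the ground and a pure headwind only 330 knots, even though the airspeed never changes; a pure crosswind also costs some ground speed, because the aircraft must angle into it.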

According to Pilot’s Institute, these speeds translate to roughly 587 mph to 669 mph for the fastest commercial airliners. That is nearly three times the flying speed of the world’s fastest bird, the peregrine falcon.
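The mph figure for a given Mach number is not fixed, because Mach is a ratio to the local speed of sound, which depends on air temperature and therefore on altitude. Below is a minimal Python sketch assuming the International Standard Atmosphere (15 °C at sea level, cooling 6.5 °C per kilometre up to 11 km, then constant up to 20 km) and dry air; the helper names are hypothetical, and this is not the method Pilot’s Institute used.

```python
import math

GAMMA_AIR = 1.4        # ratio of specific heats for dry air
R_AIR = 287.05         # specific gas constant for dry air, J/(kg*K)
MS_TO_MPH = 3600 / 1609.344
FT_TO_M = 0.3048


def isa_temperature_k(altitude_m: float) -> float:
    """Air temperature in the International Standard Atmosphere (0-20 km only)."""
    if not 0 <= altitude_m <= 20_000:
        raise ValueError("this sketch only covers 0-20 km")
    if altitude_m <= 11_000:
        return 288.15 - 0.0065 * altitude_m  # troposphere: cools 6.5 K per km
    return 216.65                            # lower stratosphere: constant


def speed_of_sound_ms(altitude_m: float) -> float:
    """Speed of sound a = sqrt(gamma * R * T) for dry air at that altitude."""
    return math.sqrt(GAMMA_AIR * R_AIR * isa_temperature_k(altitude_m))


def mach_to_mph(mach: float, altitude_m: float) -> float:
    """True airspeed in mph corresponding to a Mach number at a given altitude."""
    return mach * speed_of_sound_ms(altitude_m) * MS_TO_MPH


for mach in (0.70, 0.85, 0.90):
    print(f"Mach {mach:.2f}: {mach_to_mph(mach, 0):.0f} mph at sea level, "
          f"{mach_to_mph(mach, 35_000 * FT_TO_M):.0f} mph at 35,000 ft")
```

Under these assumptions Mach 1 works out to about 761 mph at sea level but only about 663 mph at 35,000 feet, which is one reason the same Mach number can correspond to quite different mph figures.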

What would it look like for an airplane to reach top speeds on the ground?

“That depends on many factors, some of them mentioned above,” Stanzione says. “Sometimes they could be traveling at the same ground speed and others, the comparison would not be even possible.”

What can affect the speeds of an airplane?

“Winds, weight, type of aircraft, load, center of gravity, weather immersion, atmospheric pressure, aircraft status, turbulence, plane limitations, fuel consumption limitations and many other factors,” Stanzione says.

Do airplanes always travel at their fastest speed?

No.

How fast will airliners be able to go in the future?

“That is impossible to determine but manufacturers are working again on high-speed jets, above sonic speeds,” Stanzione says. “For a few decades now, improvements on speed have not been major. Let’s see if new supersonic developments change that.”

What is the fastest airplane in the world?

The fastest planes in the world are not commercial jets that we can travel on as mere civilians. The world’s fastest planes are non-commercial jets, according to Stanzione:

- NASA X-43: Mach 9.6 (11,854 km/h or 7,380 mph)

- Lockheed SR-72: Mach 6 (7,200 km/h or 4,470 mph)

- North American X-15A-2: Mach 6.72 (7,300 km/h or 4,530 mph)

- MiG-31 Foxhound: Mach 2.83 (3,000 km/h or 1,864 mph)

- Lockheed SR-71 Blackbird: Mach 3.56 (3,500 km/h or 2,175 mph)

- F-22 Raptor: Mach 2.25 (2,200 km/h or 1,367 mph)

- Sukhoi Su-35S: Mach 2.25 (2,200 km/h or 1,367 mph)

- Dassault Rafale: Mach 2.0 (2,000 km/h or 1,243 mph)

- Eurofighter Typhoon: Mach 2.0 (2,000 km/h or 1,243 mph)

- MiG-25 Foxbat: Mach 3.2 (3,200 km/h or 1,988 mph)

The fastest commercial planes are the Boeing 747-8i and the Boeing 747, while the fastest private jet is the Gulfstream G700.


Opheli Garcia Lawler is a Senior Staff Writer at Thrillist. She holds a bachelor’s and master’s degree in Journalism from NYU’s Arthur L. Carter Journalism Institute. She’s worked in digital media for eight years, and before working at Thrillist, she wrote for Mic, The Cut, The Fader, Vice, and other publications. Follow her on Twitter @opheligarcia and Instagram @opheligarcia.

June 9th 2024

Einstein Once Wrote an Urgent Letter that Altered History Forever

While Einstein didn’t work directly on the Manhattan Project, he was responsible for its inception.

- Elana Spivack


On August 2, 1939, German-born physicist Albert Einstein penned a letter to President Franklin D. Roosevelt. It was one month before Germany invaded Poland, and more than two years before the attack on Pearl Harbor.

This letter, mentioned in the newly released Christopher Nolan movie Oppenheimer, set off its own chain reaction that led to the Manhattan Project, which began in August 1942 and culminated three years later in the devastating atomic bombings of Hiroshima and Nagasaki.

Einstein wrote to Roosevelt that “it may be possible to set up a nuclear chain reaction in a large mass of uranium, by which vast amounts of power and large quantities of new radium-like elements would be generated.” This achievement, which would almost certainly come about “in the immediate future,” could lead to the creation of “extremely powerful bombs.”

Einstein then urged the President to keep Government Departments informed of further developments, to secure a supply of uranium ore for the U.S., and to speed up experimental work. Although the letter was written in August, it didn’t reach Roosevelt’s hands until October of that year.

“Immediately after getting the letter, [Roosevelt] put a scientific committee to work looking into the possibility of making use of atomic power in the war,” Jeffrey Urbin, an education specialist at the Roosevelt Presidential Library and Museum, writes to Inverse in an email. That body became known as the Advisory Committee on Uranium.

Behind the Letter

Einstein mentions three other renowned physicists: Enrico Fermi, Leo Szilard, and Jean Frédéric Joliot. Each one independently contributed to the science behind the atomic bomb, building on decades of prior nuclear research that set the stage for this historic inflection point.

Hungarian-Jewish physicist Szilard conceived of the nuclear chain reaction in 1933. Building on James Chadwick’s discovery of the neutron in 1932, he reasoned that if a neutron could split an atom’s nucleus, releasing vast amounts of energy along with further neutrons, each of those neutrons could trigger another split producing still more neutrons, and so on. However, he couldn’t obtain research funding.

It wasn’t until December 1938, after Szilard had emigrated to America, that Otto Hahn and Fritz Strassmann discovered fission in uranium, which spurred him to experiment on neutron emission in the fission process. In March 1939, during a three-month research tenure at Columbia University, he showed that about two neutrons were released during fission for every neutron absorbed. “That night,” Szilard wrote, “there was very little doubt in my mind that the world was headed for grief.”
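Szilard’s finding of about two neutrons released per neutron absorbed is what lets a chain reaction grow exponentially. The Python sketch below is an idealised illustration, not a model of any real device: it assumes every released neutron goes on to split another uranium-235 nucleus, with none lost or escaping, and counts how many generations of fission it would take to split every atom in a kilogram. The function name and the 1 kg figure are hypothetical choices for the example.

```python
AVOGADRO = 6.02214076e23    # atoms per mole
U235_MOLAR_MASS_G = 235.04  # grams per mole of uranium-235 (approximate)


def generations_to_split(mass_g: float, k: float = 2.0) -> int:
    """Smallest number of fission generations G for which the cumulative
    fissions 1 + k + k**2 + ... + k**(G - 1) cover every atom in mass_g of U-235.

    Idealised assumption: starts from one neutron, and every neutron released
    goes on to split another nucleus (none escape or are absorbed otherwise).
    """
    if k <= 1:
        raise ValueError("k must exceed 1 for the reaction to grow")
    atoms = mass_g / U235_MOLAR_MASS_G * AVOGADRO
    total_fissions, fissions_this_generation, generations = 0.0, 1.0, 0
    while total_fissions < atoms:
        total_fissions += fissions_this_generation  # each neutron splits one nucleus...
        fissions_this_generation *= k               # ...releasing k neutrons for the next round
        generations += 1
    return generations


print(generations_to_split(1000))  # 1 kg with k = 2 → 82
```

Because the number of fissions doubles each generation, about 80 generations are enough to reach the roughly 2.6 × 10²⁴ atoms in a kilogram under these idealised assumptions; in reality, neutrons that escape or are absorbed without causing fission lower the effective multiplication factor.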

At the same time, the Italian physicist Fermi was investigating nuclear fission and chain reactions, likewise at Columbia. He had received the 1938 Nobel Prize in Physics for demonstrating new radioactive elements produced by neutron irradiation and for discovering nuclear reactions brought about by slow neutrons. After the discovery of nuclear fission in December 1938, Fermi and his team probed chain reactions in uranium.

French physicist Frédéric Joliot conducted extensive research on atomic structure with his wife, Irène Joliot-Curie (daughter of Marie Curie, whose laboratory assistant he had become at age 25). The couple jointly received the 1935 Nobel Prize in Chemistry for their discovery of artificial radioactivity: they created new radioactive isotopes by bombarding stable elements with alpha particles.

As Einstein intimates, the methodology for building atomic bombs was all but set, and the Nazis had taken over the uranium ore mines in Czechoslovakia. Urbin also writes that Hitler and his regime would build a super weapon without a second thought. “It was imperative that the Allies prevent them from being the first to do so. Einstein understood as well as anyone given he had fled Germany.”

The question became: Who could build a bomb first?

Enter: Oppenheimer

Meanwhile, American theoretical physicist J. Robert Oppenheimer had been teaching at the University of California, Berkeley since 1929, working closely with Ernest Lawrence and his Radiation Lab. In 1942 he took charge of theoretical work on fast-neutron physics; later that year he began investigating bomb design and then combined the two efforts to develop an atomic weapon. In the fall of that year, General Leslie R. Groves asked the 38-year-old Oppenheimer to spearhead Project Y, at the secret Los Alamos Laboratory in rural New Mexico.

However, Oppenheimer hadn’t always been entrenched in nuclear weapons research. Before 1940, he had sunk his teeth into quantum field theory, astrophysics, black holes, and more. Berkeley was also where Oppenheimer became involved in political activism. Partly because of Lawrence, he became interested in the physics of the atomic bomb in 1941. A brilliant physicist who received his Ph.D. at age 22, Oppenheimer proved a tireless researcher and an “excellent director” of Los Alamos.

In August 1939, Oppenheimer likely wasn’t even writing out equations for building an atomic bomb. Neither Einstein nor Roosevelt could’ve known at that point who he would become. Still, in retrospect, it seems almost inevitable that he became the momentous figure we know now.

More from Inverse

- How a 195-year-old discovery could build the future of energy

- 101 years ago, physicists made a critical discovery we still don’t understand

- “Holy Grail” Metallic Hydrogen Is Going to Change Everything

Advertisement

On August 2, 1939, German physicist Albert Einstein penned a letter to President Franklin D. Roosevelt. It was one month before Germany invaded Poland, and two years before the attack on Pearl Harbor.

This letter, mentioned in the newly released Christopher Nolan movie Oppenheimer, set off its own chain reaction that resulted in the Manhattan Project, which began in August 1942 and ended three years later with the devastating atomic bomb drops.

Einstein wrote to Roosevelt that “it may be possible to set up a nuclear chain reaction in a large mass of uranium, by which vast amounts of power and large quantities of new radium-like elements would be generated.” This achievement, which would almost certainly come about “in the immediate future,” could lead to the creation of “extremely powerful bombs.”

Einstein then urged the President to keep Government Departments abreast of further developments, especially about securing a supply of uranium ore for the U.S., and hasten experimental work. While the letter was written in August, it wouldn’t reach Roosevelt’s hands until October of that year.

“Immediately after getting the letter, [Roosevelt] put a scientific committee to work looking into the possibility of making use of atomic power in the war,” Jeffrey Urbin, an education specialist at the Roosevelt Presidential Library and Museum, writes to Inverse in an email. The weapons were coded “tube alloys” going forward.

Behind the Letter

Einstein mentions three other renowned physicists: Enrico Fermi, Leo Szilard, and Jean Frédéric Joliot. Each one independently contributed to the science behind the atomic bomb, building on decades of prior nuclear research that set the stage for this historic inflection point.

Hungarian-Jewish Szilard conceived of the nuclear chain reaction in 1933. In light of the neutron’s discovery by James Chadwick in 1932, Szilard contemplated that when an atom’s nucleus is split, vast energy stores are released, including neutrons that could instigate another split producing more neutrons, and so on, but he couldn’t procure research funding.

It wasn’t until December 1938, after Szilard had emigrated to America, that Otto Hahn and Fritz Strassman discovered fission in uranium, impelling him to conduct experiments on neutron emission in the fission process. In March 1939, during a three-month research tenure at Columbia University, he proved that for every neutron absorbed about two neutrons were released during fission. “That night,” Szilard wrote, “there was very little doubt in my mind that the world was headed for grief.”

All the while, Italian Fermi also investigated nuclear fission and chain reactions, also at Columbia. He had received the 1938 Nobel Prize in Physics for his work on neutron artificial radioactivity and nuclear reactions from slow neutrons. After the discovery of nuclear fission in December 1938, Fermi and his team probed chain reactions in uranium.

French Joliot conducted elaborate research on atomic structure with his wife, Iréne Joliot-Curie (daughter of Marie Curie, whom he assisted in the lab at age 25). Joliot and Curie jointly received the Nobel Prize in Chemistry in 1935 for their discovery of artificial radioactivity, which occurs when neutrons swarm stable isotopes.

As Einstein intimates, the methodology for building atomic bombs was all but set, and the Nazis had taken over the uranium ore mines in Czechoslovakia. Urbin also writes that Hitler and his regime would build a super weapon without a second thought. “It was imperative that the Allies prevent them from being the first to do so. Einstein understood as well as anyone given he had fled Germany.”

The question became: Who could build a bomb first?

Enter: Oppenheimer

Meanwhile, American theoretical physicist J. Robert Oppenheimer had been working in Ernest Lawrence’s Radiation Lab at the University of California, Berkeley since 1927. There, he developed a fast neutron theoretical physics in 1942. Later that year he began investigating bomb design and then combined the two disciplines with a fast neutron lab to develop an atomic weapon. In the fall of that year, General Leslie R. Groves asked the 38-year-old Oppenheimer to spearhead Project Y, at the secret Los Alamos Laboratory in rural New Mexico.

However, Oppenheimer hadn’t always been entrenched in nuclear weapons research. Before 1940, he had sunk his teeth into quantum field theory, astrophysics, black holes, and more. Berkeley also served as fertile ground for his political activism. Partly because of Lawrence, he became interested in the physics of the atomic bomb in 1941. A brilliant physicist who received his Ph.D. at age 22, Oppenheimer proved a tireless researcher and an “excellent director” of Los Alamos.

In August 1939, Oppenheimer likely wasn’t even writing out equations for building an atomic bomb. Neither Einstein nor Roosevelt could’ve known at that point who he would become. Still, in retrospect, it seems almost inevitable that he became the momentous figure we know now.

April 23rd 2024

Does light itself truly have an infinite lifetime?

In all the Universe, only a few particles are eternally stable. The photon, the quantum of light, has an infinite lifetime. Or does it?

Key Takeaways

- In the expanding Universe, for billions upon billions of years, the photon appears to be one of the very few particles with an infinite lifetime.

- Photons are the quanta that compose light, and in the absence of any other interactions that force them to change their properties, are eternally stable, with no hint that they would transmute into any other particle.

- But how well do we know this to be true, and what evidence can we point to in order to determine their stability? It’s a fascinating question that pushes us right to the limits of what we can scientifically observe and measure.

One of the most enduring ideas in all the Universe is that everything that exists now will someday see its existence come to an end. The stars, galaxies, and even the black holes that occupy the space in our Universe will all some day burn out, fade away, and otherwise decay, leaving what we think of as a “heat death” state: where no more energy can possibly be extracted, in any way, from a uniform, maximum entropy, equilibrium state. But, perhaps, there are exceptions to this general rule, and that some things will truly live on forever.

One such candidate for a truly stable entity is the photon: the quantum of light. All of the electromagnetic radiation that exists in the Universe is made up of photons, and photons, as far as we can tell, have an infinite lifetime. Does that mean that light will truly live forever? That’s what Anna-Maria Galante wants to know, writing in to ask:

“Do photons live forever? Or do they ‘die,’ and convert to some other particle? The light we see erupting from cosmic events over a verrrrry long past … we seem to know where it comes from, but where does it go? What is the life cycle of a photon?”

It’s a big and compelling question, and one that brings us right up to the edge of everything we know about the Universe. Here’s the best answer that science has today.

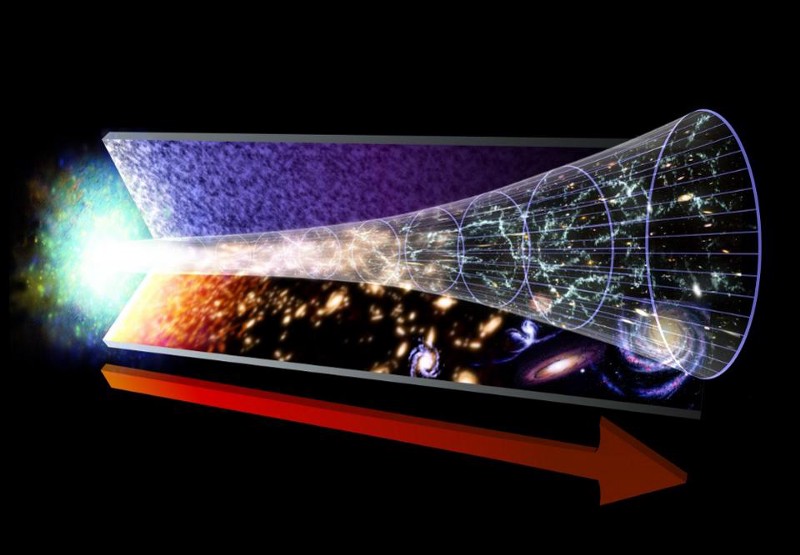

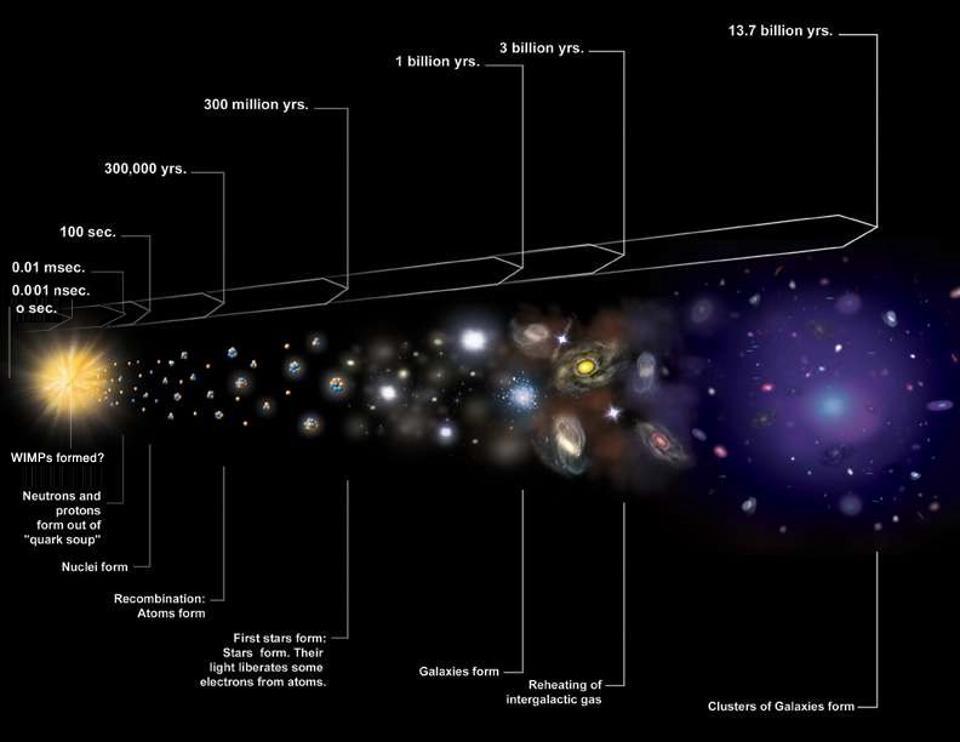

The first time the question of a photon having a finite lifetime came up, it was for a very good reason: we had just discovered the key evidence for the expanding Universe. The spiral and elliptical nebulae in the sky were shown to be galaxies, or “island Universes” as they were then known, well beyond the scale and scope of the Milky Way. These collections of millions, billions, or even trillions of stars were located at least millions of light-years away, placing them well outside of the Milky Way. Moreover, it was quickly shown that these distant objects weren’t just far away but appeared to be receding from us: the more distant they were, on average, the more their light was systematically shifted toward redder wavelengths.

Of course, by the time this data was widely available in the 1920s and 1930s, we had already learned about the quantum nature of light, which taught us that the wavelength of light determined its energy. We also had both special and general relativity well in hand, which taught us that once the light leaves its source, the only way you could change its frequency was to either:

- have it interact with some form of matter and/or energy,

- have the source and the observer moving either toward or away from one another,

- or to have the curvature properties of space itself change, such as due to a gravitational redshift/blueshift or an expansion/contraction of the Universe.

The first potential explanation, in particular, led to the formulation of a fascinating alternative cosmology: tired light cosmology.

First formulated in 1929 by Fritz Zwicky — yes, the same Fritz Zwicky who coined the term supernova, who first formulated the dark matter hypothesis, and who once tried to “still” the turbulent atmospheric air by firing a rifle through his telescope tube — the tired light hypothesis put forth the notion that propagating light loses energy through collisions with other particles present in the space between galaxies. The more space there was to propagate through, the logic went, the more energy would be lost to these interactions, and that would be the explanation, rather than peculiar velocities or cosmic expansion, for why light appeared to be more severely redshifted for more distant objects.

However, in order for this scenario to be correct, there are two predictions that should be true.

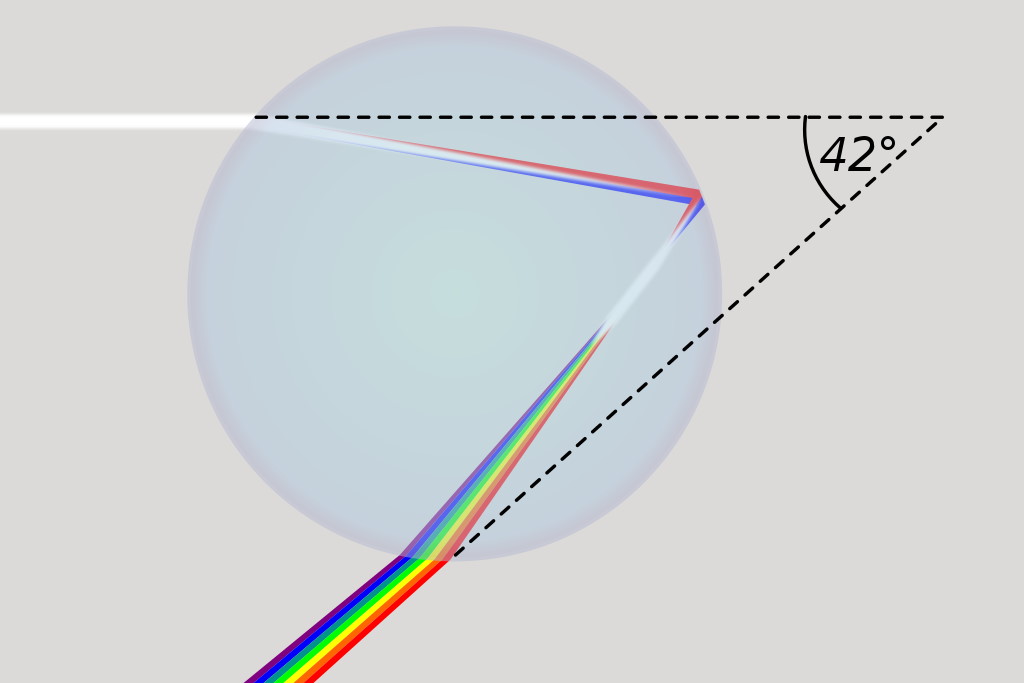

1.) When light travels through a medium, even a sparse medium, it slows down from the speed of light in vacuum to the speed of light in that medium, and the slowdown affects light of different frequencies by different amounts. Just as light passing through a prism splits into different colors, an intergalactic medium that interacted with light should slow different wavelengths down by different amounts, so the energy lost, and therefore the redshift, should also depend on wavelength. When that light re-enters a true vacuum, it will resume moving at the speed of light in a vacuum.

And yet, when we observed the light coming from sources at different distances, we found no wavelength-dependence to the amount of redshift that light exhibited. Instead, at all distances, all wavelengths of emitted light are observed to redshift by the exact same factor as all others; there is no wavelength-dependence to the redshift. Because of this null observation, the first prediction of tired light cosmology is falsified.
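
A minimal sketch of the test behind this null result, under stated assumptions: the redshift of a spectral line is z = (λ_observed − λ_emitted) / λ_emitted, and uniform cosmic expansion stretches every wavelength by the same factor 1 + z, so every line returns the same z. The rest wavelengths are approximate values for familiar hydrogen and oxygen lines; the “observed” spectra are synthetic, and the dispersive model is a made-up toy for contrast, not a real tired-light prediction.

```python
# Approximate rest wavelengths of a few spectral lines, in nanometres.
REST_NM = {"Ly-alpha": 121.567, "H-beta": 486.135, "[O III]": 500.684, "H-alpha": 656.281}


def redshift(emitted_nm: float, observed_nm: float) -> float:
    """z = (observed - emitted) / emitted."""
    return (observed_nm - emitted_nm) / emitted_nm


def wavelength_independent(observed: dict, tol: float = 1e-9) -> bool:
    """True if every line in `observed` yields the same redshift (within tol)."""
    zs = [redshift(REST_NM[name], observed[name]) for name in REST_NM]
    return max(zs) - min(zs) < tol


Z_TRUE = 0.5

# Cosmic expansion: every wavelength is stretched by the same factor (1 + z).
expanding = {name: lam * (1 + Z_TRUE) for name, lam in REST_NM.items()}

# Hypothetical toy "lossy medium": bluer light loses a larger fraction of its
# energy. The (500 / lam) ** 0.1 dependence is invented purely for illustration.
dispersive = {name: lam * (1 + Z_TRUE * (500.0 / lam) ** 0.1) for name, lam in REST_NM.items()}

for label, spectrum in (("expansion", expanding), ("toy dispersive", dispersive)):
    zs = {name: round(redshift(REST_NM[name], spectrum[name]), 4) for name in REST_NM}
    print(label, zs, "wavelength-independent:", wavelength_independent(spectrum))
```

Any real wavelength-dependent energy loss would show up as a spread in z across lines, which is exactly what the observations fail to find.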

But there’s a second prediction to contend with, as well.

2.) If more distant light loses more energy by passing through a greater length of a “lossy medium” than less distant light, then those more distant objects should appear to be blurred by a progressively greater and greater amount than the less distant ones.

And again, when we go to test this prediction, we find that it isn’t borne out by observations at all. More distant galaxies, when seen alongside less distant galaxies, appear just as sharp and high-resolution as the less distant ones. This is true, for example, for all five of the galaxies in Stephan’s Quintet, as well as for the background galaxies visible behind all five of the quintet’s members. This prediction is falsified as well.

While these observations are good enough to falsify the tired light hypothesis — and, in fact, were good enough to falsify it immediately, as soon as it was proposed — that’s only one possible way that light could be unstable. Light could either die out or convert into some other particle, and there’s a set of interesting ways to think about these possibilities.

The first arises simply from the fact that we have a cosmological redshift. Each and every photon that’s produced, irrespective of how it’s produced, whether thermally or from a quantum transition or from any other interaction, will stream through the Universe until it collides and interacts with another quantum of energy. But if you were a photon emitted from a quantum transition, unless you can engage in the inverse quantum reaction in rather rapid fashion, you’re going to begin traveling through intergalactic space, with your wavelength stretching due to the Universe’s expansion as you do. If you’re not lucky enough to be absorbed by a quantum bound state with the right allowable transition frequency, you’ll simply keep redshifting until your wavelength is longer than the longest one that could ever allow you to be absorbed by such a transition again.

However, there’s a second set of possibilities that exists for all photons: they can interact with an otherwise free quantum particle, producing one of any number of effects.

This can include scattering, where a charged particle — usually an electron — absorbs and then re-emits a photon. This involves an exchange of both energy and momentum, and can boost either the charged particle or the photon to higher energies, at the expense of leaving the other one with less energy.

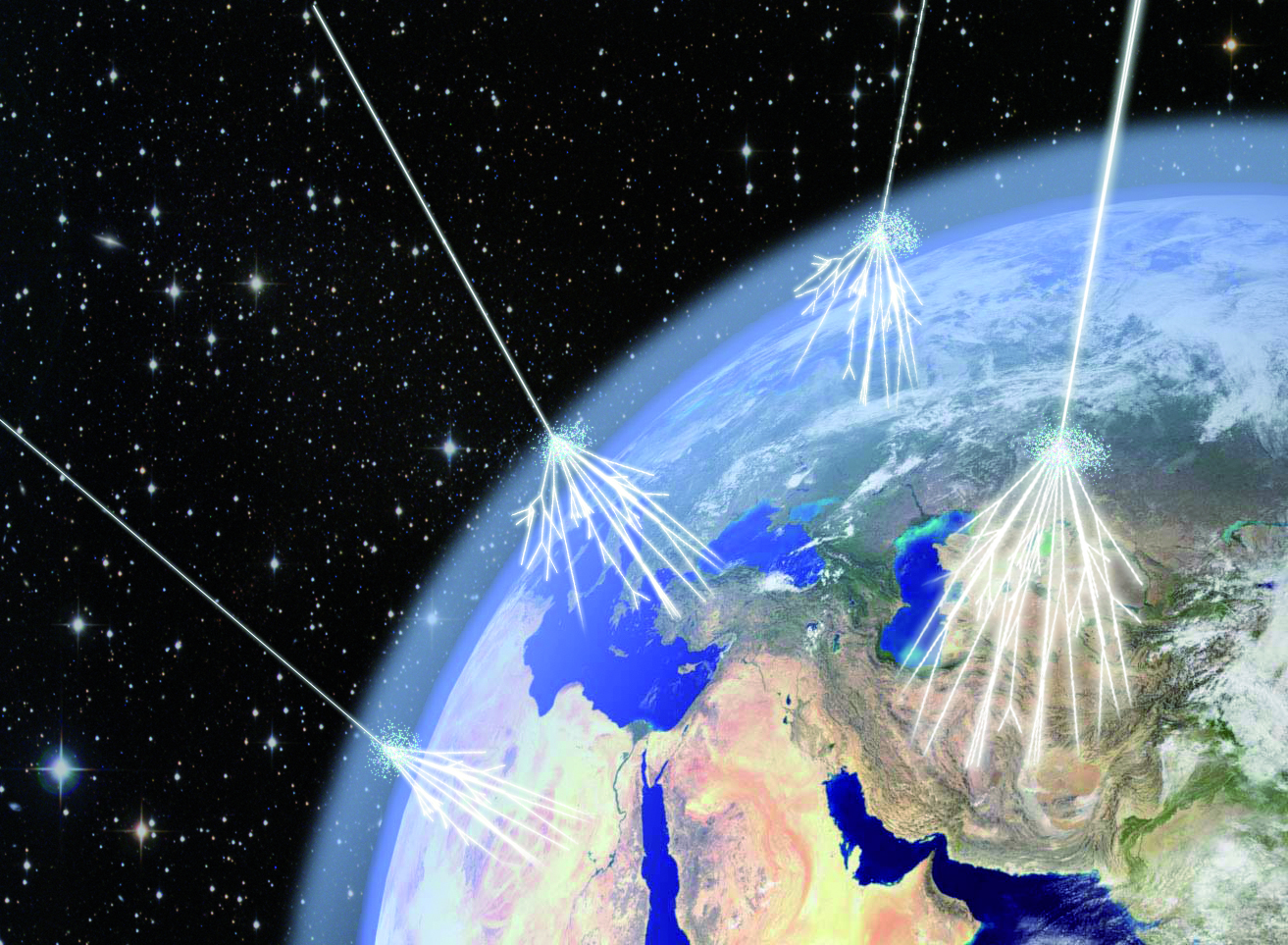

At high enough energies, the collision of a photon with another particle, even another photon, can spontaneously produce a particle-antiparticle pair if there’s enough available energy to make them both through Einstein’s E = mc². In fact, the highest-energy cosmic rays of all can do this even with the remarkably low-energy photons that are a part of the cosmic microwave background: the Big Bang’s leftover glow. For cosmic rays above ~10¹⁷ eV in energy, a single, typical CMB photon has a chance to produce electron-positron pairs. At even higher energies, more like ~10²⁰ eV, a collision with a CMB photon has a significant chance of producing a neutral pion, which robs cosmic rays of energy rather quickly. This is the primary reason why there’s a steep drop-off in the population of the highest-energy cosmic rays: they’re above this critical energy threshold.
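
Where these thresholds come from can be sketched with standard relativistic kinematics. For an ultra-relativistic proton of energy E_p hitting a photon of energy E_γ head-on, the invariant mass squared is s ≈ m_p² + 4E_pE_γ (natural units), and a final state of total mass M becomes possible once s ≥ M². The sketch assumes head-on collisions and a “typical” CMB photon of about 2.7 k_BT; both are simplifying assumptions, not figures from the article.

```python
# Threshold proton energy for p + gamma -> final state, head-on collision.
# Energies in eV (natural units): s ~ m_p^2 + 4 * E_p * E_gamma, need s >= M^2.

M_P = 938.272e6     # proton rest energy, eV
M_E = 0.511e6       # electron rest energy, eV
M_PI0 = 134.977e6   # neutral pion rest energy, eV
K_B = 8.617e-5      # Boltzmann constant, eV/K
T_CMB = 2.725       # present-day CMB temperature, K


def threshold_energy(final_mass: float, photon_energy: float) -> float:
    """Minimum proton energy (eV) to reach a final state of total rest energy final_mass."""
    return (final_mass**2 - M_P**2) / (4.0 * photon_energy)


# Assumption: a "typical" CMB photon carries roughly 2.7 k_B T of energy.
e_gamma = 2.7 * K_B * T_CMB

pair = threshold_energy(M_P + 2 * M_E, e_gamma)   # p + gamma -> p + e+ + e-
pion = threshold_energy(M_P + M_PI0, e_gamma)     # p + gamma -> p + pi0

print(f"typical CMB photon:  {e_gamma:.1e} eV")
print(f"e+e- pair threshold: {pair:.1e} eV")
print(f"pi0 threshold:       {pion:.1e} eV")
```

These land near the energy scales quoted above. Photons from the high-energy tail of the CMB spectrum lower the thresholds, while collisions that are not head-on raise them.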

In other words, even very low-energy photons can be converted into other particles — non-photons — by colliding with another high-enough-energy particle.

There’s yet a third way to alter a photon beyond cosmic expansion or through converting into particles with a non-zero rest mass: by scattering off of a particle that results in the production of still additional photons. In practically every electromagnetic interaction, or interaction between a charged particle and at least one photon, there are what are known as “radiative corrections” that arise in quantum field theories. For every standard interaction where the same number of photons exist at the beginning as at the end, there’s a little less than a 1% chance — more like 1/137, to be specific — that you’ll wind up radiating an additional photon in the end over the number you started off with.

And every time you have an energetic particle that possesses a positive rest mass and a positive temperature, those particles will also radiate photons away: losing energy in the form of photons.

Photons are very, very easy to create, and while it’s possible to absorb them by inducing the proper quantum transitions, most excitations will de-excite after a given amount of time. Just like the old saying that “What goes up must come down,” quantum systems that get excited to higher energies through the absorption of photons will eventually de-excite as well, producing at least the same number of photons, generally with the same net energy, as were absorbed in the first place.

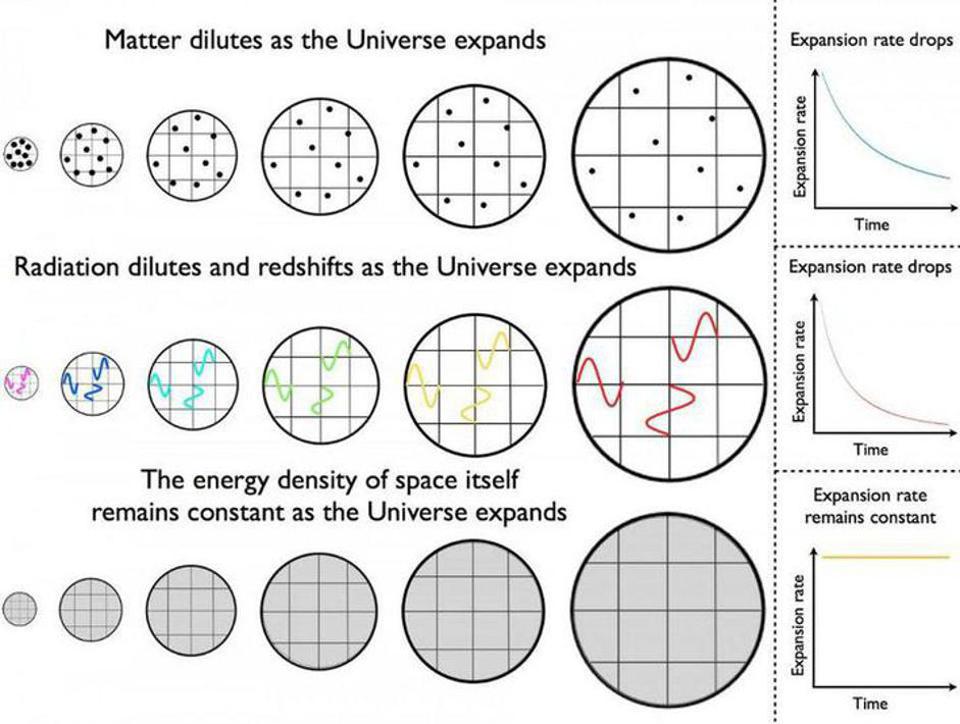

Given that there are so many ways to create photons, you’re probably salivating for ways to destroy them. After all, simply waiting for the effects of cosmic redshift to bring them down to an asymptotically low energy value and density is going to take an arbitrarily long time. Each time the Universe stretches to become larger by a factor of 2, the total energy density in the form of photons drops by a factor of 16: a factor of 2⁴. A factor of 8 comes about because the number of photons, despite all the ways there are to create them, remains relatively fixed, while doubling the distance between objects increases the volume of the observable Universe by a factor of 8: double the length, double the width, and double the depth.

The fourth-and-final factor of two comes from the cosmological expansion, which stretches the wavelength to double its original wavelength, thereby halving the energy-per-photon. On long enough timescales, this will cause the energy density of the Universe in the form of photons to asymptotically drop toward zero, but it will never quite reach it.
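
The factor-of-16 bookkeeping is the scaling law ρ ∝ a⁻³ × a⁻¹ = a⁻⁴, where a is the relative size of the Universe: a⁻³ from spreading a fixed number of photons over a larger volume, a⁻¹ from the stretched wavelength of each photon. A minimal sketch, assuming no photons are created or destroyed along the way:

```python
def photon_energy_density_ratio(stretch: float) -> float:
    """Factor by which photon energy density changes when the Universe grows by `stretch`."""
    number_density = stretch ** -3     # fixed photon count spread over a volume growing as stretch^3
    energy_per_photon = stretch ** -1  # wavelength grows by `stretch`, so E = hc / lambda shrinks
    return number_density * energy_per_photon


for doublings in range(1, 5):
    stretch = 2 ** doublings
    print(f"{stretch:>2}x larger -> energy density x {photon_energy_density_ratio(stretch):.6g}")
```

Each doubling divides the ratio by 16, but it stays positive for any finite stretch, which is the asymptotic approach toward zero without ever reaching it.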

You might try to get clever, and imagine some sort of exotic, ultra-low-mass particle that couples to photons, that a photon could convert into under the right conditions. Some sort of boson or pseudoscalar particle — like an axion or axino, a neutrino condensate, or some sort of exotic Cooper pair — could lead to precisely this sort of occurrence, but again, this only works if the photon is sufficiently high in energy to convert to the particle with a non-zero rest mass via E = mc². Once the photon’s energy redshifts below a critical threshold, that no longer works.

Similarly, you might imagine the ultimate way to absorb photons: by having them encounter a black hole. Once anything crosses over from outside the event horizon to inside it, it not only can never escape, but it will always add to the rest mass energy of the black hole itself. Yes, there will be many black holes populating the Universe over time, and they will grow in mass and size as time continues forward.

But even that will only occur up to a point. Once the density of the Universe drops below a certain threshold, black holes will begin decaying via Hawking radiation faster than they grow, and that means the production of even greater numbers of photons than went into the black hole in the first place. Over the next ~10¹⁰⁰ years or so, every black hole in the Universe will eventually decay away completely, with the overwhelming majority of the decay products being photons.
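
For a sense of scale, the standard estimates for a black hole of mass M are the Hawking temperature T = ħc³ / (8πGMk_B) and the evaporation time t ≈ 5120πG²M³ / (ħc⁴). The sketch uses the simplest version of these formulas (emission into photons only, greybody factors ignored), so treat its outputs as order-of-magnitude figures. Comparing T with the temperature of the surrounding radiation is one common way to frame when decay wins out over growth: a black hole colder than its surroundings absorbs more than it emits.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.0546e-34   # reduced Planck constant, J s
C = 2.998e8         # speed of light, m/s
K_B = 1.381e-23     # Boltzmann constant, J/K
M_SUN = 1.989e30    # solar mass, kg
YEAR_S = 3.156e7    # seconds per year
T_CMB = 2.725       # present-day CMB temperature, K


def hawking_temperature_k(mass_kg: float) -> float:
    """Hawking temperature T = hbar c^3 / (8 pi G M k_B), in kelvin."""
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)


def evaporation_time_years(mass_kg: float) -> float:
    """Simple estimate t = 5120 pi G^2 M^3 / (hbar c^4), converted to years."""
    return 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4) / YEAR_S


for label, mass in (("1 solar mass", M_SUN), ("1e11 solar masses", 1e11 * M_SUN)):
    print(f"{label}: T_H = {hawking_temperature_k(mass):.1e} K (CMB today: {T_CMB} K), "
          f"evaporation ~ {evaporation_time_years(mass):.0e} years")
```

A solar-mass black hole is tens of millions of times colder than today's CMB, so it still grows; the largest black holes take of order 10¹⁰⁰ years to evaporate once conditions finally favor decay.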

So will they ever die out? Not according to the currently understood laws of physics. In fact, the situation is even more dire than you probably realize. You can think of every photon that was or will be:

- created in the Big Bang,

- created from quantum transitions,

- created from radiative corrections,

- created through the emission of energy,

- or created via black hole decay,

and even if you wait for all of those photons to reach arbitrarily low energies due to the Universe’s expansion, the Universe still won’t be devoid of photons.

Why’s that?

Because the Universe still has dark energy in it. Just as an object with an event horizon, like a black hole, will continuously emit photons due to the difference in acceleration close to versus far away from the event horizon, so too will an object with a cosmological (or, more technically, a Rindler) horizon. Einstein’s equivalence principle tells us that observers cannot tell the difference between gravitational acceleration and acceleration due to any other cause, and any two unbound locations will appear to accelerate relative to one another owing to the presence of dark energy. The physics that results is identical: a continuous amount of thermal radiation gets emitted. Based on the value of the cosmological constant we infer today, that means a blackbody spectrum of radiation with a temperature of ~10⁻³⁰ K will always permeate all of space, no matter how far into the future we go.
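
The ~10⁻³⁰ K figure can be reproduced, at least roughly, from the Gibbons–Hawking horizon temperature T = ħH / (2πk_B), where H is the late-time expansion rate once dark energy dominates. The parameter values below (H₀ = 67.7 km/s/Mpc, Ω_Λ = 0.69, and a late-time rate H = H₀√Ω_Λ) are assumptions not stated in the article.

```python
import math

HBAR = 1.0546e-34    # reduced Planck constant, J s
K_B = 1.381e-23      # Boltzmann constant, J/K
MPC_M = 3.0857e22    # metres per megaparsec


def gibbons_hawking_temperature_k(hubble_rate_per_s: float) -> float:
    """Horizon temperature T = hbar H / (2 pi k_B) for a de Sitter expansion rate H."""
    return HBAR * hubble_rate_per_s / (2 * math.pi * K_B)


# Assumed cosmological parameters (not from the article).
H0 = 67.7e3 / MPC_M                  # today's expansion rate, s^-1
H_FUTURE = H0 * math.sqrt(0.69)      # late-time rate when dark energy dominates
T_FUTURE = gibbons_hawking_temperature_k(H_FUTURE)

print(f"late-time horizon temperature: {T_FUTURE:.1e} K")
```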

Even at its very end, no matter how far into the future we go, the Universe will always continue to produce radiation, ensuring that it will never reach absolute zero, that it will always contain photons, and that even at the lowest energies it will ever reach, there ought to be nothing else for the photon to decay or transition into. Although the energy density of the Universe will continue to drop as the Universe expands, and the energy inherent to any individual photon will continue to drop as time ticks onward and onward into the future, there will never be anything “more fundamental” that they transition into.

There are exotic scenarios we can cook up that will change the story, of course. Perhaps photons really do have a non-zero rest mass, which would cause them to travel slower than the speed of light once enough time has passed and they have redshifted to low enough energies. Perhaps photons really are inherently unstable, and there’s something else that’s truly massless, like a combination of gravitons, that they can decay into. And perhaps there’s some sort of phase transition that will occur, far into the future, where the photon will reveal its true instability and will decay into a yet-unknown quantum state.

But if all we have is the photon as we understand it in the Standard Model, then the photon is truly stable. A Universe filled with dark energy ensures, even as the photons that exist today redshift to arbitrarily low energies, that new ones will always get created, leading to a Universe with a finite and positive photon number and photon energy density at all times. We can only be certain of the rules to the extent that we’ve measured them, but unless there’s a big piece of the puzzle missing that we simply haven’t uncovered yet, we can count on the fact that photons might fade away, but they’ll never truly die.

The only way to beat the speed of light

There’s a speed limit to the Universe: the speed of light in a vacuum. Want to beat the speed of light? Try going through a medium!

Key Takeaways

- There’s an ultimate speed limit at which anything can travel through the fabric of space: the speed of light in a vacuum.

- At 299,792,458 m/s, it’s the ultimate cosmic speed limit, and the speed at which all massless particles must travel through empty space.

- But as soon as you’re not in a vacuum any longer, even light itself slows down. This gives rise to the one-and-only way to beat the speed of light: by sending it through a medium.

In our Universe, there are a few rules that everything must obey. Energy, momentum, and angular momentum are always conserved whenever any two quanta interact. The physics of any system of particles moving forward in time is identical to the physics of that same system reflected in a mirror, with particles exchanged for antiparticles, where the direction of time is reversed. And there’s an ultimate cosmic speed limit that applies to every object: nothing can ever exceed the speed of light, and nothing with mass can ever reach that vaunted speed.

Over the years, people have developed very clever schemes to try to circumvent this last limit. Theoretically, they’ve introduced tachyons as hypothetical particles that could exceed the speed of light, but tachyons are required to have imaginary masses, and do not physically exist. Within General Relativity, sufficiently warped space could create shortcuts shorter than the path light would otherwise have to traverse, but our physical Universe has no known wormholes. And while quantum entanglement can create “spooky” action at a distance, no information is ever transmitted faster than light.

But there is one way to beat the speed of light: enter any medium other than a perfect vacuum. Here’s the physics of how it works.

Light, you have to remember, is an electromagnetic wave. Sure, it also behaves as a particle, but when we’re talking about its propagation speed, it’s far more useful to think of it not only as a wave, but as a wave of oscillating, in-phase electric and magnetic fields. When it travels through the vacuum of space, there’s nothing to restrict those fields from traveling with the amplitude they’d naturally choose, defined by the wave’s energy, frequency, and wavelength. (Which are all related.)

But when light travels through a medium — that is, any region where electric charges (and possibly electric currents) are present — those electric and magnetic fields encounter some level of resistance to their free propagation. Of all the things that are free to change or remain the same, the property of light that remains constant is its frequency as it moves from vacuum to medium, from a medium into vacuum, or from one medium to another.

If the frequency stays the same, however, that means the wavelength must change, and since frequency multiplied by wavelength equals speed, that means the speed of light must change as the medium you’re propagating through changes.
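
The argument that frequency is fixed, so the wavelength must shrink along with the speed, takes only a few lines to check. This sketch assumes a refractive index n = 1.333 for water (an illustrative value not given in the article) and uses v = c/n together with frequency × wavelength = speed.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def in_medium(vacuum_wavelength_m: float, n: float) -> tuple[float, float, float]:
    """Return (frequency, speed, wavelength) inside a medium of refractive index n."""
    frequency = C / vacuum_wavelength_m  # unchanged when light crosses the boundary
    speed = C / n                        # light slows down in the medium
    wavelength = speed / frequency       # so the wavelength must shrink by the same factor
    return frequency, speed, wavelength


f, v, lam = in_medium(500e-9, 1.333)     # green light entering water (assumed n)
print(f"f = {f:.3e} Hz, v = {v:.3e} m/s, wavelength = {lam * 1e9:.1f} nm")
```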

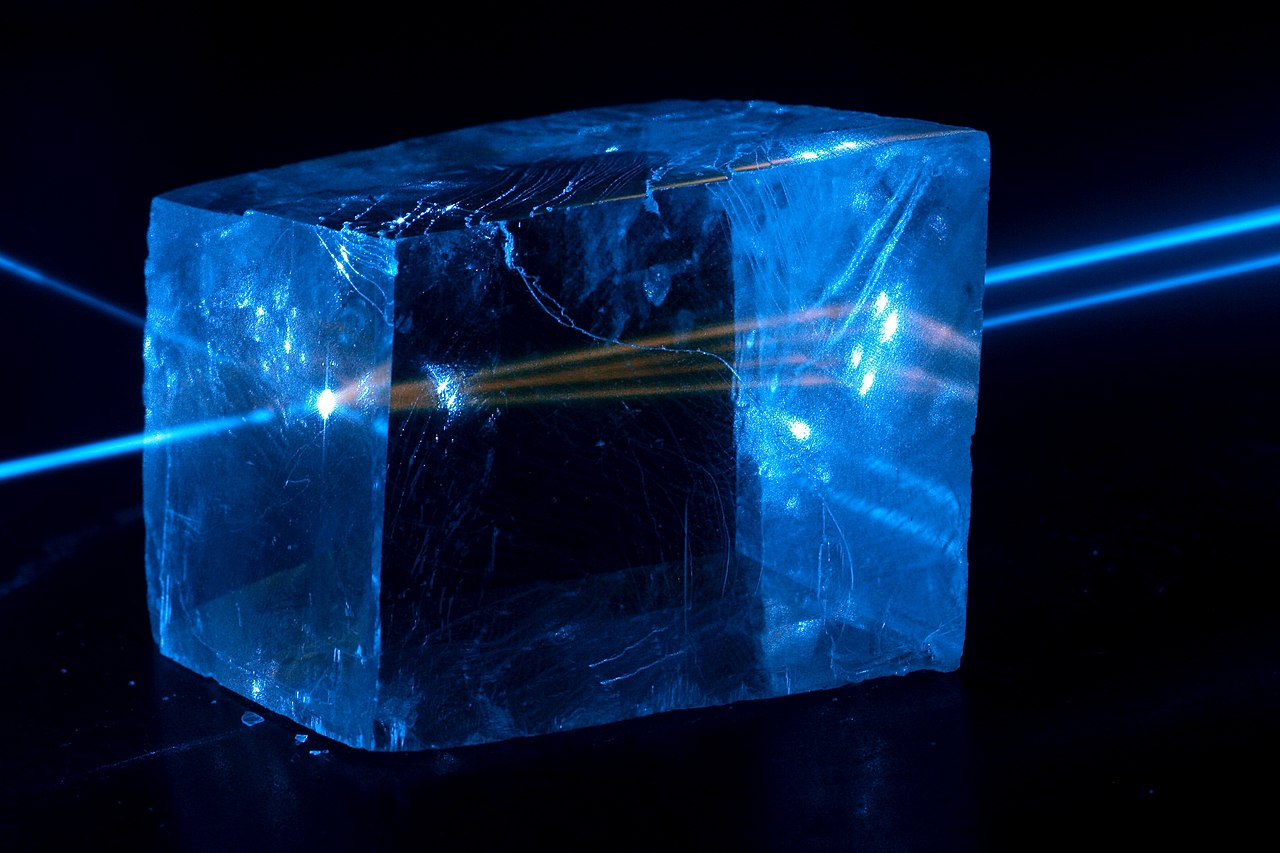

One spectacular demonstration of this is the refraction of light as it passes through a prism. White light, like sunlight, is made up of a continuous, wide range of wavelengths. Longer wavelengths, like red light, have lower frequencies, while shorter wavelengths, like blue light, have higher frequencies. In a vacuum, all wavelengths travel at the same speed: frequency multiplied by wavelength equals the speed of light. The bluer wavelengths carry more energy per photon than the redder ones.

When you pass this light through a dispersive medium like a prism, each wavelength responds slightly differently. In ordinary transparent materials such as glass and water, the higher the frequency (and the energy per photon) of the light, the more strongly the medium affects it. The frequency of all light remains unchanged, but the wavelength of higher-energy light shortens by a greater proportion than that of lower-energy light.

As a result, even though all light travels slower through a medium than through a vacuum, red light slows by a slightly smaller amount than blue light, leading to many fascinating optical phenomena, such as rainbows, which form as sunlight breaks into different wavelengths while passing through water droplets.
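
To put rough numbers on the red-versus-blue difference, this sketch uses Cauchy’s empirical approximation n(λ) = A + B/λ². The coefficients are approximate values often quoted for BK7 crown glass and should be treated as an assumption; any normally dispersive material behaves the same way qualitatively.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

# Cauchy approximation n(lambda) = A + B / lambda^2, lambda in micrometres.
# Approximate BK7 crown-glass coefficients (an assumption, for illustration only).
A, B = 1.5046, 0.00420


def refractive_index(wavelength_um: float) -> float:
    """Refractive index from the two-term Cauchy formula."""
    return A + B / wavelength_um**2


for name, wavelength_um in (("red", 0.70), ("blue", 0.45)):
    n = refractive_index(wavelength_um)
    print(f"{name:>4}: n = {n:.4f}, speed = {C / n:,.0f} m/s")
```

Blue light gets the larger index, so it travels more slowly and bends more, which is what spreads white light into a spectrum.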

In the vacuum of space, however, light has no choice — irrespective of its wavelength or frequency — but to travel at one speed and one speed only: the speed of light in a vacuum. This is also the speed that any form of pure radiation, such as gravitational radiation, must travel at, and also the speed, under the laws of relativity, that any massless particle must travel at.

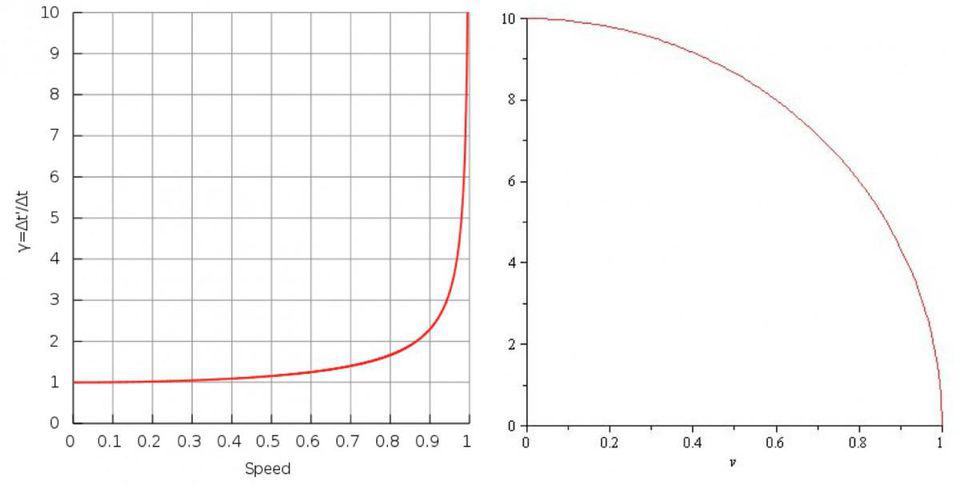

But most particles in the Universe have mass, and as a result, they have to follow slightly different rules. If you have mass, the speed of light in a vacuum is still your ultimate speed limit, but rather than being compelled to travel at that speed, it’s instead a limit that you can never attain; you can only approach it.

The more energy you put into your massive particle, the closer it can move to the speed of light, but it must always travel more slowly. The most energetic particles ever made on Earth, which are protons at the Large Hadron Collider, can travel incredibly close to the speed of light in a vacuum: 299,792,455 meters-per-second, or 99.999999% the speed of light.

No matter how much energy we pump into those particles, we can only add more “9s” to the right of that decimal place, however. We can never reach the speed of light.
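
How many “9s” a given energy buys follows from special relativity: γ = E/(mc²) and v/c = β = √(1 − 1/γ²). The sketch assumes a 6.5 TeV proton, one LHC beam energy, plus two hypothetical higher energies, and computes 1 − β in a rearranged form that avoids subtracting two nearly equal numbers.

```python
import math

C = 299_792_458.0    # speed of light in vacuum, m/s
M_P_GEV = 0.938272   # proton rest energy, GeV


def shortfall_from_c(energy_gev: float, mass_gev: float = M_P_GEV) -> float:
    """How far below c (in m/s) a particle of total energy E and rest energy m travels."""
    inv_gamma_sq = (mass_gev / energy_gev) ** 2
    # 1 - sqrt(1 - x) == x / (1 + sqrt(1 - x)): numerically stable when x is tiny
    one_minus_beta = inv_gamma_sq / (1.0 + math.sqrt(1.0 - inv_gamma_sq))
    return C * one_minus_beta


for energy in (6_500, 65_000, 650_000):  # GeV; 6.5 TeV assumed as the LHC case
    print(f"{energy:>7} GeV: {C - shortfall_from_c(energy):,.4f} m/s "
          f"({shortfall_from_c(energy):.2e} m/s below c)")
```

Each tenfold increase in energy cuts the shortfall by roughly a factor of 100, adding about two more 9s without ever reaching c.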

Or, more accurately, we can never reach the speed of light in a vacuum. That ultimate cosmic speed limit of 299,792,458 m/s is unattainable for massive particles, and it is simultaneously the speed at which all massless particles must travel.

But what happens, then, if we travel not through a vacuum, but through a medium instead? As it turns out, when light travels through a medium, its electric and magnetic fields feel the effects of the matter that they pass through. As soon as light enters a medium, this immediately changes the speed at which it travels. This is why, when you watch light enter or leave a medium, or transition from one medium to another, it appears to bend. Light that propagates unrestricted in a vacuum has its propagation speed and its wavelength set by the properties of whatever medium it travels through.

However, particles suffer a different fate. If a high-energy particle that was originally passing through a vacuum suddenly finds itself traveling through a medium, its behavior will be different from that of light.

First off, it won’t experience an immediate change in momentum or energy, as the electric and magnetic forces acting on it — which change its momentum over time — are negligible compared to the amount of momentum it already possesses. Rather than bending instantly, as light appears to, its trajectory changes can only proceed in a gradual fashion. When particles first enter a medium, they continue moving with roughly the same properties, including the same speed, as before they entered.

Second, the big events that can change a particle’s trajectory in a medium are almost all direct interactions: collisions with other particles. These scattering events are tremendously important in particle physics experiments, as the products of these collisions enable us to reconstruct whatever it is that occurred back at the collision point. When a fast-moving particle collides with a set of stationary ones, we call these “fixed target” experiments, and they’re used in everything from creating neutrino beams to giving rise to antimatter particles that are critical for exploring certain properties of nature.

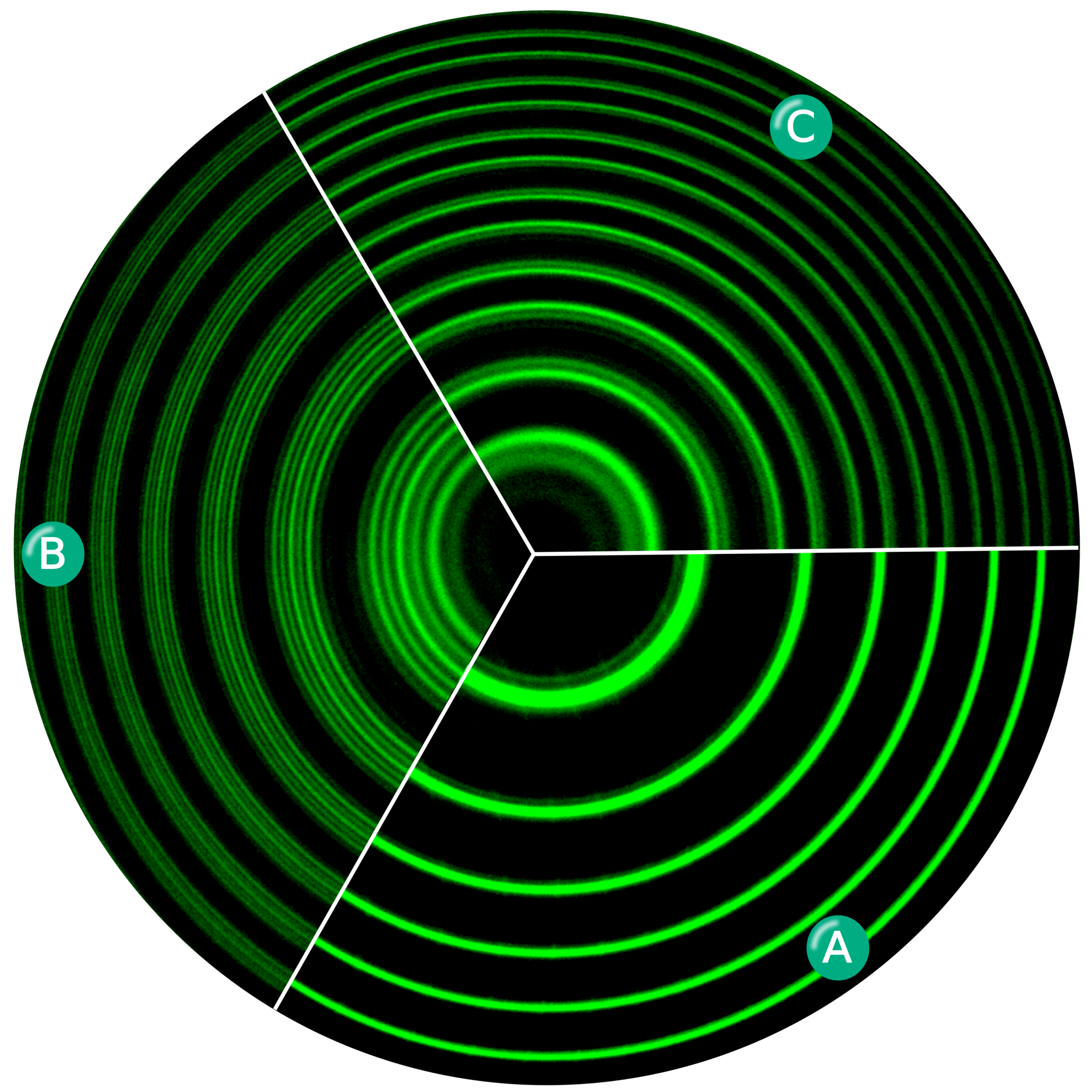

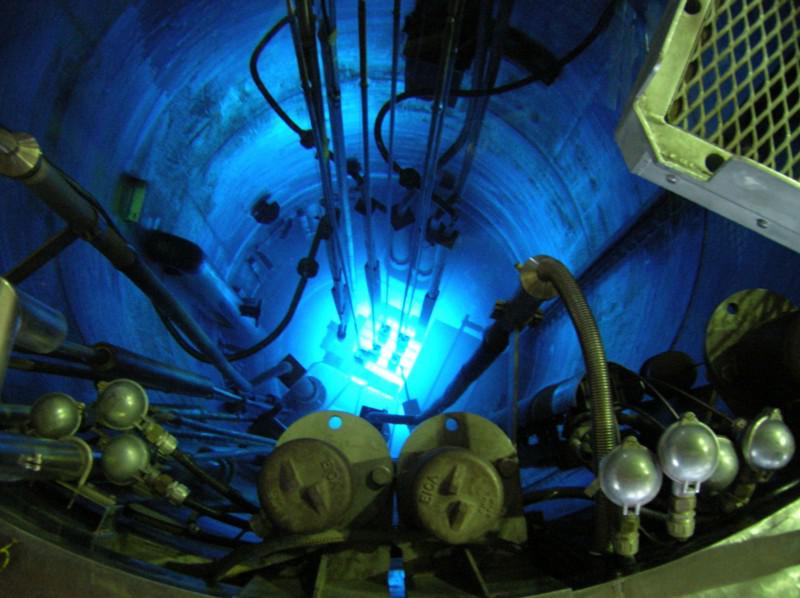

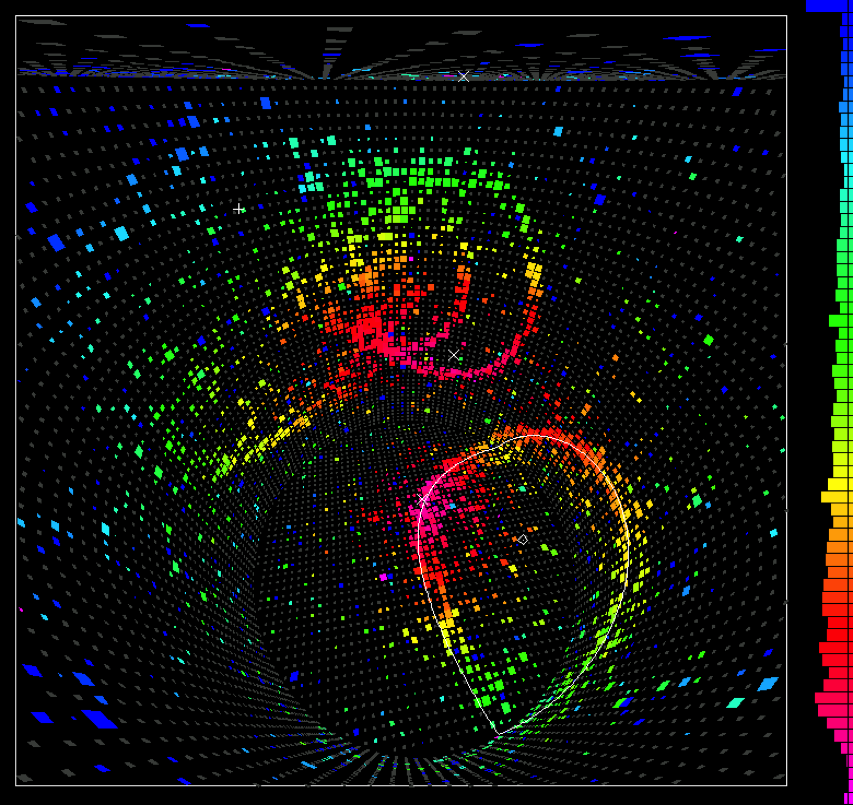

But the most interesting fact is this: particles that move slower than light in a vacuum, but faster than light in the medium that they enter, are actually breaking the speed of light. This is the one and only real, physical way that particles can exceed the speed of light. They can’t ever exceed the speed of light in a vacuum, but can exceed it in a medium. And when they do, something fascinating occurs: a special type of radiation — Cherenkov radiation — gets emitted.
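
The condition for Cherenkov emission is β > 1/n, where n is the medium’s refractive index. Turning that into a minimum kinetic energy, (γ − 1)mc² with γ = 1/√(1 − 1/n²), gives a quick sketch; n = 1.333 for water is an assumed value, and the electron and muon are illustrative choices.

```python
import math

N_WATER = 1.333  # assumed refractive index of water


def cherenkov_threshold_kinetic_mev(rest_energy_mev: float, n: float) -> float:
    """Minimum kinetic energy (MeV) for a charged particle to outrun light in a medium."""
    beta_min = 1.0 / n                              # must exceed the light speed c/n
    gamma_min = 1.0 / math.sqrt(1.0 - beta_min**2)  # Lorentz factor at that speed
    return (gamma_min - 1.0) * rest_energy_mev      # kinetic energy = (gamma - 1) m c^2


electron = cherenkov_threshold_kinetic_mev(0.511, N_WATER)
muon = cherenkov_threshold_kinetic_mev(105.66, N_WATER)
print(f"electron: {electron:.2f} MeV, muon: {muon:.1f} MeV")
```

Electrons need only about a quarter of an MeV of kinetic energy to glow in water, while the roughly 200-times-heavier muon needs about 54 MeV.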

Named for its discoverer, Pavel Cherenkov, it’s one of those physics effects that was first noted experimentally, before it was ever predicted. Cherenkov was studying radioactive samples that had been prepared, and some of them were being stored in water. The radioactive preparations seemed to emit a faint, bluish-hued light, and even though Cherenkov was studying luminescence — where gamma-rays would excite these solutions, which would then emit visible light when they de-excited — he was quickly able to conclude that this light had a preferred direction. It wasn’t a fluorescent phenomenon, but something else entirely.

Today, that same blue glow can be seen in the water tanks surrounding nuclear reactors: Cherenkov radiation.

Where does this radiation come from?

When you have a very fast particle traveling through a medium, that particle will generally be charged, and the medium itself is made up of positive (atomic nuclei) and negative (electrons) charges. The charged particle, as it travels through this medium, has a chance of colliding with one of the particles in there, but since atoms are mostly empty space, the odds of a collision are relatively low over short distances.

Instead, the particle has an effect on the medium that it travels through: it causes the particles in the medium to polarize (like charges repel and opposite charges attract) in response to the charged particle that’s passing through. Once the charged particle is out of the way, however, those electrons return to their ground state, and those transitions cause the emission of light. Specifically, they cause the emission of blue light in a cone-like shape, where the geometry of the cone depends on the particle’s speed and the speed of light in that particular medium.
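
The cone geometry follows from cos θ = 1/(nβ), where θ is the angle between the particle’s direction and the emitted light. A minimal sketch, again assuming n = 1.333 for water:

```python
import math
from typing import Optional


def cherenkov_angle_deg(beta: float, n: float) -> Optional[float]:
    """Cherenkov cone half-angle in degrees, from cos(theta) = 1 / (n * beta).

    Returns None when the particle is slower than light in the medium (no emission).
    """
    cos_theta = 1.0 / (n * beta)
    if cos_theta >= 1.0:
        return None
    return math.degrees(math.acos(cos_theta))


N_WATER = 1.333  # assumed refractive index of water
for beta in (0.70, 0.76, 0.90, 0.999):
    print(beta, cherenkov_angle_deg(beta, N_WATER))
```

Just above threshold the cone is nearly closed; as β approaches 1 in water it opens toward a maximum of about 41°, which is why the measured angle carries information about the particle’s speed.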

This is an enormously important property in particle physics, as it’s this very process that allows us to detect the elusive neutrino at all. Neutrinos hardly ever interact with matter. However, on the rare occasions that they do, they only impart their energy to one other particle.

What we can do, therefore, is to build an enormous tank of very pure liquid: liquid that doesn’t radioactively decay or emit other high-energy particles. We can shield it very well from cosmic rays, natural radioactivity, and all sorts of other contaminating sources. And then, we can line the outside of this tank with what are known as photomultiplier tubes: tubes that can detect a single photon, triggering a cascade of electronic reactions enabling us to know where, when, and in what direction a photon came from.

With large enough detectors, we can determine many properties about every neutrino that interacts with a particle in these tanks. The Cherenkov radiation that results, produced so long as the particle “kicked” by the neutrino exceeds the speed of light in that liquid, is an incredibly useful tool for measuring the properties of these ghostly cosmic particles.

The discovery and understanding of Cherenkov radiation was revolutionary in many ways, but it also led to a frightening application in the early days of laboratory particle physics experiments. A beam of energetic particles leaves no optical signature as it travels through air, but will cause the emission of this blue light if it passes through a medium where it travels faster than light in that medium. Physicists used to close one eye and stick their head in the path of the beam; if the beam was on, they’d see a “flash” of light due to the Cherenkov radiation generated in their eye, confirming that the beam was on. (Needless to say, this process was discontinued with the advent of radiation safety training.)

Still, despite all the advances that have occurred in physics over the intervening generations, the only way we know of to beat the speed of light is to find yourself a medium where you can slow that light down. We can only exceed that speed in a medium, and if we do, this telltale blue glow — which provides a tremendous amount of information about the interaction that gave rise to it — is our data-rich reward. Until warp drive or tachyons become a reality, the Cherenkov glow is the #1 way to go!

April 11th 2024

Could a Self-Sustaining Starship Carry Humanity to Distant Worlds?

Generation ships offer a tantalizing possibility: transporting humans on a permanent voyage to a new home among the stars.

By: Christopher Mason

The only barrier to human development is ignorance, and this is not insurmountable.

—Robert Goddard

Until 1992, when the first exoplanets were discovered, there had never been direct evidence of a planet found outside our solar system. Thirty years after this first discovery, thousands of additional exoplanets have been identified. Further, hundreds of these planets are within the “habitable zone,” indicating a place where liquid water, and maybe life, could be present. However, to get there, we need a brave crew to leave our solar system, and an even braver intergenerational crew to be born into a mission that, by definition, they could not choose. They would likely never see our solar system as anything more than a bright dot among countless others.

The idea of having multiple generations of humans live and die on the same spacecraft is actually an old one, first described by rocket engineer Robert Goddard in 1918 in his essay “The Ultimate Migration.” As he began to build rockets that could travel into space, he naturally imagined a craft that would keep going, onward and farther, until it reached a new star. More recently, the Defense Advanced Research Projects Agency (DARPA) and NASA launched a project called the 100 Year Starship, with the goal of fostering the research and technology needed for interstellar travel by 2100.

This concept of a species being liberated from its home planet was captivating to Goddard, but it has also been the dream of sailors and stargazers since the beginning of recorded history. Every child staring into the night sky envisions flying through it. But, usually, they also want to return to Earth. One day, we may need to construct a human-driven city aboard a spacecraft and embark on a generational voyage to another solar system — never meant to return.

Distance, Energy, Particle Assault

Such a grand mission would need to overcome many enormous challenges, the first and perhaps most obvious being distance. Not including the sun, the closest known star to Earth (Proxima Centauri) is 4.24 light-years, or roughly 25 trillion miles, away. Although 4.24 light-years is a mere hop on the cosmic scale, it would take quite some time to get there with our current technology.

The Parker Solar Probe, launched by NASA in 2018, is the fastest-moving object ever made by humans, clocking in at 430,000 miles per hour. But even at this speed, it would take 6,617 years to reach Proxima Centauri. Put another way, the trip would span roughly 220 human generations.
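The 6,617-year and 220-generation figures follow from simple division. The sketch below reproduces them to within rounding, assuming a constant cruising speed (no acceleration or braking) and a 30-year human generation; the generation length is our assumption, since the article does not state one.

```python
# Rough constant-speed travel-time estimate (ignores acceleration and orbital mechanics).
MILES_PER_LIGHT_YEAR = 5.879e12   # about 5.88 trillion miles per light-year
HOURS_PER_YEAR = 365.25 * 24      # Julian year, in hours


def travel_time_years(distance_ly: float, speed_mph: float) -> float:
    """Years needed to cover distance_ly light-years at a constant speed_mph."""
    distance_miles = distance_ly * MILES_PER_LIGHT_YEAR
    return distance_miles / speed_mph / HOURS_PER_YEAR


def generations(years: float, years_per_generation: float = 30.0) -> float:
    """Number of human generations, assuming (hypothetically) 30 years each."""
    return years / years_per_generation


years = travel_time_years(4.24, 430_000)
print(f"{years:,.0f} years, about {generations(years):.0f} generations")
```

With these constants the estimate comes out near 6,613 years and about 220 generations. The small gap from the article's 6,617 years reflects rounding choices (year length, miles per light-year), not a different method.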


The only way to decrease this number would be to move faster, which brings us to our second challenge: finding the energy needed for propulsion and sustenance. To shorten the trip (and reduce the number of generations it spans), we would need to increase our speed, either by burning more fuel or by developing spacecraft with technology orders of magnitude better than what is currently at hand. Regardless of the technology used, the acceleration would likely need to come from one or a combination of these sources: prepackaged (nonrenewable) fuel, energy collected from starlight (which would be more challenging between stars), elements like hydrogen in the interstellar medium, or gravitational slingshots around celestial bodies.

The latest advances in propulsion technology might help address this problem. Nuclear fusion offers a promising solution: it produces less radiation and converts fuel into energy more efficiently than other methods, which would enable spacecraft to reach much higher speeds. Nuclear fusion, as envisioned by Project Daedalus (British Interplanetary Society) and Project Longshot (U.S. Naval Academy/NASA), offers a path to interstellar travel within a single human lifetime. These studies suggest that a fusion-powered spacecraft could exceed 62 million miles per hour, potentially reducing travel times to nearby stars to just 45 years.
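To put the 62-million-mile-per-hour figure in context, the sketch below converts it to a fraction of light speed and estimates the cruise time to Proxima Centauri. It assumes the ship travels the whole 4.24 light-years at that constant speed; a real mission would also need acceleration and braking phases, which lengthen the trip.

```python
SPEED_OF_LIGHT_MPH = 670_616_629.0  # c expressed in miles per hour
MILES_PER_LIGHT_YEAR = 5.879e12     # about 5.88 trillion miles per light-year
HOURS_PER_YEAR = 365.25 * 24        # Julian year, in hours


def fraction_of_c(speed_mph: float) -> float:
    """Speed as a fraction of the speed of light."""
    return speed_mph / SPEED_OF_LIGHT_MPH


def cruise_years(distance_ly: float, speed_mph: float) -> float:
    """Constant-speed travel time; real missions add acceleration and braking phases."""
    return distance_ly * MILES_PER_LIGHT_YEAR / speed_mph / HOURS_PER_YEAR


speed = 62e6  # the fusion-drive speed quoted in the text, in mph
print(f"{fraction_of_c(speed):.1%} of light speed")
print(f"about {cruise_years(4.24, speed):.0f} years to Proxima Centauri")
```

This gives roughly 9% of light speed and about 46 years, consistent with the article's figure of roughly 45 years. At about 9% of light speed the Lorentz factor is only about 1.004, so the classical arithmetic is adequate here.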

Yet even if we solve the challenges of distance and energy by designing an incredibly fast, fuel-efficient engine, we face another problem: the ever-present threat of micrometeoroids. Consider that a grain of sand moving at 90 percent of the speed of light carries as much kinetic energy as a small nuclear bomb (two kilotons of TNT). Given the range of particle sizes floating around in space and the extremely high velocities proposed for this mission, any encounter could be catastrophic. This, too, would require further engineering to overcome, as the thick shielding available to us now would not only degrade over time but would likely be far too heavy. Possible solutions include lighter polymers that can be replaced and repaired in flight; extensive long-range monitoring to identify large objects before impact; or some kind of protective field projected ahead of the spacecraft, capable of deflecting or absorbing incoming particles.
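The two-kiloton comparison can be checked with the relativistic kinetic-energy formula, KE = (γ − 1)mc², where γ = 1/√(1 − v²/c²). The article does not give the grain's mass, so the masses below are illustrative assumptions; the helper `mass_for_yield` (a name chosen for this sketch) inverts the formula to find the mass that would carry a given yield.

```python
import math

C = 299_792_458.0             # speed of light, m/s
J_PER_KILOTON_TNT = 4.184e12  # conventional energy of one kiloton of TNT, joules


def lorentz_factor(beta: float) -> float:
    """gamma = 1 / sqrt(1 - beta^2), with beta = v / c."""
    return 1.0 / math.sqrt(1.0 - beta ** 2)


def kinetic_energy_joules(mass_kg: float, beta: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2."""
    return (lorentz_factor(beta) - 1.0) * mass_kg * C ** 2


def kilotons(energy_j: float) -> float:
    return energy_j / J_PER_KILOTON_TNT


def mass_for_yield(kt: float, beta: float) -> float:
    """Mass in kg whose kinetic energy at beta*c equals `kt` kilotons of TNT."""
    return kt * J_PER_KILOTON_TNT / ((lorentz_factor(beta) - 1.0) * C ** 2)


for mass_mg in (1, 10, 70):  # hypothetical grain masses in milligrams
    e = kinetic_energy_joules(mass_mg * 1e-6, 0.9)
    print(f"{mass_mg:>3} mg at 0.9c: {kilotons(e):.3f} kt TNT")

print(f"mass for 2 kt at 0.9c: {mass_for_yield(2, 0.9) * 1e6:.0f} mg")
```

Under these assumptions the two-kiloton figure corresponds to a grain of roughly 70 mg, while a 1 mg grain carries about 0.03 kt. Energy scales linearly with mass, so the danger grows directly with particle size.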

Physiological and Psychological Risks

As the NASA Twins Study, the SpaceX Inspiration4 mission, and additional NASA one-year and six-month missions have shown, the crews of a generation ship would face another critical issue: physiological and psychological stress. One way to sidestep the technological hurdles of making ships faster or protecting them from debris is instead to slow biology down, using hibernation or diapause. However, humans who overeat and lie around all day with little movement, as in simulated hibernation or bed-rest studies, run a higher risk of developing type 2 diabetes, obesity, heart disease, and even death. So, how do bears do it?

During hibernation or torpor, bears are nothing short of extraordinary. Their body temperature dips, their heart rate plummets to as low as five beats per minute, and for months they essentially do not eat, urinate, or defecate. Remarkably, they maintain their bone density and muscle mass. Part of their hibernation trick seems to come from turning down their sensitivity to insulin while maintaining stable blood glucose levels. Their heart becomes more efficient as well: a bear essentially activates an energy-saving “smart heart” mode, relying on only two of its four chambers to circulate thicker blood.

In 2019, a seminal study led by Joanna Kelley at Washington State University revealed striking changes in gene expression in bears during hibernation. Researchers used the same Illumina RNA-sequencing technology as NASA’s Twins Study to examine grizzly bears as they entered hyperphagia (when bears eat massive quantities of food to store energy as fat) and again during hibernation. They found coordinated, dynamic gene expression changes in tissues across the body during hibernation. Though the bears were fast asleep, their fatty tissue was anything but quiet: it showed extensive signs of metabolic activity, including changes in more than 1,000 genes. These “hibernation genes” are prime targets for people who would prefer to wait in stasis on the generation ship rather than stay awake.

Another biological mechanism we could use on the generation ship is diapause, which enables organisms to delay their own development in order to survive unfavorable environmental conditions (e.g., extreme temperature, drought, or food scarcity). Many moth species, including the Indian meal moth, can enter diapause at different developmental stages depending on environmental signals. If there is no food to eat, as in a barren desert, it makes sense to wait for better times, when rain brings nutrients back.

Diapause is not actually a rare event; embryonic diapause has been observed in more than 100 mammals. Even after fertilization, some mammalian embryos can “decide” to wait. Rather than immediately implanting in the uterus, the blastocyst (early embryo) can remain dormant, with little or no development taking place. This is somewhat like a rock climber who, when a storm arrives mid-ascent, pauses to study the possible routes and waits for the storm to pass. In diapause, the embryo remains unattached to the uterine wall and waits out a bad situation, such as a scarcity of food. The mother can thus remain pregnant for a variable length of time, awaiting improved environmental conditions. The technology to induce human hibernation or diapause does not exist yet, but one day it might.

The impact of weightlessness, radiation, and mission stress on the muscles, joints, bones, immune system, and eyes of astronauts is not to be underestimated. The physiological and psychological risks of such a mission are especially concerning given that most existing models are based on relatively short trips largely protected from radiation by Earth’s magnetosphere, with the most extensive study so far coming from Captain Scott Kelly’s 340-day trip.

Artificial gravity (essentially a spacecraft that spins to replicate the effects of Earth’s gravity) would address many of these issues, though not all. Another major challenge would be radiation. There are a number of ways to try to mitigate this risk: shielding around the ship, preemptive medications (actively being studied by NASA), frequent monitoring of cell-free DNA (cfDNA) for early detection of actionable mutations, or cellular and genetic engineering of astronauts to better protect against or respond to radiation. The best defense against radiation, especially on a long-term mission outside our solar system, would likely combine these efforts.
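How fast would such a ship need to spin? For a ring of radius r rotating at angular speed ω, the floor pushes on occupants with centripetal acceleration a = ω²r, so matching Earth’s gravity requires ω = √(g/r). The article does not size the ship, so the radii below are hypothetical; the sketch simply shows the trade-off between ring size and spin rate.

```python
import math

G_EARTH = 9.80665  # standard gravity, m/s^2


def spin_rate_rpm(radius_m: float, target_g: float = 1.0) -> float:
    """Revolutions per minute needed for target_g at the rim.

    Centripetal acceleration a = omega^2 * r, so omega = sqrt(a / r) in rad/s.
    """
    omega = math.sqrt(target_g * G_EARTH / radius_m)
    return omega * 60.0 / (2.0 * math.pi)


def rim_speed_mps(radius_m: float, target_g: float = 1.0) -> float:
    """Tangential speed of the rim: v = omega * r = sqrt(a * r)."""
    return math.sqrt(target_g * G_EARTH * radius_m)


for r in (10, 100, 225, 1000):  # hypothetical ring radii in metres
    print(f"radius {r:>5} m: {spin_rate_rpm(r):5.2f} rpm, "
          f"rim speed {rim_speed_mps(r):6.1f} m/s")
```

Because ω scales as 1/√r, halving the radius means spinning about 41% faster. A 100 m ring needs roughly 3 rpm for 1 g, while a 1 km ring needs under 1 rpm, which is why large rotating habitats are usually favored.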

But even if the radiation problem is solved, the psychological and cognitive strain of isolation and limited social interaction must be addressed. Just imagine if you had to work and live with your officemates and family, for your entire life, in the same building. While we can carefully select the first generation of astronauts for a long generation ship mission, their children might struggle to adapt to the social and environmental aspects of their new home.


Analog missions performed on Earth, such as the Mars-500 project, have shown that after 500 days in isolation with a small crew, most of the relationships were strained or even antagonistic. There are many descriptions of “space madness” in both fiction and nonfiction, but attempts to model it and link it to mission risk remain limited. There is simply no way to know how the same crew and its descendant generations would perform over 10 or 100 years, let alone thousands of years. Human history is replete with examples of strife, war, factions, and political backstabbing, but also with examples of cooperation, symbiosis, and shared governance in support of large goals (such as at research stations in Antarctica).

Choosing Our New Home

Before we launch the first-ever generation ships, we will need to gain a large amount of information about the candidate planets to which we are sending the first settlers. One way to do this is by sending probes to potential solar systems, gaining as much detail as possible to ensure ships have what they need before they are launched. Work on such ideas has already begun, as with the Breakthrough Starshot mission proposed by Yuri Milner, Stephen Hawking, and Mark Zuckerberg.

The idea is simple enough, and the physics was detailed by Kevin Parkin in 2018. A fleet of extremely light spacecraft carrying miniaturized cameras, navigation gear, communication equipment, thrusters, and a power supply could be “beamed” ahead with lasers. If each minispacecraft had a “lightsail” that the lasers could target, the whole fleet could be accelerated to cut the transit time. Such a “StarChip” could reach Proxima Centauri b, an exoplanet orbiting within the habitable zone of Proxima Centauri, in roughly 25 years, and its data, traveling at the speed of light, would take a little over four more years to reach Earth for us to review. We would then have more information about what might await a crew if that destination were chosen. The idea for this plan is credited to physicist Philip Lubin, who, in his 2015 article “A Roadmap to Interstellar Flight,” imagined an array of adjustable lasers that could focus on the StarChip with a combined power of 100 gigawatts to propel the probes to our nearest known star.
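The laser push comes from radiation pressure: light striking a sail delivers momentum, giving a force of P/c if the light is fully absorbed and 2P/c if it is perfectly reflected. The sketch below applies this to the 100-gigawatt array mentioned above and also computes the average speed implied by a 25-year trip to Proxima Centauri and the delay for data sent home at light speed. The 1-gram probe mass and the ideal, fully reflective sail are hypothetical assumptions, and the sketch ignores beam divergence, which in practice limits how long the lasers can keep pushing.

```python
C = 299_792_458.0   # speed of light, m/s
G_EARTH = 9.80665   # standard gravity, m/s^2


def sail_force_newtons(laser_power_w: float, reflectivity: float = 1.0) -> float:
    """Radiation-pressure force on a flat sail at normal incidence.

    Absorbed light contributes P/c and reflected light 2P/c, giving (1 + R) * P / c
    for reflectivity R (assuming no light passes through the sail).
    """
    return (1.0 + reflectivity) * laser_power_w / C


def cruise_fraction_of_c(distance_ly: float, years: float) -> float:
    """Average speed, as a fraction of c, needed to cover distance_ly in `years`."""
    return distance_ly / years


def signal_delay_years(distance_ly: float) -> float:
    """Signals travel at c, so the one-way delay in years equals the distance in light-years."""
    return distance_ly


PROBE_MASS_KG = 0.001  # hypothetical gram-scale probe plus sail (assumption)

force = sail_force_newtons(100e9)
accel_g = force / PROBE_MASS_KG / G_EARTH
print(f"thrust {force:.0f} N, acceleration about {accel_g:,.0f} g")
print(f"average speed for a 25-year trip: {cruise_fraction_of_c(4.24, 25):.0%} of c")
print(f"one-way signal delay: {signal_delay_years(4.24):.2f} years")
```

Even a few hundred newtons of thrust produces enormous acceleration on a gram-scale craft, which is why the concept depends on making the probes extremely light. A 25-year crossing implies an average of roughly 17% of light speed.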

The ideal scenario would be seeding the world in preparation for humans, similar to missions being conducted on Mars. If these StarChips work, they could be used to send microbes, as well as sensors, to other planets. They would face many challenges of their own, needing to survive the trip, decelerate, and then land on the new planet, which is no small feat. However, the conditions of such a journey fall within the range tolerated by known extremophiles on Earth, which casually survive extreme temperatures, radiation, and pressure. Tardigrades, for one, have already survived the vacuum of space and may be able to make the trip to another planet, and other “seed” organisms could be sent along, too. Such a “genesis probe,” first proposed by Claudius Gros in 2016 to seed other planets with Earth-based microbes, would obviously violate all current planetary-protection guidelines, but it might also be the best means to prepare a planet for our arrival. Ideally, this would be done only after robotic probes had conducted an extensive analysis of the planet, to reduce the chance of harming any life that may already exist there.

The Ethics of a Generation Ship